PyPy 2.6.0 release

PyPy 2.6.0 - Cameo Charm

- Py3k (supporting Python 3.x): We have released a Python 3.2.5 compatible version we call PyPy3 2.4.0, and are working toward a Python 3.3 compatible version

- STM (software transactional memory): We have released a first working version, and continue to try out new promising paths of achieving a fast multithreaded Python

- NumPy, which requires installation of our fork of upstream NumPy, available on Bitbucket

What is PyPy?

Highlights

- Python compatibility:

  - Improved support for TLS 1.1 and 1.2

  - Windows downloads now package a pypyw.exe in addition to pypy.exe

  - Support for the PYTHONOPTIMIZE environment variable (impacting the builtin __debug__ property)

  - Issues reported with our previous release were resolved after reports from users on our issue tracker at https://foss.heptapod.net/pypy/pypy/-/issues or on IRC at #pypy.

- New features:

  - Add preliminary support for a new lightweight statistical profiler, vmprof, which has been designed to accommodate profiling JITted code

- Numpy:

  - Support for object dtype via a garbage collector hook

  - Support for .can_cast and .min_scalar_type as well as beginning a refactoring of the internal casting rules

  - Better support for subtypes, via the __array_interface__, __array_priority__, and __array_wrap__ methods (still a work-in-progress)

  - Better support for ndarray.flags


- Performance improvements:

  - Slight improvement in frame sizes, improving some benchmarks

  - Internal refactoring and cleanups leading to improved JIT performance

  - Improved IO performance of the zlib and bz2 modules

  - We continue to improve the JIT's optimizations. Our benchmark suite is now over 7 times faster than CPython
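To illustrate what the PYTHONOPTIMIZE item means in practice, the sketch below starts a fresh child interpreter (whichever one runs the script, via sys.executable) with and without the variable set and reads back __debug__. Setting PYTHONOPTIMIZE to a non-empty value is equivalent to passing -O, which makes __debug__ False and skips assert statements. The helper name debug_flag is invented for this illustration.

```python
import os
import subprocess
import sys


def debug_flag(optimize_level=None):
    """Return the value of __debug__ seen by a fresh child interpreter.

    debug_flag is a hypothetical helper used only for this illustration.
    """
    env = dict(os.environ)
    env.pop("PYTHONOPTIMIZE", None)          # start from a clean setting
    if optimize_level is not None:
        env["PYTHONOPTIMIZE"] = str(optimize_level)
    out = subprocess.check_output(
        [sys.executable, "-c", "print(__debug__)"], env=env)
    return out.decode("ascii").strip() == "True"


if __name__ == "__main__":
    print("default:          __debug__ = %s" % debug_flag())
    print("PYTHONOPTIMIZE=1: __debug__ = %s" % debug_flag(1))
```

Code guarded by "if __debug__:" (and every assert) is therefore skipped when the variable is set.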

Cheers

The PyPy Team

CFFI 1.0.1 released

CFFI 1.0.1 final has now been released for CPython! CFFI is a (CPython and PyPy) module to interact with C code from Python.

The main news from CFFI 0.9 is the new way to build extension modules: the "out-of-line" mode, where you have a separate build script. When this script is executed, it produces the extension module. This comes with associated Setuptools support that fixes the headache of distributing your own CFFI-using packages. It also massively cuts down the import times.

Although this is a major new version, it should be fully backward-compatible: existing projects should continue to work, in what is now called the "in-line mode".

The documentation has been reorganized and split into a few pages. For more information about this new "out-of-line" mode, as well as more general information about what CFFI is and how to use it, read the Goals and proceed to the Overview.

Unlike the 1.0 beta 1 version, the final version also supports an out-of-line mode for projects using ffi.dlopen(), instead of only ffi.verify().

PyPy support: PyPy needs integrated support for efficient JITting, so you cannot install a different version of CFFI on top of an existing PyPy. You need to wait for the upcoming PyPy 2.6 to use CFFI 1.0---or get a nightly build.

My thanks again to the PSF (Python Software Foundation) for their financial support!

UPDATE: Bug with the first example, "ABI out-of-line": variadic functions (like printf, ending in a "..." argument) crash. Fixed in CFFI 1.0.2.

Awesome! Thanks for this. I think this is the best way to make extension modules for CPython and PyPy.

CFFI 1.0 beta 1

Finally! CFFI 1.0 is almost ready. CFFI gives Python developers a convenient way to call external C libraries. Here "Python" == "CPython or PyPy", but this post is mostly about the CPython side of CFFI, as the PyPy version is not ready yet.

On CPython, you can download the version "1.0.0b1" either by looking for the cffi-1.0 branch in the repository, or by saying

(Until 1.0 final is ready, pip install cffi will still give you version 0.9.2.)

The main news: you can now explicitly generate and compile a CPython C extension module from a "build" script. Then in the rest of your program or library, you no longer need to import cffi at all. Instead, you simply say:

from _my_custom_module import ffi, lib

Then you use ffi and lib just like you did in your verify()-based project in CFFI 0.9.2. (The lib is what used to be the result of verify().) The details of how you use them should not have changed at all, so that the rest of your program should not need any update.

Benefits

This is a big step towards standard practices for making and distributing Python packages with C extension modules:

- on the one hand, you need an explicit compilation step, triggered here by running the "build" script;

- on the other hand, what you gain in return is better control over when and why the C compilation occurs, and more standard ways to write distutils- or setuptools-based setup.py files (see below).

Additionally, this completely removes one of the main drawbacks of using CFFI to interface with large C APIs: the start-up time. In some cases it could be extreme on slow machines (cases of 10-20 seconds on ARM boards occur commonly). Now, the import above is instantaneous.

In fact, none of the pure Python cffi package is needed any more at runtime (it needs only an internal extension module from CFFI, which can be installed by doing "pip install cffi-runtime" [*] if you only need that). The ffi object you get by the import above is of a completely different class written entirely in C. The two implementations might get merged in the future; for now they are independent, but give two compatible APIs. The differences are that some methods like cdef() and verify() and set_source() are omitted from the C version, because it is supposed to be a complete FFI already; and other methods like new(), which take as parameter a string describing a C type, are faster now because that string is parsed using a custom small-subset-of-C parser, written in C too.

In practice

CFFI 1.0 beta 1 was tested on CPython 2.7 and 3.3/3.4, on Linux and to some extent on Windows and OS/X. Its PyPy version is not ready yet, and the only docs available so far are those below.

This is beta software, so there might be bugs and details may change. We are interested in hearing any feedback (irc.freenode.net #pypy) or bug reports.

To use the new features, create a source file that is not imported by the rest of your project, in which you place (or move) the code to build the FFI object:

# foo_build.py
import cffi

ffi = cffi.FFI()

ffi.cdef("""
    int printf(const char *format, ...);
""")

ffi.set_source("_foo", """
#include <stdio.h>
""")    # and other arguments like libraries=[...]

if __name__ == '__main__':
    ffi.compile()

The ffi.set_source() replaces the ffi.verify() of CFFI 0.9.2. Calling it attaches the given source code to the ffi object, but this call doesn't compile or return anything by itself. It may be placed above the ffi.cdef() if you prefer. Its first argument is the name of the C extension module that will be produced.

Actual compilation (including generating the complete C sources) occurs later, in one of two places: either in ffi.compile(), shown above, or indirectly from the setup.py, shown next.

If you directly execute the file foo_build.py above, it will generate a local file _foo.c and compile it to _foo.so (or the appropriate extension, like _foo.pyd on Windows). This is the extension module that can be used in the rest of your program by saying "from _foo import ffi, lib".

Distutils

If you want to distribute your program, you write a setup.py using either distutils or setuptools. Using setuptools is generally recommended nowadays, but using distutils is possible too. We show it first:

# setup.py
from distutils.core import setup

import foo_build

setup(
    name="example",
    version="0.1",
    py_modules=["example"],
    ext_modules=[foo_build.ffi.distutils_extension()],
)

This is similar to the CFFI 0.9.2 way. It only works if cffi was installed previously, because otherwise foo_build cannot be imported. The difference is that you use ffi.distutils_extension() instead of ffi.verifier.get_extension(), because there is no longer any verifier object if you use set_source().

Setuptools

The modern way is to write setup.py files based on setuptools, which can (among lots of other things) handle dependencies. It is what you normally get with pip install, too. Here is how you'd write it:

# setup.py
from setuptools import setup

setup(
    name="example",
    version="0.1",
    py_modules=["example"],
    setup_requires=["cffi>=1.0.dev0"],
    cffi_modules=["foo_build:ffi"],
    install_requires=["cffi-runtime"],   # see [*] below
)

Note that "cffi" is mentioned on three lines here:

- the first time is in setup_requires, which means that cffi will be locally downloaded and used for the setup.

- the second mention is a custom cffi_modules argument. This argument is handled by cffi as soon as it is locally downloaded. It should be a list of "module:ffi" strings, where the ffi part is the name of the global variable in that module.

- the third mention is in install_requires. It means that in order to install this example package, "cffi-runtime" must also be installed. This is (or will be) a PyPI entry that only contains a trimmed down version of CFFI, one that does not include the pure Python "cffi" package and its dependencies. None of it is needed at runtime.
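To make the "module:ffi" convention concrete, here is a small, hypothetical helper showing how such a string names a global variable inside an importable module. This is not cffi's own code (the post does not describe how cffi processes cffi_modules internally), and load_ffi_spec is an invented name; the example resolves "math:pi" only because the math module is always available.

```python
import importlib
import math


def load_ffi_spec(spec):
    """Resolve a "module:variable" string to the object it names.

    load_ffi_spec is a hypothetical helper for illustration; it is not
    part of cffi's API.
    """
    module_name, sep, attr = spec.partition(":")
    if not sep or not module_name or not attr:
        raise ValueError("expected 'module:variable', got %r" % (spec,))
    module = importlib.import_module(module_name)  # runs the module's top-level code
    return getattr(module, attr)                    # e.g. the global named 'ffi'


# With foo_build.py importable, load_ffi_spec("foo_build:ffi") would return its
# FFI object; a stdlib module stands in here so the sketch runs anywhere.
print(load_ffi_spec("math:pi") == math.pi)
```

Note that importing foo_build runs its top-level cdef() and set_source() calls but not ffi.compile(), because foo_build.py guards that call with if __name__ == '__main__'.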

[*] NOTE: The "cffi-runtime" PyPI entry is not ready yet. For now, use "cffi>=1.0.dev0" instead. Considering PyPy, which has got a built-in "_cffi_backend" module, the "cffi-runtime" package could never be upgraded there; but it would still be nice if we were able to upgrade the "cffi" pure Python package on PyPy. This might require some extra care in writing the interaction code. We need to sort it out now...

Thanks

Special thanks go to the PSF (Python Software Foundation) for their financial support, without which this work---er... it might likely have occurred anyway, but at an unknown future date :-)

(For reference, the amount I asked for (and got) is equal to one month of what a Google Summer of Code student gets, for work that will take a bit longer than one month. At least I personally am running mostly on such money, and so I want to thank the PSF again for their contribution to CFFI---and while I'm at it, thanks to all other contributors to PyPy---for making this job more than an unpaid hobby on the side :-)

Armin Rigo

This is great news! We're loving using CFFI via cryptography and PyOpenSSL.

An easier way to install cffi 1.0 beta releases is with

pip install --pre cffi

The --pre flag indicates pre-releases are acceptable for installation.

PyPy-STM 2.5.1 released

PyPy-STM 2.5.1 - Mawhrin-Skel

We're pleased to announce PyPy-STM 2.5.1, codenamed Mawhrin-Skel. This is the second official release of PyPy-STM. You can download this release here (64-bit Linux only):

https://pypy.org/download.html

Documentation:

https://pypy.readthedocs.org/en/latest/stm.html

PyPy is an implementation of the Python programming language which focuses on performance. So far we've been relentlessly optimizing for the single core/process scenario. PyPy STM brings to the table a version of PyPy that does not have the infamous Global Interpreter Lock, hence can run multiple threads on multiple cores. Additionally it comes with a set of primitives that make writing multithreaded applications a lot easier, as explained below (see TransactionQueue) and in the documentation.

Internally, PyPy-STM is based on the Software Transactional Memory plug-in called stmgc-c7. This version comes with a relatively reasonable single-core overhead but scales only up to around 4 cores on some examples; the next version of the plug-in, stmgc-c8, is in development and should address that limitation (as well as reduce the overhead). These versions only support 64-bit Linux; we'd welcome someone to port the upcoming stmgc-c8 to other (64-bit) platforms.

This release passes all regular PyPy tests, except for a few special cases. In other words, you should be able to drop in PyPy-STM instead of the regular PyPy and your program should still work. See current status for more information.

This work was done by Remi Meier and Armin Rigo. Thanks to all donors for crowd-funding the STM work so far! As usual, it took longer than we would have thought. I really want to thank the people that kept making donations anyway. Your trust is greatly appreciated!

What's new?

Compared to the July 2014 release, the main addition is a way to get reports about STM conflicts. This is an essential new feature.

To understand why this is so important, consider that if you already played around with the previous release, chances are that you didn't get very far. It probably felt like a toy: on very small examples it would nicely scale, but on any larger example it would not scale at all. You didn't get any feedback about why, but the underlying reason is that, in a typical large example, there are some STM conflicts that occur all the time and that won't be immediately found just by thinking. This prevents any parallelization.

Now PyPy-STM is no longer a black box: you have a way to learn about these conflicts, fix them, and try again. The tl;dr version is to run:

PYPYSTM=stmlog ./pypy-stm example.py

./print_stm_log.py stmlog

More details in the STM user guide.

Performance

The performance is now more stable than it used to be. More precisely, the best case is still "25%-40% single-core slow-down with very good scaling up to 4 threads", but the average performance seems not too far from that. There are still dark spots --- notably, the JIT is still slower to warm up, though it was improved a lot. These are documented in the current status section. Apart from that, we should not get more than 2x single-core slow-down in the worst case. Please report such cases as bugs!

TransactionQueue

As explained before, PyPy-STM is more than "just" a Python without GIL. It is a Python in which you can make minor tweaks to your existing, non-multithreaded programs and get them to use multiple cores. You identify medium- or large-sized, likely-independent parts of the code and ask PyPy-STM to run these parts in parallel. An example would be every iteration of some outermost loop over all items of a dictionary. This is done with a new API: transaction.TransactionQueue(). See help(TransactionQueue) or read more about it in the STM user guide.

This is not a 100% mechanical change: very likely, you need to hunt for and fix "STM conflicts" that prevent parallel execution (see docs). However, at all points your program runs correctly, and you can stop the hunt when you get acceptable performance. You don't get deadlocks or corrupted state.
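A minimal sketch of the dictionary-loop pattern described above, assuming that TransactionQueue offers add(function, *args) to queue one unit of work and run() to execute everything queued (check help(TransactionQueue) on your PyPy-STM build to confirm). The fallback class is a hypothetical serial stand-in, written only so that the sketch also runs outside PyPy-STM; it gives no parallelism.

```python
try:
    # Provided by PyPy-STM; assumed interface: add(f, *args, **kwds) and run().
    from transaction import TransactionQueue
except ImportError:
    class TransactionQueue(object):
        """Hypothetical serial stand-in: same add()/run() shape, no parallelism."""

        def __init__(self):
            self._pending = []

        def add(self, f, *args, **kwds):
            self._pending.append((f, args, kwds))

        def run(self):
            pending, self._pending = self._pending, []
            for f, args, kwds in pending:
                f(*args, **kwds)


def total_per_key(bigdict):
    """Sum each value list; every dictionary item becomes one queued unit of work."""
    results = {}

    def work(key, values):
        # Writes to the shared dict may cause STM conflicts; those cost
        # performance, not correctness.
        results[key] = sum(values)

    tq = TransactionQueue()
    for key, values in bigdict.items():
        tq.add(work, key, values)
    tq.run()
    return results


print(total_per_key({"a": [1, 2], "b": [3, 4, 5]}))
```

The loop body is unchanged apart from being wrapped in a function and queued; if the shared results dictionary turns out to be a conflict hot spot, the stmlog report is where you would see it.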

Thanks for reading!

Armin, Remi, Fijal

From your explanation in this post, STM sounds similar to OpenMP. Can you explain the differences?

→ https://openmp.org/wp/openmp-specifications/

This is explained in https://pypy.readthedocs.org/en/latest/stm.html#how-to-write-multithreaded-programs-the-10-000-feet-view

Nice - thanks!

»TransactionQueue is in part similar: your program needs to have “some chances” of parallelization before you can apply it. But I believe that the scope of applicability is much larger with TransactionQueue than with other approaches. It usually works without forcing a complete reorganization of your existing code, and it works on any Python program which has got latent and imperfect parallelism. Ideally, it only requires that the end programmer identifies where this parallelism is likely to be found«

If I understand that correctly, for STM the parallelism only needs to be likely and can be imperfect, because it can recover from errors.

This would fix a whole class of problems I experienced in OpenMP Fortran code: Turning a crash or (worse) undefined behavior into a mere performance loss - and that’s really cool!

Thank you for working on that!

the alternative is to ask for money for stuff that already works, and that's a terrible strategy. suggest better alternatives

Your comment suggests PyPy-STM doesn't actually work for you. If you have found a bug, please contribute a bug report, even if you only have an example of a program that should parallelize and doesn't; such bug reports are very useful. Alternatively, you're complaining that PyPy-STM is useless for you. Maybe I've been bad at explaining what you should and should not expect from it in the first place, so I've given you wrong expectations. In that case, sorry. (The 3rd alternative would be that you're just trolling, but let's discard it for now.)

PyPy 2.5.1 Released

PyPy 2.5.1 - Pineapple Bromeliad

We’re pleased to announce PyPy 2.5.1, Pineapple Bromeliad, following on the heels of 2.5.0. You can download the PyPy 2.5.1 release here:

We would like to thank our donors for the continued support of the PyPy project, and for those who donate to our three sub-projects, as well as our volunteers and contributors. We’ve shown quite a bit of progress, but we’re slowly running out of funds. Please consider donating more, or even better, convince your employer to donate, so we can finish those projects! The three sub-projects are:

- Py3k (supporting Python 3.x): We have released a Python 3.2.5 compatible version we call PyPy3 2.4.0, and are working toward a Python 3.3 compatible version

- STM (software transactional memory): We have released a first working version, and continue to try out new promising paths of achieving a fast multithreaded Python

- NumPy, which requires installation of our fork of upstream NumPy, available on Bitbucket

What is PyPy?

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7. It’s fast (pypy and cpython 2.7.x performance comparison) due to its integrated tracing JIT compiler.

This release supports x86 machines on most common operating systems (Linux 32/64, Mac OS X 64, Windows, and OpenBSD), as well as newer ARM hardware (ARMv6 or ARMv7, with VFPv3) running Linux.

While we support 32-bit Python on Windows, work on the native Windows 64-bit Python is still stalled; we would welcome a volunteer to handle that.

Highlights

- The past months have seen PyPy mature and grow, as RPython becomes the go-to solution for writing fast dynamic language interpreters. Our separation of RPython from the Python interpreter PyPy is now much clearer in the PyPy documentation, and we now have separate RPython documentation. Tell us what still isn’t clear, or even better, help us improve the documentation.

- We merged version 2.7.9 of python’s stdlib. From the python release notice:

  - The entirety of Python 3.4’s ssl module has been backported. See PEP 466 for justification.

  - HTTPS certificate validation using the system’s certificate store is now enabled by default. See PEP 476 for details.

  - SSLv3 has been disabled by default in httplib and its reverse dependencies due to the POODLE attack.

  - The ensurepip module has been backported, which provides the pip package manager in every Python 2.7 installation. See PEP 477.

- The garbage collector now ignores parts of the stack which did not change since the last collection, another performance boost

- errno and LastError are saved around cffi calls so things like pdb will not overwrite them

- We continue to asymptotically approach a score of 7 times faster than cpython on our benchmark suite, we now rank 6.98 on latest runs

- Issues reported with our previous release were resolved after reports from users on our issue tracker at https://foss.heptapod.net/pypy/pypy/-/issues or on IRC at #pypy.

Cheers

The PyPy Team

You mentioned the speed of PyPy over CPython. I'm interested in memory footprint too, in addition to speed-up. Please add a memory footprint metric to speed.pypy.org. It's harder to find a cheap VPS with a large amount of memory than one with a slow old CPU. It would be nice to know the minimal memory requirements for Django sites on PyPy.

Do the scores from speed.pypy.org apply to PyPy3 too? It was written earlier that PyPy3 was not as fast as PyPy2.

Memory footprint is tricky to measure. PyPy usually starts at 60M (as opposed to, say, 6M for CPython), but then its data structures are smaller. We'll try to get some measurements going at some point. Benchmarking is hard :-)

No, PyPy3 is not as fast as PyPy2. We should really look into it at some point.

Pydgin: Using RPython to Generate Fast Instruction-Set Simulators

Note: This is a guest blog post by Derek Lockhart and Berkin Ilbeyi from the Computer Systems Laboratory of Cornell University.

In this blog post I'd like to describe some recent work on using the RPython translation toolchain to generate fast instruction set simulators. Our open-source framework, Pydgin [a], provides a domain-specific language (DSL) embedded in Python for concisely describing instruction set architectures [b] and then uses these descriptions to generate fast, JIT-enabled simulators. Pydgin will be presented at the IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS) and in this post we provide a preview of that work. In addition, we discuss some additional progress updates that occurred after the publishing deadline and will not appear in the final paper [1].

Our area of research expertise is computer architecture, which is perhaps an unfamiliar topic for some readers of the PyPy blog. Below we provide some brief background on hardware simulation in the field of computer architecture, as well as some context as to why instruction set simulators in particular are such an important tool.

Simulators: Designing Hardware with Software

For computer architects in both academia and industry, a key step in designing new computational hardware (e.g., CPUs, GPUs, and mobile system-on-chips) is simulation [c] of the target system. While numerous models for simulation exist, three classes are particularly important in hardware design.

Functional Level models simulate the behavior of the target system. These models are useful for creating a "golden" reference which can serve as an executable specification or alternatively as an emulation platform for software development.

Cycle Level models aim to simulate both the behavior and the approximate timing of a hardware component. These models help computer architects explore design tradeoffs and quickly determine things like how big caches should be, how many functional units are needed to meet throughput targets, and how the addition of a custom accelerator block may impact total system performance.

Register-Transfer Level (RTL) models specify the behavior, timing, and resources (e.g., registers, wires, logic gates) of a hardware component. RTL models are bit-accurate hardware specifications typically written in a hardware description language (HDL) such as Verilog or VHDL. Once verified through extensive simulation, HDL specifications can be passed into synthesis and place-and-route tools to estimate area/energy/timing or to create FPGA or ASIC prototypes.

An instruction set simulator (ISS) is a special kind of functional-level model that simulates the behavior of a processor or system-on-chip (SOC). ISSs serve an important role in hardware design because they model the instruction set architecture (ISA) interface: the contractual boundary between hardware designers and software developers. ISSs allow hardware designers to quickly experiment with adding new processor instructions while also allowing software developers to build new compilers, libraries, and applications long before physical silicon is available.

Instruction-Set Simulators Must be Fast and Productive

Instruction-set simulators are more important than ever because the ISA boundary has become increasingly fluid. While Moore's law has continued to deliver larger numbers of transistors which computer architects can use to build increasingly complex chips, limits in Dennard scaling have restricted how these transistors can be used [d]. In simpler terms, thermal constraints (and energy constraints in mobile devices) have resulted in a growing interest in pervasive specialization: using custom accelerators to perform compute-intensive tasks more efficiently. This is already a reality for designers of mobile SOCs, who continually add new accelerator blocks and custom processor instructions in order to achieve higher performance with less energy consumption. ISSs are indispensable tools in this SOC design process for both hardware architects building the silicon and software engineers developing the software stack on top of it.

An instruction set simulator has two primary responsibilities: 1) accurately emulating the external execution behavior of the target, and 2) providing observability by accurately reproducing the target's internal state (e.g., register values, program counter, status flags) at each time step. However, other qualities critical to an effective ISS are simulation performance and designer productivity. Simulation performance is important because shorter simulation times allow developers to more quickly execute and verify large software applications. Designer productivity is important because it allows hardware architects to easily experiment with adding new instructions and estimate their impact on application performance.

To improve simulation performance, high-performance ISSs use dynamic binary translation (DBT) as a mechanism to translate frequently visited blocks of target instructions into optimized sequences of host instructions. To improve designer productivity, many design toolchains automatically generate ISSs from an architectural description language (ADL): a special domain-specific language for succinctly specifying instruction encodings and instruction semantics of an ISA. Very few existing systems have managed to encapsulate the design complexity of DBT engines such that high-performance, DBT-accelerated ISSs could be automatically generated from ADLs [e]. Unfortunately, tools which have done so are either proprietary software or leave much to be desired in terms of performance or productivity.

Why RPython?

Our research group learned of the RPython translation toolchain through our experiences with PyPy, which we had used in conjunction with our Python hardware modeling framework to achieve significant improvements in simulation performance [2]. We realized that the RPython translation toolchain could potentially be adapted to create fast instruction set simulators since the process of interpreting executables comprised of binary instructions shared many similarities with the process of interpreting bytecodes in a dynamic-language VM. In addition, we were inspired by PyPy's meta-tracing approach to JIT-optimizing VM design which effectively separates the process of specifying a language interpreter from the optimization machinery needed to achieve good performance.

Existing ADL-driven ISS generators have tended to use domain-specific languages that require custom parsers or verbose C-based syntax that distracts from the instruction specification. Creating an embedded-ADL within Python provides several benefits over these existing approaches including a gentler learning curve for new users, access to better debugging tools, and easier maintenance and extension by avoiding a custom parser. Additionally, we have found that the ability to directly execute Pydgin ISA descriptions in a standard Python interpreter such as CPython or PyPy significantly helps debugging and testing during initial ISA exploration. Python's concise, pseudocode-like syntax also manages to map quite closely to the pseudocode specifications provided by many ISA manuals [f].

The Pydgin embedded-ADL

Defining a new ISA in the Pydgin embedded-ADL requires four primary pieces of information: the architectural state (e.g. register file, program counter, control registers), the bit encodings of each instruction, the instruction fields, and the semantic definitions for each instruction. Pydgin aims to make this process as painless as possible by providing helper classes and functions where possible.

For example, below we provide a truncated example of the ARMv5 instruction encoding table. Pydgin maintains encodings of all instructions in a centralized encodings data structure for easy maintenance and quick lookup. The user-provided instruction names and bit encodings are used to automatically generate decoders for the simulator. Unlike many ADLs, Pydgin does not require that the user explicitly specify instruction types or mask bits for field matching because the Pydgin decoder generator can automatically infer decoder fields from the encoding table.

encodings = [
  ['adc',  'xxxx00x0101xxxxxxxxxxxxxxxxxxxxx'],
  ['add',  'xxxx00x0100xxxxxxxxxxxxxxxxxxxxx'],
  ['and',  'xxxx00x0000xxxxxxxxxxxxxxxxxxxxx'],
  ['b',    'xxxx1010xxxxxxxxxxxxxxxxxxxxxxxx'],
  ['bl',   'xxxx1011xxxxxxxxxxxxxxxxxxxxxxxx'],
  ['bic',  'xxxx00x1110xxxxxxxxxxxxxxxxxxxxx'],
  ['bkpt', '111000010010xxxxxxxxxxxx0111xxxx'],
  ['blx1', '1111101xxxxxxxxxxxxxxxxxxxxxxxxx'],
  ['blx2', 'xxxx00010010xxxxxxxxxxxx0011xxxx'],
  # ...
  ['teq',  'xxxx00x10011xxxxxxxxxxxxxxxxxxxx'],
  ['tst',  'xxxx00x10001xxxxxxxxxxxxxxxxxxxx'],
]
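As an illustration of how a decoder can be derived from such a table without hand-written masks, the sketch below turns each pattern into a mask (1 wherever the pattern fixes a bit) and a match value (the fixed bits themselves), then tries the entries in order. This is a hypothetical linear-scan decoder, not Pydgin's generated decoder, which may organize the lookup differently; compile_encodings, decode, and ARM_SUBSET are invented names, and ARM_SUBSET copies five entries from the table above, written with 'x' * n only to make the 32-bit width easy to check.

```python
def compile_encodings(encodings):
    """Turn [name, pattern] pairs into (name, mask, match) triples.

    Patterns are 32 characters, most significant bit first: '0' and '1'
    are fixed bits, 'x' marks a bit that belongs to some field.
    """
    table = []
    for name, pattern in encodings:
        if len(pattern) != 32:
            raise ValueError("%s: pattern must have 32 bits" % name)
        mask = match = 0
        for ch in pattern:
            mask <<= 1
            match <<= 1
            if ch != 'x':          # fixed bit: the word must have this value here
                mask |= 1
                match |= int(ch)
        table.append((name, mask, match))
    return table


def decode(table, word):
    """Return the name of the first entry whose fixed bits all match word."""
    for name, mask, match in table:
        if (word & mask) == match:
            return name
    raise ValueError("unknown instruction: 0x%08x" % word)


# Five entries copied from the table above.
ARM_SUBSET = [
    ['adc', 'xxxx00x0101' + 'x' * 21],
    ['add', 'xxxx00x0100' + 'x' * 21],
    ['and', 'xxxx00x0000' + 'x' * 21],
    ['b',   'xxxx1010' + 'x' * 24],
    ['bl',  'xxxx1011' + 'x' * 24],
]

table = compile_encodings(ARM_SUBSET)
print(decode(table, 0xE0812003))  # ADD r2, r1, r3 with the "always" condition -> add
```

Because the first match wins, a linear scan like this needs more specific encodings listed before more general ones; the condition, register, and operand fields all live in the 'x' bits and are extracted separately.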

A major goal of Pydgin was ensuring instruction semantic definitions map to ISA manual specifications as much as possible. The code below shows one such definition for the ARMv5 add instruction. A user-defined Instruction class (not shown) specifies field names that can be used to conveniently access bit positions within an instruction (e.g. rd, rn, S). Additionally, users can choose to define their own helper functions, such as the condition_passed function, to create more concise syntax that better matches the ISA manual.

def execute_add( s, inst ):
  if condition_passed( s, inst.cond() ):
    a    = s.rf[ inst.rn() ]
    b, _ = shifter_operand( s, inst )
    result = a + b
    s.rf[ inst.rd() ] = trim_32( result )

    # update the status flags only when the S bit is set
    if inst.S():
      if inst.rd() == 15:
        raise FatalError('Writing SPSR not implemented!')
      s.N = (result >> 31)&1
      s.Z = trim_32( result ) == 0
      s.C = carry_from( result )
      s.V = overflow_from_add( a, b, result )

    # writing the PC acts as a branch, so do not advance it
    if inst.rd() == 15:
      return

  s.rf[PC] = s.fetch_pc() + 4

Compared to the ARM ISA Reference manual shown below, the Pydgin instruction definition is a fairly close match. Pydgin's definitions could certainly be made more concise by using a custom DSL; however, this would lose many of the debugging benefits afforded by a well-supported language such as Python and would additionally require a custom parser that would likely need modification for each new ISA.

if ConditionPassed(cond) then
    Rd = Rn + shifter_operand
    if S == 1 and Rd == R15 then
        if CurrentModeHasSPSR() then
            CPSR = SPSR
        else UNPREDICTABLE
    else if S == 1 then
        N Flag = Rd[31]
        Z Flag = if Rd == 0 then 1 else 0
        C Flag = CarryFrom(Rn + shifter_operand)
        V Flag = OverflowFrom(Rn + shifter_operand)
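The helpers called by execute_add are not shown in the post. As a hedged illustration of what they have to compute, here are hypothetical 32-bit versions of trim_32, carry_from, and overflow_from_add that follow the manual's definitions (CarryFrom: the unsigned sum does not fit in 32 bits; OverflowFrom for addition: both operands share a sign bit that the result does not). They assume both operands are already unsigned 32-bit values, and add_with_flags is an invented wrapper; Pydgin's own implementations may differ in detail.

```python
MASK32 = 0xFFFFFFFF


def trim_32(value):
    """Keep the low 32 bits, as a 32-bit register would."""
    return value & MASK32


def carry_from(result):
    """CarryFrom: 1 if the unsigned sum overflowed into bit 32."""
    return (result >> 32) & 1


def overflow_from_add(a, b, result):
    """OverflowFrom for addition: a and b agree in sign, the result does not."""
    r = trim_32(result)
    return (((a ^ r) & (b ^ r)) >> 31) & 1


def add_with_flags(a, b):
    """Hypothetical wrapper: return (Rd, N, Z, C, V) for a 32-bit add."""
    result = a + b                 # full-width sum, may exceed 32 bits
    rd = trim_32(result)
    n = (rd >> 31) & 1             # N Flag = Rd[31]
    z = int(rd == 0)               # Z Flag = 1 if Rd == 0
    c = carry_from(result)
    v = overflow_from_add(a, b, result)
    return rd, n, z, c, v


print(add_with_flags(0x7FFFFFFF, 1))  # -> (2147483648, 1, 0, 0, 1)
```

The example adds 1 to the largest positive signed value: the unsigned sum fits (C = 0), but the signed result wraps negative, so N and V are both set.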

Creating an ISS that can run real applications is a rather complex task, even for a bare-metal simulator with no operating system such as Pydgin. Each system call in the C library must be properly implemented, and bootstrapping code must be provided to set up the program stack and architectural state. This is a very tedious and error-prone process, which Pydgin tries to encapsulate so that it remains as transparent to the end user as possible. In future versions of Pydgin we hope to make bootstrapping more painless and support a wider variety of C libraries.

Pydgin Performance

In order to achieve good simulation performance from Pydgin ISSs, significant work went into adding appropriate JIT annotations to the Pydgin library components. These optimization hints, which allow the JIT generated by the RPython translation toolchain to produce more efficient code, have been specifically selected for the unique properties of ISSs. For the sake of brevity, we do not talk about the exact optimizations here but a detailed discussion can be found in the ISPASS paper [1]. In the paper we evaluate two ISSs, one for a simplified MIPS ISA and another for the ARMv5 ISA, whereas below we only discuss results for the ARMv5 ISS.

The performance of Pydgin-generated ARMv5 ISSs was compared against several reference ISSs: the gem5 ARM atomic simulator (gem5), interpretive and JIT-enabled versions of SimIt-ARM (simit-nojit and simit-jit), and QEMU. Atomic models from the gem5 simulator were chosen for comparison due to their wide usage amongst computer architects [g]. SimIt-ARM was selected because it is currently the highest-performance ADL-generated DBT-ISS publicly available. QEMU has long been held up as the gold standard for DBT simulators due to its extremely high performance; however, QEMU is generally intended for use as an emulator rather than a simulator [c] and therefore achieves its excellent performance at the cost of observability. Unlike QEMU, all other simulators in our study faithfully track architectural state at the instruction level rather than the block level. Pydgin ISSs were generated with and without JITs using the RPython translation toolchain in order to help quantify the performance benefit of the meta-tracing JIT.

The figure below shows the performance of each ISS executing applications from the SPEC CINT2006 benchmark suite [h]. Benchmarks were run to completion on the high-performance DBT-ISSs (simit-jit, pydgin-jit, and QEMU), but were terminated after only 10 billion simulated instructions for the non-JITed interpretive ISSs (these would require many hours, in some cases days, to run to completion). Simulation performance is measured in MIPS [i] and plotted on a log scale due to the wide variance in performance. The WHMEAN group summarizes each ISS's performance across all benchmarks using the weighted harmonic mean.
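The WHMEAN summary is the weighted harmonic mean sum(w_i) / sum(w_i / x_i) over the per-benchmark rates x_i. The post does not say which weights were used, so the sketch below simply takes them as an argument. One useful property, under the assumption that each weight is the benchmark's simulated instruction count: the weighted harmonic mean of MIPS then equals total instructions divided by total simulation time across the suite.

```python
def whmean(rates, weights):
    """Weighted harmonic mean of per-benchmark rates (e.g. MIPS)."""
    if not rates or len(rates) != len(weights):
        raise ValueError("need one weight per rate")
    return sum(weights) / sum(w / r for w, r in zip(weights, rates))

# Equal weights: 2 / (1/100 + 1/400) = 160 MIPS
print(whmean([100.0, 400.0], [1, 1]))
# Weights = millions of instructions: 5000 M instr / (10 s + 10 s) = 250 MIPS
print(whmean([100.0, 400.0], [1000, 4000]))
```

Unlike an arithmetic mean, the harmonic mean is not dominated by a few very fast benchmarks, which matters when rates span orders of magnitude as they do here.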

A few points to take away from these results:

- ISSs without JITs (gem5, simit-nojit, and pydgin-nojit) demonstrate relatively consistent performance across applications, whereas ISSs with JITs (simit-jit, pydgin-jit, and QEMU) demonstrate much greater performance variability from application-to-application.

- The gem5 atomic model demonstrates particularly miserable performance, only 2-3 MIPS!

- QEMU lives up to its reputation as the gold standard for simulator performance, leading the pack on nearly every benchmark and reaching speeds of 240-1120 MIPS.

- pydgin-jit is able to outperform simit-jit on four of the applications, including considerable performance improvements of 1.44–1.52× for the applications 456.hmmer, 462.libquantum, and 471.omnetpp (managing to even outperform QEMU on 471.omnetpp).

- simit-jit is able to obtain much more consistent performance (230-459 MIPS across all applications) than pydgin-jit (9.6-659 MIPS). This is due to simit-jit's page-based approach to JIT optimization compared to pydgin-jit's tracing-based approach.

- 464.h264ref displays particularly bad pathological behavior in Pydgin’s tracing JIT and is the only application to perform worse on pydgin-jit than pydgin-nojit (9.6 MIPS vs. 21 MIPS).

The pathological behavior demonstrated by 464.h264ref was of particular concern because it caused pydgin-jit to perform even worse than having no JIT at all. RPython JIT logs indicated that the reason for this performance degradation was a large number of tracing aborts due to JIT traces growing too long. However, time limitations before the publication deadline prevented us from investigating this issue thoroughly.

Since the deadline we've applied some minor bug fixes and made some small improvements in the memory representation. More importantly, we've addressed the performance degradation in 464.h264ref by increasing trace lengths for the JIT. Below we show how the performance of 464.h264ref changes as the trace_limit parameter exposed by the RPython JIT is varied from the default size of 6000 operations.

By quadrupling the trace limit we achieve an 11x performance improvement in 464.h264ref. The larger trace limit allows the JIT to optimize long code paths that were previously triggering trace aborts, greatly helping amortize the costs of tracing. Note that arbitrarily increasing this limit can potentially hurt performance if longer traces are not able to detect optimizable code sequences.

After performing similar experiments across the applications in the SPEC CINT2006 benchmark suite, we settled on a trace limit of 400,000 operations. In the figure below we show how the updated Pydgin ISS (pydgin-400K) improves performance across all benchmarks and fixes the performance degradation previously seen in 464.h264ref. Note that the non-JITted simulators have been removed for clarity, and simulation performance is now plotted on a linear scale to more clearly distinguish the performance gap between each ISS.

With these improvements, we are now able to beat simit-jit on all but two benchmarks. In future work we hope to further close the gap with QEMU as well.

Conclusions and Future Work

Pydgin demonstrates that the impressive work put into the RPython translation toolchain, designed to simplify the process of building fast dynamic-language VMs, can also be leveraged to build fast instruction set simulators. Our prototype ARMv5 ISS shows that Pydgin can generate ISSs with performance competitive with SimIt-ARM while also providing a more productive development experience: RPython allowed us to develop Pydgin with only four person-months of work. Another significant benefit of the Pydgin approach is that any performance improvements applied to the RPython translation toolchain immediately benefit Pydgin ISSs after a simple software download and retranslation. This allows Pydgin to track the continual advances in JIT technology introduced by the PyPy development team.

Pydgin is very much a work in progress. There are many features we would like to add, including:

- more concise syntax for accessing arbitrary instruction bits

- support for other C libraries such as glibc, uClibc, and musl (we currently only support binaries compiled with newlib)

- support for self-modifying code

- features for more productive debugging of target applications

- ISS descriptions for other ISAs such as RISC-V, ARMv8, and x86

- automatic generation of compilers and toolchains from Pydgin descriptions

In addition, we think there are opportunities for even greater performance improvements with more advanced techniques such as:

- automatic generation of optimized instruction decoders

- optimizations for floating-point intensive applications

- multiple tracing JITs for parallel simulation of multicore SoCs

- a parallel JIT compilation engine as proposed by Böhm et al. [3]

We hope that Pydgin can be of use to others, so if you try it out please let us know what you think. Feel free to contact us if you find any of the above development projects interesting, or simply fork the project on GitHub and hack away!

-- Derek Lockhart and Berkin Ilbeyi

Acknowledgements

We would like to sincerely thank Carl Friedrich Bolz and Maciej Fijalkowski for their feedback on the Pydgin publication and their guidance on improving the JIT performance of our simulators. We would also like to thank the whole PyPy team for their incredible work on PyPy and the RPython translation toolchain. Finally, thank you to our research advisor, Prof. Christopher Batten, and the sponsors of this work, which include the National Science Foundation, the Defense Advanced Research Projects Agency, and Intel Corporation.

Footnotes

| [a] | Pydgin loosely stands for [Py]thon [D]SL for [G]enerating [In]struction set simulators and is pronounced the same as “pigeon”. The name is inspired by the word “pidgin” which is a grammatically simplified form of language and captures the intent of the Pydgin embedded-ADL. https://github.com/cornell-brg/pydgin |

| [b] | Popular instruction set architectures (ISAs) include MIPS, ARM, x86, and more recently RISC-V |

| [c] | For a good discussion of simulators vs. emulators, please see the following post on StackOverflow: https://stackoverflow.com/questions/1584617/simulator-or-emulator-what-is-the-difference |

| [d] | https://en.wikipedia.org/wiki/Dark_silicon |

| [e] | Please see the Pydgin paper for a more detailed discussion of prior work. |

| [f] | For more examples of Pydgin ISA specifications, please see the ISPASS paper [1] or the Pydgin source code on GitHub. Pydgin instruction definitions for a simple MIPS-inspired ISA can be found here: Pydgin instruction definitions for a simplified ARMv5 ISA can be found here: |

| [g] | gem5 is a cycle-level simulation framework that contains both functional-level (atomic) and cycle-level processor models. Although primarily used for detailed, cycle-approximate processor simulation, gem5's atomic model is a popular tool for many ISS tasks. |

| [h] | All performance measurements were taken on an unloaded server-class machine. |

| [i] | Millions of instructions per second. |

References

| [1] | Derek Lockhart, Berkin Ilbeyi, and Christopher Batten. "Pydgin: Generating Fast Instruction Set Simulators from Simple Architecture Descriptions with Meta-Tracing JIT Compilers." IEEE Int'l Symp. on Performance Analysis of Systems and Software (ISPASS), Mar. 2015. |

| [2] | Derek Lockhart, Gary Zibrat, and Christopher Batten. "PyMTL: A Unified Framework for Vertically Integrated Computer Architecture Research." 47th ACM/IEEE Int'l Symp. on Microarchitecture (MICRO-47), Dec. 2014. |

| [3] | I. Böhm, B. Franke, and N. Topham. "Generalized Just-In-Time Trace Compilation Using a Parallel Task Farm in a Dynamic Binary Translator." ACM SIGPLAN Conf. on Programming Language Design and Implementation (PLDI), Jun. 2011. https://groups.inf.ed.ac.uk/pasta/pub_PLDI_2011.html |

Experiments in Pyrlang with RPython

Pyrlang is an Erlang BEAM bytecode interpreter written in RPython.

It implements approximately 25% of BEAM instructions. It supports integer calculations (but not bigints), closures, exception handling, some operators on atoms, lists, and tuples, user modules, and multiple processes on a single core. Pyrlang is still in development.

There are some differences between BEAM and the VM of PyPy:

- BEAM is a register-based VM, whereas the VM in PyPy is stack-based.

- There is no traditional call-stack in BEAM. The Y register in BEAM is similar to a call-stack, but the Y register can sometimes store some variables.

- There are no typical language-level threads or OS-level threads in BEAM, only language-level processes, whose behavior is very similar to the actor model.

Regarding the bytecode dispatch loop, Pyrlang uses a while loop to fetch instructions and operands, call the function corresponding to each instruction, and jump back to the head of the while loop. Due to the differences between the RPython call stack and BEAM’s Y register, we decided to implement and manage the Y register by hand. PyPy, on the other hand, uses RPython’s call stack to implement Python’s call stack, so the function for the dispatch loop in PyPy calls itself recursively. This does not happen in Pyrlang.

The Erlang compiler (erlc) usually compiles function invocations into the bytecode instructions CALL (for normal invocation) and CALL_ONLY (for tail-recursive invocation). Both can be implemented with trampoline semantics:

- CALL instruction: The VM pushes the current instruction pointer (the program counter in PyPy) onto the Y register and jumps to the destination label. When it encounters a RETURN instruction, the VM pops the instruction pointer from the Y register and returns to that location to continue executing the outer function.

- CALL_ONLY instruction: The VM simply jumps to the destination label, without any modification of the Y register. As a result, the tail recursive invocation never increases the Y register.
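A toy dispatch loop in the spirit of this CALL/CALL_ONLY scheme is sketched below. It is not Pyrlang's code: the tuple instruction encoding, the jump_if_zero and add opcodes, and the run function are hypothetical, and the Y stack here holds only return addresses, whereas BEAM's Y register can also hold variables. The point it demonstrates is that a tail-recursive loop driven by call_only leaves the Y stack depth unchanged however many iterations run.

```python
def run(code, labels, x):
    """Toy BEAM-style dispatch loop with a hand-managed Y stack.

    code   -- list of instruction tuples (hypothetical encoding)
    labels -- label name -> index into code
    x      -- list of X registers, mutated in place
    Returns (x[0], maximum depth reached by the Y stack).
    """
    y = []                          # return addresses only in this sketch
    pc = labels["main"]
    max_depth = 0
    while True:                     # fetch, dispatch, jump back to the loop head
        op = code[pc]
        kind = op[0]
        if kind == "call":          # normal call: save the continuation, then jump
            y.append(pc + 1)
            pc = labels[op[1]]
        elif kind == "call_only":   # tail call: jump without touching Y
            # An RPython VM would place its can_enter_jit hint here.
            pc = labels[op[1]]
        elif kind == "return":      # pop the continuation, or finish at top level
            if not y:
                return x[0], max_depth
            pc = y.pop()
        elif kind == "jump_if_zero":
            pc = labels[op[2]] if x[op[1]] == 0 else pc + 1
        elif kind == "add":
            x[op[1]] += op[2]
            pc += 1
        else:
            raise ValueError("unknown instruction %r" % (kind,))
        max_depth = max(max_depth, len(y))

# main calls loop once; loop counts x1 down to zero via tail calls.
COUNTDOWN = [
    ("call", "loop"),               # 0: main
    ("return",),                    # 1
    ("jump_if_zero", 1, "done"),    # 2: loop
    ("add", 1, -1),                 # 3
    ("add", 0, 1),                  # 4
    ("call_only", "loop"),          # 5: tail-recursive call
    ("return",),                    # 6: done
]
LABELS = {"main": 0, "loop": 2, "done": 6}

print(run(COUNTDOWN, LABELS, [0, 1000]))  # (1000, 1)
```

Because the loop body never grows the Y stack, the JIT sees each call_only as the back edge of an ordinary loop, which is why the hint is placed there.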

The current implementation only inserts the can_enter_jit JIT hint after the CALL_ONLY instruction. This means that the JIT only traces tail-recursive invocations in Erlang code, which have semantics very similar to loops in imperative programming languages like Python.

We have also written a single scheduler to implement language-level processes on a single core. The scheduler holds a runnable queue. On each iteration, the scheduler pops one element (a process object with its own dispatch loop) from the queue and executes the dispatch loop of that process object. Inside the dispatch loop there is a counter called “reduction”, which is decremented as the loop executes; when the reduction reaches 0, the dispatch loop terminates. The scheduler then pushes that element back into the runnable queue, pops the next element from the queue, and so on.
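The single-core scheduler described above can be sketched as a round-robin loop over a deque. The Process class and its work counter are hypothetical stand-ins for a process object with its own dispatch loop; the reduction budget is decremented once per app-level loop iteration, and the process returns to the scheduler when the budget runs out.

```python
from collections import deque

class Process(object):
    """Hypothetical process: `work` counts the app-level loop iterations left."""
    def __init__(self, name, work):
        self.name = name
        self.work = work
        self.done = False

    def dispatch_loop(self, reductions):
        while self.work > 0:
            self.work -= 1                  # one app-level loop iteration
            reductions -= 1                 # decrement once per loop boundary
            if reductions <= 0 and self.work > 0:
                return                      # budget used up: back to the scheduler
        self.done = True

def schedule(processes, reduction=2000):
    """Round-robin over a runnable queue; returns the order in which slices ran."""
    runnable = deque(processes)
    order = []
    while runnable:
        proc = runnable.popleft()
        proc.dispatch_loop(reduction)
        order.append(proc.name)
        if not proc.done:
            runnable.append(proc)           # not finished: requeue at the back
    return order

print(schedule([Process("a", 5), Process("b", 3)], reduction=2))
# ['a', 'b', 'a', 'b', 'a']
```

Checking the budget only after a whole iteration means even a reduction of 0 still makes progress, one iteration per slice.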

We are planning to implement a multi-process scheduler for multi-core CPUs, which will require multiple schedulers and even multiple runnable queues for each core, but that will be another story. :-)

Methods

We wrote two benchmark programs in Erlang:

- FACT: A benchmark that calculates the factorial in a tail-recursive style. Because we haven’t implemented big integers, we take a remainder of the argument for the next iteration, so the number never overflows.

- REVERSE: The benchmark creates a reversed list of numbers, such as [20000, 19999, 19998, …], and applies a bubble sort to it.
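For readers unfamiliar with the workload, a Python rendering of what REVERSE computes might look like this. It is only an illustration: the actual benchmark is written in Erlang (so it builds and sorts lists functionally), and the list is assumed here to run down to 1, since the post only shows its first elements.

```python
def reverse_benchmark(n):
    """Build [n, n-1, ..., 1] and bubble-sort it (worst case: O(n**2) swaps)."""
    data = list(range(n, 0, -1))
    for end in range(len(data) - 1, 0, -1):
        for i in range(end):            # bubble the largest remaining value up to `end`
            if data[i] > data[i + 1]:
                data[i], data[i + 1] = data[i + 1], data[i]
    return data

print(reverse_benchmark(5))  # [1, 2, 3, 4, 5]
```

Starting from a fully reversed list is the worst case for bubble sort, so the benchmark spends nearly all its time in comparisons, swaps, and list traversal rather than arithmetic.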

Results

The Value of Reduction

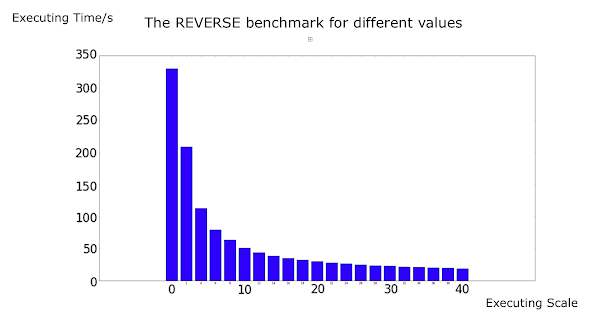

We used REVERSE to evaluate the JIT with different values of reduction:

The X axis is the value of reduction, and the Y axis is the execution time (in seconds).

It seems that when the value of reduction is small, the reduction influences the performance significantly, but when reduction becomes larger, it only increases the speed very slightly. In fact, we use 2000 as the default reduction value (as well as the reduction value in the official Erlang interpreter).

Surprisingly, the trace is always generated even when the reduction is very small, such as 0, which means the dispatch loop can only run for a very limited number of iterations, and the language-level process executes fewer instructions than an entire loop in one switch of the scheduler. The generated trace is almost the same regardless of the reduction value.

The RPython JIT only cares about which code it meets, not about who executes it, so it always generates the results above. The trace can even be shared among different threads if they execute the same code.

The overhead at low reduction values may be due to the scheduler switching between processes too frequently, or to too-frequent switching between the bytecode interpreter and native code, but not to the JIT itself.

Here is more explanation from Armin Rigo:

“The JIT works well because you’re using a scheme where some counter is decremented (and the soft-thread interrupted when it reaches zero) only once in each app-level loop. The soft-thread switch is done by returning to some scheduler, which will resume a different soft-thread by calling it. It means the JIT can still compile each of the loops as usual, with the generated machine code containing the decrease-and-check-for-zero operation which, when true, exits the assembler.”

Fair Process Switching vs. Unfair Process Switching

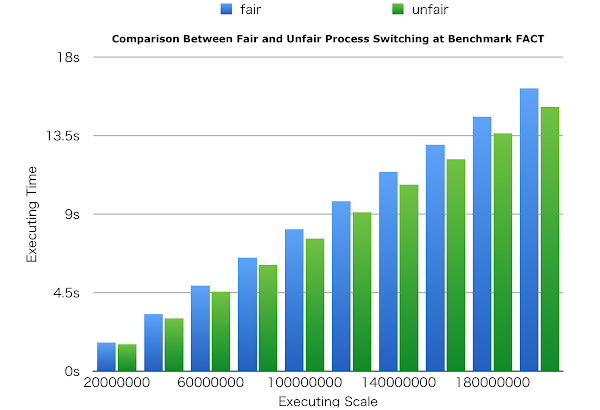

We were also concerned about when to decrement the reduction value. In our initial version of Pyrlang, we decremented the reduction value at every local function invocation, module function invocation, and BIF (built-in function) invocation, since this is what the official Erlang interpreter does. However, since the RPython JIT basically traces target-language loops (which are tail-recursive invocations in Pyrlang), it is typically better to keep the loop whole during a switch of the language-level process. We modified Pyrlang so that the reduction decrement only occurs after CALL_ONLY, which is the actual loop boundary of the target language.

Of course, this strategy may cause “unfair” execution among language-level processes. For example, if a process consists of a single long sequence of code, it executes until the end of that code. On the other hand, if a process has a very short loop, it may execute only a few steps before being switched out by the scheduler. However, in the real world this “unfairness” is usually considered acceptable, and it is used in many VM implementations, including PyPy, to improve overall performance.

We compared these two versions of Pyrlang on the FACT benchmark. The reduction decrement behaves quite differently because there are some BIF invocations inside the loop. In the old version the process can be suspended at loop boundaries or at other function invocations, but in the new version it can be suspended only at loop boundaries.
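The difference between the two decrement policies can be illustrated with a small counting model. Assuming each loop iteration makes calls_per_iter local, module, or BIF calls followed by one CALL_ONLY (a simplification; count_switches is a hypothetical helper, not part of Pyrlang), the function below reports how many process switches land in the middle of an iteration versus at a loop boundary.

```python
def count_switches(iterations, calls_per_iter, reduction, per_call):
    """Return (mid_loop, boundary) switch counts for one process.

    per_call=True  -- decrement at every call (old Pyrlang, official interpreter)
    per_call=False -- decrement only at CALL_ONLY, the loop boundary (new Pyrlang)
    """
    budget = reduction
    mid_loop = boundary = 0
    for _ in range(iterations):
        # calls_per_iter calls inside the loop body, then the CALL_ONLY back edge
        for step in range(calls_per_iter + 1):
            at_boundary = step == calls_per_iter
            if per_call or at_boundary:
                budget -= 1
                if budget == 0:             # process is switched out here
                    budget = reduction
                    if at_boundary:
                        boundary += 1
                    else:
                        mid_loop += 1
    return mid_loop, boundary

print(count_switches(6, 2, 4, per_call=True))   # (3, 1): most switches split an iteration
print(count_switches(6, 2, 4, per_call=False))  # (0, 1): switches only at loop boundaries
```

Under the per-call policy most switches interrupt an iteration, so the scheduler repeatedly breaks the loop that the JIT compiled as a whole; with loop-boundary decrements every switch happens between iterations.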

We show that the strategy is effective, removing around 7% of the overhead. We have also compared it in REVERSE, but since there are no extra invocations inside the trace, it cannot provide any performance improvement. In the real world, we believe there is usually more than one extra invocation inside a single loop, so this strategy is effective for most cases.

Comparison with Default Erlang and HiPE

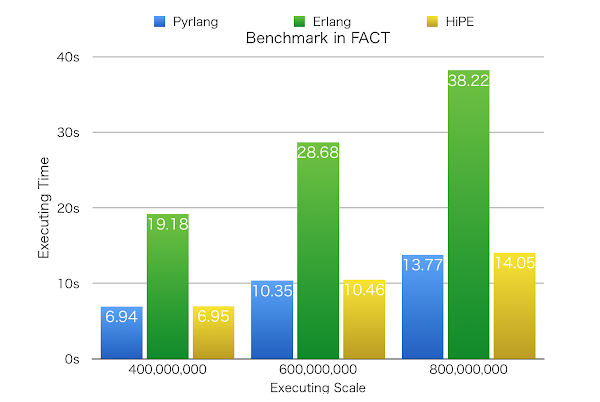

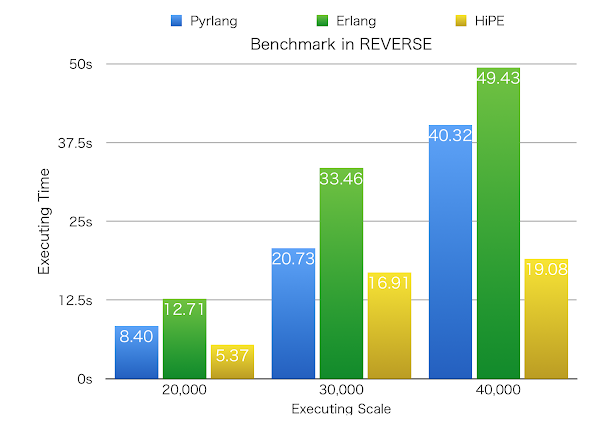

We compared the performance of Pyrlang with the default Erlang interpreter and the HiPE (High Performance Erlang) compiler. HiPE is an official Erlang compiler that can compile Erlang source code to native code. This clearly speeds up Erlang programs, but at the cost of generality.

Please note that Pyrlang is still in development, so in some situations it does less work than the default Erlang interpreter, such as not checking for integer overflow when dealing with big integers, and not checking and adding locks when accessing message queues in language-level processes, and is therefore faster. The final version of Pyrlang may be slower.

We used the two benchmark programs above and made sure both of them ran for more than five seconds to cover the JIT warm-up time of RPython. The experiment environment was an OS X 10.10 machine with a 3.5 GHz 6-core Intel Xeon E5 CPU and 14 GB of 1866 MHz DDR3 ECC memory.

Let’s look at the result of FACT. The graph shows that Pyrlang runs 177.41% faster on average than Erlang, and at almost the same speed as HiPE. However, since we haven’t implemented big integers in Pyrlang, the arithmetic operators do not do any extra overflow checking. It is reasonable to expect that the final version of Pyrlang will be slower than the current version and HiPE.

As for REVERSE, the graph shows that Pyrlang runs 45.09% faster than Erlang, but 63.45% slower than HiPE on average. We think this is reasonable because there are only a few arithmetic operators in this benchmark, so the speeds of the three implementations are closer. However, we observed that at the scale of 40,000, Pyrlang slowed down significantly (111.35% slower than HiPE) compared with the other two scales (56.38% and 22.63% slower than HiPE).

So far we can only hypothesize why Pyrlang slows down at that scale. We suspect the overhead comes from GC. The BEAM bytecode provides some GC hints that help the default Erlang implementation perform some GC operations immediately. For example, using GC_BIF instead of a BIF instruction tells the VM that there may be a GC opportunity, and how many live variables there are around that instruction. Pyrlang does not use these hints and relies entirely on RPython’s GC. When there is a huge number of objects at runtime (for REVERSE, these would be Erlang list objects), execution therefore slows down.

Ruochen Huang

linalg support in pypy/numpy

Introduction

PyPy's numpy support has matured enough that it can now support the lapack/blas libraries through the numpy.linalg module. To install the version of numpy this blog post refers to, install PyPy version 2.5.0 or newer, and run this: pypy -m pip install git+https://bitbucket.org/pypy/numpy.git

This update is a major step forward for PyPy's numpy support. Many of the basic matrix operations depend on linalg, even matplotlib requires it to display legends (a pypy-friendly version of matplotlib 1.3 is available at https://github.com/mattip/matplotlib).

A number of improvements and adaptations, some of which are in the newly-released PyPy 2.5.0, made this possible:

- Support for an extended frompyfunc(), which in the PyPy version supports much of the ufunc API (signatures, multiple dtypes) allowing creation of pure-python, jit-friendly ufuncs. An additional keyword allows choosing between out = func(in) or func(in, out) ufunc signatures. More explanation follows.

- Support for GenericUfuncs via PyPy's (slow) capi-compatibility layer. The underlying mechanism actually calls the internal implementation of frompyfunc().

- A cffi version of _umath_linalg. Since cffi uses dlopen() to call into shared objects, we added support in the numpy build system to create non-python shared libraries from source code in the numpy tree. We also rewrote parts of the c-based _umath_linalg.c.src in python, renamed numpy's umath_linalg capi module to umath_linag_capi, and use it as a shared object through cffi.

Status

We have not completely implemented all the linalg features. dtype resolution via casting is missing, especially for complex ndarrays, which leads to slight numerical errors where numpy uses a more precise type for intermediate calculations. Other missing features in PyPy's numpy support may have implications for complete linalg support. Some OSX users have noticed they need to update pip to version 6.0.8 to overcome a regression in pip, and it is not clear whether we support all combinations of blas/lapack implementations on all platforms.

Over the next few weeks we will be ironing out these issues.

Performance

A simple benchmark is shown below, but let's state the obvious: PyPy's JIT and the iterators built into PyPy's ndarray implementation will in most cases be no faster than CPython's numpy. The JIT can help where there is a mixture of python and numpy-array code. We do have plans to implement lazy evaluation and to further optimize PyPy's support for numeric python, but numpy is quite good at what it does.

HowTo for PyPy's extended frompyfunc

The magic enabling blas support is a rewrite of the _umath_linalg c-based module as a cffi-python module that creates ufuncs via frompyfunc. We extended the numpy frompyfunc to allow it to function as a replacement for the generic ufunc available in numpy only through the c-api. We start with the basic frompyfunc, which wraps a python function into a ufunc:

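```python
from numpy import frompyfunc

def times2(in0):
    # called once per element, with a scalar value
    return in0 * 2

ufunc = frompyfunc(times2, 1, 1)  # 1 input, 1 output
```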

In cpython's numpy the dtype of the result is always object, which is not implemented (yet) in PyPy, so this example will fail. While the utility of object dtypes can be debated, in the meantime we add a non-numpy-compatible keyword argument dtypes to frompyfunc. If dtypes=['match'], the output dtype will match the dtype of the first input ndarray:

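```python
# PyPy's numpy fork only: `dtypes` is a non-numpy-compatible extension
from numpy import arange, frompyfunc

ufunc = frompyfunc(times2, 1, 1, dtypes=['match'])
ai = arange(24).reshape(3, 4, 2)
ao = ufunc(ai)
assert (ao == ai * 2).all()
```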
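For comparison, here is a sketch of the same computation under CPython's numpy, where frompyfunc always returns object arrays and the usual workaround is an explicit cast afterwards. It uses only standard numpy calls (np.frompyfunc, ndarray.astype) and does not rely on PyPy's dtypes extension.

```python
import numpy as np

def times2(in0):
    return in0 * 2

ufunc = np.frompyfunc(times2, 1, 1)   # standard numpy: 1 input, 1 output
ai = np.arange(24).reshape(3, 4, 2)
ao = ufunc(ai)
print(ao.dtype)                       # object
ao_int = ao.astype(ai.dtype)          # explicit cast back to the input dtype
assert (ao_int == ai * 2).all()
```

The extra astype pass is one full copy of the output, which the dtypes=['match'] extension avoids by producing the right dtype directly.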

I hear you ask "why is the dtypes keyword argument a list?" This is so we can support the Generalized Universal Function API, which allows specifying a number of specialized functions and the input-output dtypes each specialized function accepts.

Note that the ufunc feeds the values of ai to the function one at a time; the function operates on scalar values. To support more complicated ufunc calls, the generalized ufunc API allows defining a signature, which specifies the layout of the ndarray inputs and outputs. So we extended frompyfunc with a signature keyword as well.

We add one further extension to frompyfunc: we allow a Boolean keyword stack_inputs to specify the argument layout of the function itself. If the function is of the form:

out0, out1, ... = func(in0, in1,...)

then stack_inputs is False. If it is True the function is of the form:

func(in0, in1, ..., out0, out1, ...)
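To make the two calling conventions concrete, here is a hypothetical adapter, make_stacked, that wraps a function written in the stack_inputs=False style so it can be called in the stack_inputs=True style. It is not part of PyPy's numpy; it only illustrates how outputs move from return values into caller-provided arrays.

```python
import numpy as np

def make_stacked(func, nin):
    """Adapt `out0, out1, ... = func(in0, in1, ...)` to `func(in0, ..., out0, ...)`."""
    def stacked(*args):
        ins, outs = args[:nin], args[nin:]
        results = func(*ins)
        if len(outs) == 1:
            results = (results,)        # single output: wrap for uniform handling
        for out, res in zip(outs, results):
            out[...] = res              # write into the caller's array in place
    return stacked

def add_sub(a, b):
    return a + b, a - b                 # stack_inputs=False style: returns outputs

stacked = make_stacked(add_sub, 2)      # stack_inputs=True style: fills outputs
x, y = np.array([1, 2]), np.array([3, 4])
s, d = np.empty(2, dtype=int), np.empty(2, dtype=int)
stacked(x, y, s, d)
print(s.tolist(), d.tolist())           # [4, 6] [-2, -2]
```

The stacked form lets the caller (here, the ufunc machinery) own output allocation, which is what allows outputs to be preallocated with a known dtype and shape.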

Here is a complete example of using frompyfunc to create a ufunc, based on this link:

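```python
# PyPy's numpy fork only: signature, dtypes and stack_inputs are extensions
from numpy import arange, dtype, frompyfunc

def times2(in_array, out_array):
    # stack_inputs=True: the output array is passed in after the input
    in_flat = in_array.flat
    out_flat = out_array.flat
    for i in range(in_array.size):
        out_flat[i] = in_flat[i] * 2

ufunc = frompyfunc([times2, times2], 1, 1,
                   signature='(i)->(i)',
                   dtypes=[dtype(int), dtype(int),      # first specialization: int -> int
                           dtype(float), dtype(float),  # second specialization: float -> float
                           ],
                   stack_inputs=True,
                   )
ai = arange(10, dtype=int)
ai2 = ufunc(ai)
assert all(ai2 == ai * 2)
```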

Using this extended syntax, we rewrote the calls into the lapack functions in pure python, with no C needed. Benchmarking showed that this approach was actually much slower than using the upstream umath_linalg module via cpyext, as can be seen in the following benchmarks. This is due to the need to copy C-ordered (row-major) data into Fortran-ordered (column-major) format: our __getitem__ and __setitem__ iterators are not as fast as pointer arithmetic in C. So we next tried a hybrid approach: compile numpy's umath_linalg python module as a shared object, and call the optimized specific wrapper function from it.
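The C-versus-Fortran layout issue can be seen with standard numpy calls: np.asfortranarray copies a row-major array into column-major order, which is the layout LAPACK routines work with. The matrix is logically unchanged; only the order of elements in memory differs. This sketch runs on any numpy; it does not reproduce PyPy's slow element-by-element copy, only shows what has to be copied.

```python
import numpy as np

a = np.arange(6, dtype=np.float64).reshape(2, 3)  # C (row-major) order, numpy's default
f = np.asfortranarray(a)                          # column-major copy, as LAPACK expects

def memory_order(arr):
    """Return the elements in the order they are laid out in memory."""
    return arr.ravel(order="K").tolist()

assert (a == f).all()                             # same logical matrix
print(a.flags["C_CONTIGUOUS"], f.flags["F_CONTIGUOUS"])  # True True
print(memory_order(a))                            # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
print(memory_order(f))                            # [0.0, 3.0, 1.0, 4.0, 2.0, 5.0]
```

In C this transposing copy is a tight pointer loop; done through per-element __getitem__/__setitem__ calls it becomes the dominant cost for small matrices.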

Benchmarks

Here are some benchmarks, running a tight loop of the different versions of linalg.inv(a), where a is a 10x10 double ndarray. The benchmark ran on an i7 processor running Ubuntu 14.04 64-bit:

| Implementation | Time after warmup |
|---|---|
| CPython 2.7 + numpy 1.10.dev + lapack | 8.9 msec/1000 loops |
| PyPy 2.5.0 + numpy + lapack via cpyext | 8.6 msec/1000 loops |
| PyPy 2.5.0 + numpy + lapack via pure python + cffi | 19.9 msec/1000 loops |
| PyPy 2.5.0 + numpy + lapack via python + c + cffi | 9.5 msec/1000 loops |

While no general conclusions may be drawn from a single micro-benchmark, it does indicate that there is some merit in the approach taken.
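A harness along these lines can be used to take this kind of measurement on your own machine. It is a sketch using the standard timeit module, not the script used for the table above: the matrix construction and repeat counts are assumptions, and results will vary by interpreter, numpy build, and BLAS/LAPACK library.

```python
import timeit
import numpy as np

# Hypothetical test matrix: identity plus small positive noise is strictly
# diagonally dominant, hence always invertible.
a = np.eye(10) + 0.1 * np.random.RandomState(0).rand(10, 10)

timer = timeit.Timer(lambda: np.linalg.inv(a))
timer.timeit(number=1000)                        # warm-up (gives a JIT time to compile)
best = min(timer.repeat(repeat=5, number=1000))  # best of 5 runs of 1000 inversions
print("%.1f msec/1000 loops" % (best * 1e3))
```

Taking the minimum of several repeats after a warm-up run reduces noise from the OS and, on PyPy, excludes JIT compilation time from the reported figure.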

Conclusion

PyPy's numpy now includes a working linalg module. There are still some rough corners, but hopefully we have implemented the parts you need. While the speed of the isolated linalg function is no faster than CPython and upstream numpy, it should not be significantly slower either. Your use case may see an improvement if you use a mix of python and lapack, which is the usual case. Please let us know how it goes. We love to hear success stories too.

We still have challenges at all levels of programming, and are always looking for people willing to contribute, so stop by on IRC at #pypy.

mattip and the PyPy Team

Interesting work, although benchmarking linear algebra routines on 10x10 arrays feels wrong: typical linear algebra applications use hundreds or thousands of dimensions. Would you mind re-running those benchmarks on 1000x1000 arrays instead? CPU cache usage and multiple threads can have a large impact on such workloads.

Also, some numpy / scipy developers are working on making OpenBLAS the default BLAS/LAPACK for the Windows wheel packages, and maybe later for the OSX packages as well.

Under Linux (Debian / Ubuntu) it's pretty easy to make libblas.so / liblapack.so symlinks to either ATLAS or OpenBLAS using the update-alternatives system.

What the blog post somehow fails to mention is that we do not reimplement those but reuse whatever underlying library is there. Measuring the actual speed is then not that interesting, because we're only interested in the call overhead.

It might still be interesting to run that kind of benchmarks on more realistic workloads (maybe in addition to some micro-workloads) to see the importance of the remaining overhead in a typical usage scenario.

The most interesting benchmark is probably the one only you can run, i.e. how does pypy perform for you on your workload.

As far as lapack vs openblas, we will try to imitate what numpy does. If cpython/numpy supports a variation of lapack and pypy/numpy doesn't, that should be considered a bug.

Please let us know how it works for you.

> The most interesting benchmark is probably the one only you can run, i.e. how does pypy perform for you on your workload.

I agree, but inverting a 10x10 matrix is probably not representative of anybody's workload.

While it's important not to introduce too much overhead in the bindings, I think it's also good to keep in mind that an overhead of the order of the micro-second is completely negligible compared to the execution time of a typical linear algebra operation running on realistically sized data. Hence my original remark.

> As far as lapack vs openblas, we will try to imitate what numpy does. If cpython/numpy supports a variation of lapack and pypy/numpy doesn't, that should be considered a bug.

Just to clarify OpenBLAS is an implementation of the standard BLAS API that also includes the official LAPACK implementation from netlib linked against its own optimized BLAS routines. The 2 main open source optimized implementations of BLAS/LAPACK supported by numpy & scipy are ATLAS and OpenBLAS.

> While it's important not to introduce too much overhead in the bindings, I think it's also good to keep in mind that an overhead of the order of the micro-second is completely negligible compared to the execution time of a typical linear algebra operation running on realistically sized data. Hence my original remark.

But then you're just benchmarking the underlying library, which is the exact same library as numpy.

> But then you're just benchmarking the underlying library, which is the exact same library as numpy.

Yes, I agree. I just want to highlight that for most common real-life use cases, a small performance overhead in those LAPACK bindings is almost never a problem.

Otherwise your readers might be misled into thinking that the "PyPy 2.5.0 + numpy + lapack via pure python + cffi" version is significantly suboptimal (2x slowdown!) while in practice a couple of additional microseconds might be completely undetectable compared to the actual execution time of the "inv" function, which typically lasts more than a millisecond on anything that is non-toy data.

Additional data point: repeatedly inverting a ~10x10 matrix is exactly what I need performance on - for running an extended Kalman Filter. : )

> Additional data point: repeatedly inverting a ~10x10 matrix is exactly what I need performance on - for running an extended Kalman Filter. : )

Fair enough: so there actually is a use case for that benchmark. Optimizing the bindings overhead might be worthwhile after all.

I love hearing about the progress and wish I could test on my benchmarks. Any chance of windows support?

Yaacov, what is missing for you to try it?

Here is the way I verify that the code works on Windows 7 64-bit and Windows 8.1:

download and install compiler

https://www.microsoft.com/en-us/download/details.aspx?id=44266

download pypy and open the zip

https://bitbucket.org/pypy/pypy/downloads/pypy-2.5.0-win32.zip

install pip into pypy

https://bootstrap.pypa.io/get-pip.py

install numpy into pypy

pip install git+https://bitbucket.org/pypy/numpy.git

I get a traceback ending in

```
\appdata\local\temp\pip-wdyqtr-build\numpy\distutils\misc_util.py", line 872, in _get_configuration_from_setup_py
    config = setup_module.configuration(*args)
  File "numpy\linalg\setup.py", line 85, in configuration
    library_dirs = [sys.real_prefix + '/include',
AttributeError: 'module' object has no attribute 'real_prefix'
```

and a warning "using unoptimized lapack"

I still get a non-existent conjugate method error when using, e.g., linalg.pinv. Any plans to get this working?

I got an error message:

OSError: Cannot load library /usr/local/Cellar/pypy/2.5.0/libexec/site-packages/numpy/linalg/libumath_linalg_cffi.so: dlopen(/usr/local/Cellar/pypy/2.5.0/libexec/site-packages/numpy/linalg/libumath_linalg_cffi.so, 2): image not found

Is there anything I am not doing right for the installation? I have pypy 2.5, and Mac OS 10.10.

Are you installing via pip? If so, we have had reports of older versions of pip failing. You should have pip 6.0.8 or later. See https://bitbucket.org/pypy/numpy/issue/21

Same problem here. (OSX 10.10) I've got the newest pip (6.0.8) and setuptools (14.0.3) version installed.

I can't reproduce this as I do not have a Mac OS machine. The place to follow this up is on our issue tracker, https://bitbucket.org/pypy/numpy/issue/21

It would be most helpful to attach the full output of "pip install" and of "pypy -c 'import numpy'" to that issue.

One way PyPy might be able to outperform numpy is by eliminating temporaries.

Just chaining the BLAS calls efficiently, and sometimes updating in place rather than allocating and deallocating arrays, should help a lot.
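To make "eliminating temporaries" concrete: evaluating `a * x + y` as two separate array operations allocates an intermediate array for `a * x`, whereas a fused loop (the BLAS axpy pattern, y := a*x + y) updates `y` in place in a single pass. The plain Python lists below only stand in for arrays, and both function names are hypothetical; this is a sketch of the idea, not how numpy or PyPy's JIT implement it.

```python
def axpy_with_temporaries(a, x, y):
    # Mirrors `a * x + y` evaluated as two whole-array operations.
    tmp = [a * xi for xi in x]                # temporary array for a * x
    return [t + yi for t, yi in zip(tmp, y)]  # freshly allocated result array


def axpy_fused_inplace(a, x, y):
    # One pass over the data, no temporary: the result overwrites y.
    for i in range(len(y)):
        y[i] += a * x[i]
    return y
```

Both produce the same values; the fused version simply avoids allocating and later freeing two intermediate arrays.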

This is great! But I can't use this for almost any of my code until np.einsum is supported :/ IMO, it is a super useful function for almost anything. Any plans for supporting it?

NumPyPy status - January 2015

Hi Everyone

Here is what has been done in January thanks to the funding of NumPyPy. I would like to thank all the donors and tell you that you can still donate.

- I have focused on implementing the object dtype this month; it is now possible to store objects inside ndarrays using the object dtype

- It is also possible to add an object ndarray to any other ndarray (implementing other operators is trivial)

What I plan to work on next:

- Implementing the missing operations for object arrays

- Implementing garbage collection support for object arrays (currently, storing an object inside an ndarray doesn't keep the object alive)

- Packaging NumPyPy on PyPI

Thanks for the post! This sounds pretty cool.

The previous post suggested that there would be an update regarding linalg. Does this mean linalg is working? Is having a working linalg what stands in the way of a working matplotlib? Thanks for answering what might be a naive question!

Linalg is basically usable with the usual caveats: use PyPy 2.5.0 or later, and use pypy/numpy from the bitbucket repo. You can even use matplotlib from my fork at https://github.com/mattip/matplotlib, but there is no GUI backend available yet, so you can only save plots to files. Watch this space for the promised blog post, hopefully next week.

Great to hear there is some progress on numpy!

About matplotlib, @mattip: maybe a GSoC project for the GUI?

Regarding matplotlib, I whipped up a quick hack that can do at least very simple matplotlib stuff. It is based on running a "slave" CPython using RPyC, as was already done in some demos around 2011, as I recall.

Simple stuff can run unmodified, although it can of course be slow if there is a lot of, or frequent, data passing from PyPy to CPython.

It could probably be done quite easily in the other direction too, i.e. running PyPy from CPython.

https://github.com/jampekka/cpyproxy
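Why heavy data passing is slow can be illustrated without RPyC. The toy sketch below uses only the standard library and does not reproduce cpyproxy's RPyC-based design: it ships a list to a second interpreter process as JSON and reads back a result, so every call pays for serializing its arguments. `call_in_other_interpreter` and `CHILD_CODE` are hypothetical names; under PyPy you would pass the path of a CPython executable as `interpreter`, while by default it reuses the current one.

```python
import json
import subprocess
import sys

# Program run by the child interpreter: read a JSON list, reply with its sum.
CHILD_CODE = r"""
import json, sys
data = json.load(sys.stdin)
json.dump({"sum": sum(data)}, sys.stdout)
"""


def call_in_other_interpreter(data, interpreter=sys.executable):
    proc = subprocess.Popen([interpreter, "-c", CHILD_CODE],
                            stdin=subprocess.PIPE, stdout=subprocess.PIPE)
    # Every argument is serialized, copied through a pipe and parsed again.
    out, _ = proc.communicate(json.dumps(data).encode("utf-8"))
    if proc.returncode != 0:
        raise RuntimeError("child interpreter failed")
    return json.loads(out.decode("utf-8"))["sum"]
```

A real proxy keeps one long-lived child process instead of starting a new one per call, but the per-call serialization cost remains.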

PyPy 2.5.0 released

PyPy 2.5.0 - Pincushion Protea

We’re pleased to announce PyPy 2.5, which contains significant performance enhancements and bug fixes. You can download the PyPy 2.5.0 release here:

We would like to thank our donors for the continued support of the PyPy project, and for those who donate to our three sub-projects, as well as our volunteers and contributors (10 new committers joined PyPy since the last release). We’ve shown quite a bit of progress, but we’re slowly running out of funds. Please consider donating more, or, even better, convince your employer to donate, so we can finish those projects! The three sub-projects are:

- Py3k (supporting Python 3.x): We have released a Python 3.2.5 compatible version we call PyPy3 2.4.0, and are working toward a Python 3.3 compatible version

- STM (software transactional memory): We have released a first working version, and continue to try out new promising paths of achieving a fast multithreaded Python

- NumPy, which requires installation of our fork of upstream numpy, available on bitbucket

What is PyPy?

PyPy is a very compliant Python interpreter, almost a drop-in replacement for CPython 2.7. It’s fast (pypy and cpython 2.7.x performance comparison) due to its integrated tracing JIT compiler. This release supports x86 machines on most common operating systems (Linux 32/64, Mac OS X 64, Windows, and OpenBSD), as well as newer ARM hardware (ARMv6 or ARMv7, with VFPv3) running Linux.

While we support 32-bit Python on Windows, work on a native 64-bit Windows build is still stalled; we would welcome a volunteer to handle it.

Highlights

- The past months have seen PyPy mature and grow, as RPython becomes the go-to solution for writing fast dynamic language interpreters. The separation between RPython and the Python interpreter PyPy is now much clearer in the PyPy documentation, and we now have separate RPython documentation.

- We have improved warmup time as well as JIT-compiled code performance: more than 10% compared to PyPy 2.4.0. We no longer zero out memory allocated in the GC nursery by default, work that was started during a GSoC.

- Passing objects between C and PyPy has been improved. We are now able to pass raw pointers to C (without copying) using pinning. This improves I/O; benchmarks that use networking intensively improved by about 50%. file() operations still need some refactoring but are already showing a 20% improvement on our benchmarks. Let us know if you see similar improvements.

- Our integrated numpy support gained much of the GenericUfunc API in order to support the lapack/blas linalg module of numpy. This dovetails with work in the pypy/numpy repository to support linalg both through the (slower) cpyext C-API interface and through the (faster) pure Python cffi interface, using an extended frompyfunc() API. We will soon publish a separate blog post specifically about linalg and PyPy.

- Dictionaries are now ordered by default, see the blog post.

- Our nightly translations use --shared by default, including on OS X and Linux.

- We now more carefully handle errno (and GetLastError, WSAGetLastError), tying the handlers as close as possible to the external function call, in non-jitted as well as jitted code.

- Issues reported with our previous release were resolved after reports from users on our issue tracker at https://foss.heptapod.net/pypy/pypy/-/issues or on IRC at #pypy.
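The ordered-dictionary item refers to a layout where a sparse hash table stores only small indices into a dense, append-only array of entries; iterating over the dense array then yields keys in insertion order at no extra cost. The following is a simplified pure-Python sketch of that idea (insert, lookup and iteration only, no deletion). `CompactDict` is a hypothetical name; PyPy's real implementation is written in RPython and handles far more cases.

```python
class CompactDict(object):
    """Toy insertion-ordered hash map: sparse index table + dense entry list."""

    FREE = -1

    def __init__(self, size=8):                 # size must be a power of two
        self.indices = [self.FREE] * size       # sparse: slot -> position in entries
        self.entries = []                       # dense: (hash, key, value), insertion order

    def _probe(self, key, h):
        # Linear probing over the sparse table; returns (slot, entry position or None).
        mask = len(self.indices) - 1
        i = h & mask
        while True:
            idx = self.indices[i]
            if idx == self.FREE:
                return i, None
            eh, ek, _ = self.entries[idx]
            if eh == h and ek == key:
                return i, idx
            i = (i + 1) & mask

    def __setitem__(self, key, value):
        h = hash(key)
        slot, idx = self._probe(key, h)
        if idx is not None:
            self.entries[idx] = (h, key, value)  # overwrite keeps the original position
            return
        self.indices[slot] = len(self.entries)
        self.entries.append((h, key, value))
        if 3 * len(self.entries) >= 2 * len(self.indices):
            self._resize(4 * len(self.indices))  # keep the sparse table under 2/3 full

    def _resize(self, size):
        # Only the small index table is rebuilt; the dense entries never move.
        self.indices = [self.FREE] * size
        mask = size - 1
        for pos, (h, _, _) in enumerate(self.entries):
            i = h & mask
            while self.indices[i] != self.FREE:
                i = (i + 1) & mask
            self.indices[i] = pos

    def __getitem__(self, key):
        _, idx = self._probe(key, hash(key))
        if idx is None:
            raise KeyError(key)
        return self.entries[idx][2]

    def __iter__(self):
        # Walking the dense list yields keys in insertion order.
        for _, key, _ in self.entries:
            yield key

    def __len__(self):
        return len(self.entries)
```

Besides ordering, the dense layout is more compact than a classic open-addressing table because empty slots hold only a small integer rather than a full (hash, key, value) triple.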

Please try it out and let us know what you think. We especially welcome success stories; we know you are using PyPy, so please tell us about it!

Cheers

The PyPy Team

PyPy 2.6.0 - Cameo Charm, since PyPy looks best in profile (well, vmprof anyway)

What is the state of matplotlib on NumPyPy?

There is no GUI backend, but this fork (version 1.4) should work for non-interactive plotting:

https://github.com/mattip/matplotlib

You will need to install our fork of numpy as a prerequisite:

https://bitbucket.org/pypy/numpy

Help with the cffi port of wxPython could get us a GUI backend (or an updated matplotlib):

https://doc.pypy.org/en/latest/project-ideas.html#make-more-python-modules-pypy-friendly

Thanks for the information