VM summit: nice to see friendly competition

So Google has launched the unladen swallow project with this first goal:

Produce a version of Python at least 5x faster than CPython.

We discussed some details with Collin Winter, Jeffrey Yasskin and Thomas Wouters during the VM summit yesterday. We were a bit confused about the usage of the term JIT, because as far as we understood, it's going to be upfront compilation into LLVM. In the past we have looked into LLVM (at one point PyPy used it extensively), but it wasn't clear how we could make good use of it. They are also considering switching to something other than LLVM. It's going to be interesting to see how this works out.

It's good to see friendly competition, and we think that we should take up the challenge and see if we can produce faster pickling, and run 2to3 and Django faster than what they can come up with. We also talked to IronPython and Jython developers and all agreed that some common benchmarks would be good. And maybe do weekly press releases about small speed increases? :)

The idea of the VM summit here in Chicago was to bring together implementors of various virtual machine languages. There were members of the communities of IronPython, CPython, GemStone's MagLev, Rubinius, Mozilla's TraceMonkey, Parrot, Sun's Da Vinci Machine, Microsoft's DLR, Jython and JRuby. Everybody got to talk 5-10 minutes on their current status and challenges. It is clear that in that time you cannot begin to cover the complexities and architectures of the involved projects. But that wasn't too much of a problem because for the rest of the day everybody freely and dynamically grouped around their issues of choice. We established some more personal contacts; it was great to chat with people like Andreas Gal from the University of California, Irvine, who has a very similar idea about the JIT to the one we have. Actually, we could probably have mixed our two presentations and nobody would have noticed :-).

At the end of the presentation part, John Rose presented his slides. John is a Hotspot developer, and while not precisely a dynamic language implementor, he has a lot of experience in virtual machine implementation. It's very good to see the JVM being extended towards supporting dynamic-language specific things, in order to be something more than just a good platform for Java. We'll probably have some extra meetup with him in the next few days.

cheers,holger and fijal

PyPy talk at OpenBossa 09

Yesterday i gave my PyPy status/mobile perspectives talk at OpenBossa, Nokia's developer conference for embedded platforms in Brazil. Found it a bit of a tough task to do that in 50 minutes. I had some 50, later more, developers attending the talk and was happy with the questions and the feedback. Guess it's a good sign if the number of people grows during a talk :) It was the first time i tried to work more with pictures and actually used some deviantART photos from Marikaz to mark section transitions. I summarize/highlight some key points here in the post.

After the intro and 2.5 compatibility status, i talked about our measurements of PyPy's Python on Nokia's N810 internet tablet. The best bit is that for almost all Python data structures PyPy has smaller memory representations than CPython. Particularly good are class instances, which often come in at 50% of CPython's sizes. Startup time is also often better and can be improved. On the bad side, PyPy's base interpreter is quite large and its bytecode execution is often slower. In the talk i also outlined ideas for "perfect PYC files" for minimizing module import times and maximizing sharing across interpreter processes. I also briefly discussed the PyPy situation with extension modules and regarding C++ libs. Most of these ideas arose from sprint discussions last year. In the morning i also had a good talk with Stefan Seefeld about Boost Python and the new QT4 bindings. Maybe using Boost Python is also a good opportunity - but PyPy does not currently have a C-level or C++ level API.

In subsequent lunch discussions people agreed that PyPy has three main interesting areas currently:

- the Python Just-In-Time Compiler

- a virtualized, sandboxed Python interpreter

- an efficient Python interpreter for small devices

I think our upcoming 1.1 release will be a good point in time for many people to look some more into PyPy. I hope we are crossing the chasm soon. It's been a while since the project started :) Getting some more sponsoring to sustain and increase our current efforts probably wouldn't hurt.

Now i am off to spend my last day in Recife / Brazil, fly back to Germany in the evening and then spend time on preparing for Pycon 2009. And I guess i am going to enjoy some naturally cold air - at least my two jogging sessions at Brazilian beaches, at a sustained 30 degrees celsius, were tough. I guess i shouldn't complain, though :)

Was great meeting all the Brazilian guys and the few women - just had breakfast with Kate Alhola, kernel hacker and working on the new "Fremantle" graphical platform. Many thanks go to Marcio Marcedo and the Python team at INDT who invited me here. Hope to come again next year and eventually talk more about the Zone VM :)

If you are interested in some more not so pypy-specific bits about the conference and what i experienced, you might head over to my tetamap blog.

holger

Hi Holger,

About start up times: We have researched them a lot when developing a few applications on Nokia's PyS60.

Our conclusion, for now, is that it's imports and module body bytecode execution (loading modules, classes, creating functions) which take most of the time during start up. Unfortunately there is no real way to speed up this process, except trying to load all your code lazily.

We have experimented with an unexec()-like solution. Unexec() was an old Emacs trick where a.out binary code and data segments are dumped to disk. When the application is loaded the next time, this dump is just blitted to memory and execution continues. Kind of application level hibernation. You wouldn't actually need to distribute .pyc files at all for embedded devices, you could just give out a target specific binary dump containing a ready memory layout.

Of course, it is not so straightforward on modern systems with DLLs and other funny pointers. We got some tests working with CPython and the PyS60 emulator - but there would be tons of work to make it actually usable (patching all system libs and DLL loads to be suspend friendly).

Some discussion here:

https://mail.python.org/pipermail/python-dev/2008-December/084466.html

Please reply at mikko (at) redinnovation (dot) com if you are interested in hearing more.

On too small edit boxes: I use: It's All Text! for Firefox, together with Vim.

- Paddy.

Hi Mikko!

thanks a lot for your comment and the pointer to the python-dev thread!

Like Martin von Loewis i'd be very interested to know more numbers regarding how the time for python imports is usually spent - i'd suspect the major bit comes from unmarshalling and the involved malloc/copying of data work. If that is true then what i presented in the talk as "perfect pyc files" is probably a good idea. It's basically what Martin suggested.

I find the unexec ideas interesting, especially on platforms where fork does not exist. PyPy could probably have a very compact interpreter state representation if we perform garbage collection before writing to disk. When using moving GCs those objects would map very compactly into the oldest-generation memory and thus be mostly avoided by subsequent GC collects.

Of course, there also is time consumed for linking DLLs - only forking is efficient in avoiding this overhead. But it doesn't exist on Symbian, right?

If you have any more info on the exact numbers on import times, i'd be very curious. We might also have some numbers from PyPy - need to check.

I am also available on holger.krekel at gmail com. You are also very welcome to post to pypy-dev (https://codespeak.net/mailman/listinfo/pypy-dev)

cheers,

holger

anonymous: pypy does not have qt4 bindings yet.

paddy318: thanks! i'll check this out once i get firefox upgrade on the ubuntu machine i am currently using. (why are we in 2009 still having this concept of "installing" apps/plugins, let alone finding a matching one?)

I was referring to this:

"In the morning i also had some good talk with Stefan Seefeld about Boost Python and the new QT4 bindings."

From that it sounds like there are new Qt4 bindings for CPython somewhere, using Boost. I have tried searching, but was not able to find anything.

Anonymous, i also only found the 2005 announcement. I mailed Stefan to find out some more. Maybe it only exists in some developer's repository so far. I'll let you know if i find out something more current.

Anonymous: ok, seems like recent bindings use SIP, see https://www.riverbankcomputing.co.uk/software/pyqt/download

not sure about the status of boost cpython based qt bindings.

holger

Good news everyone!

A quick update from the JIT front. As of yesterday, we're now able to translate a highly-experimental Python interpreter that contains JIT. It mostly crashes immediately, mostly due to some unsupported operations in the assembler backend, but for a carefully crafted program, we're able to get massive speedups. For something as complex as:

    i = 0
    while i < 10000000:
        i = i + 1

our JIT is about 20x faster than CPython. That's still about 3x slower than Psyco, but looking at the assembler code it's obvious that we can speed it up a lot. This is very good news, since we don't encode python semantics at all in the JIT. The JIT is automatically generated from the Python interpreter source code. This means we should be able to expand it to handle more complex python programs relatively quickly (interested assembler experts needed!).
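For anyone who wants to repeat the comparison on their own interpreter, a minimal timing sketch could look like the one below. The function names `count_up` and `time_loop` are made up for this illustration, and no numbers are quoted because they depend entirely on the machine and the interpreter used.

```python
import time


def count_up(limit):
    """The loop from the post, wrapped in a function so it can be timed."""
    i = 0
    while i < limit:
        i = i + 1
    return i


def time_loop(limit=10000000):
    """Return (final counter value, elapsed seconds) for one run of the loop."""
    start = time.perf_counter()
    result = count_up(limit)
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    value, seconds = time_loop()
    print("counted to %d in %.3f seconds" % (value, seconds))
```

Running the same file under two interpreters and comparing the reported seconds gives a rough ratio; a single run like this is noisy, so repeating it a few times is advisable.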

This is actually the fifth incarnation of JIT that happened over the last two years. It's by far simpler and more promising than any of the previous approaches. Expect more details soon!

Cheers,fijal

This is exciting. The world is waiting.

(I am still sad that Psyco development was discontinued and never ported to 64bit)

Congratulations! Very nice to read about these milestones.

I did not follow llvm development but does anyone know if they made some arrangements by now that would enable the PyPy JIT generator to leverage their optimizers?

I'm wondering why something like this would be faster than CPython? New to the whole python scene so I'm really just curious.

nice one :)

In the mean time... I wrote an optimized version of that program for CPython:

i = 10000000

CPython is 10000000x faster than the pypy jit!!!!!!

Congratulations!

This is very exciting.

@Anonymous - it's because the standard python interpreter doesn't use a JIT, which makes dynamic languages quite slow.

JIT - a bit of look inside

The previous post about our JIT explained a bit from the 1000 km perspective how the tracing JIT would approach a language like Python.

I would like to step a bit inside and give a zoom to some of its features that are already working. While probably not the most innovative, I think it's very nice to look at the way we work with the JIT and what tools we use.

The main cool thing is that you can work on and try the JIT (including trying it on the Python interpreter!) without even generating a single bit of assembler. How? Let's start with something very simple. Let's take a simple interpreter for language X.

Language X has 3 opcodes: CO_INCREASE, CO_DECREASE and CO_JUMP_BACK_3. CO_INCREASE increases the accumulator by one, CO_DECREASE decreases it by one, and CO_JUMP_BACK_3 jumps 3 opcodes back if the accumulator is smaller than 100 (this is only there to make sure the program can halt). The interpreter for language X looks like this::

    jitdriver = JitDriver(greens = ['i'], reds = ['res', 'a'])
    code = [CO_INCREASE, CO_INCREASE, CO_INCREASE,
            CO_JUMP_BACK_3, CO_INCREASE, CO_DECREASE]

    def add(res, a):
        return res + a

    def sub(res, a):
        return res - a

    def main_interpreter_loop(a):
        i = 0
        res = 0
        c = len(code)
        while i < c:
            jitdriver.jit_merge_point(res=res, i=i, a=a)
            elem = code[i]
            if elem == CO_INCREASE:
                res = add(res, a)
            elif elem == CO_DECREASE:
                res = sub(res, a)
            else:
                if res > 100:
                    pass
                else:
                    i = i - 3
                    jitdriver.can_enter_jit(res=res, i=i, a=a)
                    continue
            i = i + 1
        return res

All very simple code, except for the jitdriver hints, which instruct the JIT how to behave (they are the equivalent of the ``add_to_position_key`` of the last blog post).
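To play with this interpreter on a plain Python, without any of the JIT machinery, the hints can be stubbed out. The sketch below rests on assumptions: the `JitDriver` class is a do-nothing stand-in written for this example (it is not PyPy's real class), and the opcode numbers are picked arbitrarily.

```python
class JitDriver(object):
    """Hypothetical no-op stand-in for the real JitDriver, for illustration only."""

    def __init__(self, greens, reds):
        self.greens = greens
        self.reds = reds

    def jit_merge_point(self, **livevars):
        pass  # a real JIT would hook into the interpreter loop here

    def can_enter_jit(self, **livevars):
        pass  # a real JIT would check here whether a loop was closed


# Arbitrary opcode numbering, assumed for this sketch.
CO_INCREASE, CO_DECREASE, CO_JUMP_BACK_3 = range(3)

jitdriver = JitDriver(greens=['i'], reds=['res', 'a'])
code = [CO_INCREASE, CO_INCREASE, CO_INCREASE,
        CO_JUMP_BACK_3, CO_INCREASE, CO_DECREASE]


def main_interpreter_loop(a):
    i = 0
    res = 0
    c = len(code)
    while i < c:
        jitdriver.jit_merge_point(res=res, i=i, a=a)
        elem = code[i]
        if elem == CO_INCREASE:
            res = res + a
        elif elem == CO_DECREASE:
            res = res - a
        else:
            if res > 100:
                pass  # halting condition reached, fall through to the next opcode
            else:
                i = i - 3  # jump back to the first CO_INCREASE
                jitdriver.can_enter_jit(res=res, i=i, a=a)
                continue
        i = i + 1
    return res


if __name__ == "__main__":
    print(main_interpreter_loop(1))  # prints 102
```

With `a = 1` the three increments repeat until the accumulator reaches 102, then the trailing CO_INCREASE and CO_DECREASE cancel each other out.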

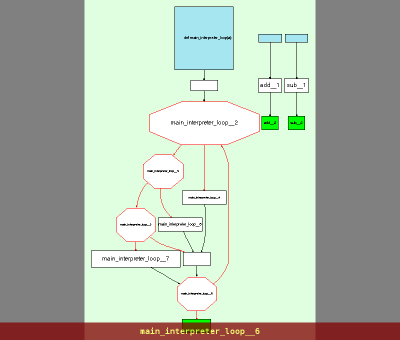

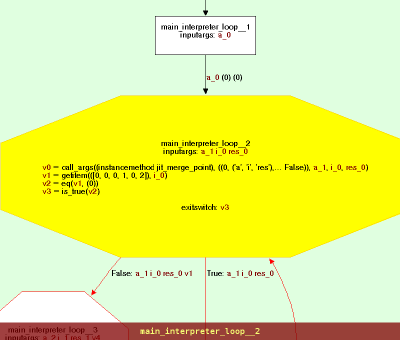

Let's look how this code is processed. This will also give a glance at how we work in this code. This particular piece can be found on a branch in pypy/jit/metainterp/test/test_loop.py and can be run with ./test_all.py jit/metainterp/test/test_loop.py -k test_example -s --view from pypy directory. The -s option lets you see the debugging output, while --view will show you some graphs. So, let's look at graphs in order:

And the same picture with a bit of zoom for the first block:

And the same picture with a bit of zoom for the first block:

This is the call graph of an interpreter loop, nothing magic so far. This is an intermediate representation of translation toolchain input. If you look around you can follow how the opcodes are dispatched (with a chain of ifs) and helpers called. Next graph is very boring, because it's a bit lower level representation of the same thing (you exit with q or escape btw :).

When we exit the graph viewer, we can see the trace generated by interpreting this graph with a given bytecode (variable code in paste above). It's something like:

    [compiler] ENTER
    [runner:cpu] call__4 [(''), * GCREF hidden, 0] -> 0
    [runner:cpu] int_eq [0, 0] -> True
    [runner:cpu] int_add [9, 1] -> 10
    [runner:cpu] int_add [0, 1] -> 1
    [runner:cpu] int_lt [1, 6] -> True
    [runner:cpu] call__4 [(''), * GCREF hidden, 1] -> 0
    [runner:cpu] int_eq [0, 0] -> True
    [runner:cpu] int_add [10, 1] -> 11
    [runner:cpu] int_add [1, 1] -> 2
    [runner:cpu] int_lt [2, 6] -> True
    [runner:cpu] call__4 [(''), * GCREF hidden, 2] -> 0
    [runner:cpu] int_eq [0, 0] -> True
    [runner:cpu] int_add [11, 1] -> 12
    [runner:cpu] int_add [2, 1] -> 3
    [runner:cpu] int_lt [3, 6] -> True
    [runner:cpu] call__4 [(''), * GCREF hidden, 3] -> 1
    [runner:cpu] int_eq [1, 0] -> False
    [runner:cpu] int_eq [1, 2] -> False
    [runner:cpu] int_gt [12, 100] -> False
    [runner:cpu] int_sub [3, 3] -> 0
    [compiler] LEAVE

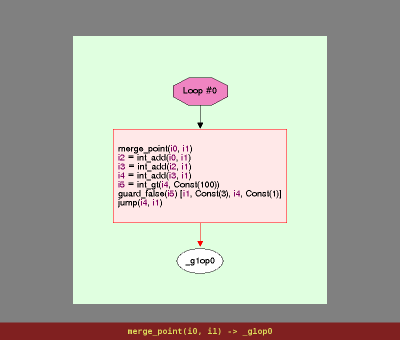

It's entering JIT, doing some primitive operations for bytecode dispatching and repeating the loop. Note that at the end of the interpreted loop (not to be confused with the interpreter loop), we see int_sub [3, 3] which resets the bytecode position to the beginning. At this time JIT (instructed by can_enter_jit hint) notices that all green variables are the same (here only i), hence we can compile the efficient loop from this point.

The loop contains 3 additions and a check (that res is not yet greater than 100), exactly the same as our interpreted program would do, but completely without interpretation overhead!
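Written out by hand as ordinary Python, the compiled loop behaves roughly like the sketch below. This only illustrates the shape of the trace and is not output of the JIT; the name `compiled_loop` is invented, and returning from the function stands in for a guard failure that hands control back to the interpreter.

```python
def compiled_loop(res, a):
    """Hand-written equivalent of the traced loop: three additions and one check."""
    while True:
        res = res + a  # first CO_INCREASE
        res = res + a  # second CO_INCREASE
        res = res + a  # third CO_INCREASE
        if res > 100:  # the check from CO_JUMP_BACK_3 no longer holds
            return res  # leave the loop, as a failing guard would


if __name__ == "__main__":
    print(compiled_loop(9, 1))  # prints 102
```

Note that there is no opcode fetch and no dispatch left in the loop body, which is the whole point of the exercise.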

As you might have noticed, there is no assembler involved so far. All of this instruction execution is done directly, in pure python. In fact, the code for executing instructions is located in jit/backend/llgraph which directly interprets instructions. This is by far simpler (and easier to debug) than x86 assembler.
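The idea of executing trace operations directly in Python can be pictured with a toy executor. This is not the code that lives in jit/backend/llgraph, just a sketch of the principle; the table `OPERATIONS` and the function `execute` are hypothetical names, and only a handful of the operation names visible in the trace above are covered.

```python
import operator

# Maps an operation name from the trace to a plain Python function.
OPERATIONS = {
    'int_add': operator.add,
    'int_sub': operator.sub,
    'int_eq': operator.eq,
    'int_lt': operator.lt,
    'int_gt': operator.gt,
}


def execute(opname, args):
    """Run a single trace operation on concrete argument values."""
    return OPERATIONS[opname](*args)


if __name__ == "__main__":
    # A few lines of the trace, replayed by the toy executor.
    for opname, args in [('int_add', [9, 1]), ('int_lt', [1, 6]),
                         ('int_gt', [12, 100]), ('int_sub', [3, 3])]:
        print("%s %r -> %r" % (opname, args, execute(opname, args)))
```

Because every operation is an ordinary function call, a failing operation shows up as a normal Python traceback, which is a large part of why this style is easier to debug than assembler.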

And this is basically it: a very simple interpreter and a JIT for it. Of course we actually can generate assembler for that. The missing piece is optimizing the generated graphs. For this example we're done once the interpretation overhead is removed, but with more complex examples it's important to further optimize traces. Hopefully this, and how we actually generate assembler, will be topics for the next blog posts.

Cheers,fijal

PyPy on Mobiles, at OpenBossa

Next week i am going to give a talk on PyPy at OpenBossa, a developer conference on embedded platforms. I've written up a bit more of my background and why i find it very interesting to go there on my blog. Probably will mostly follow up there or on twitter and not much here on the PyPy blog because it's not all about PyPy. To summarize how i see it: i think there is great potential for Python and PyPy on mobiles and am thrilled to hear about what's going on currently and to discuss opportunities.

cheers, holger

Applying a Tracing JIT to an Interpreter

After I had failed once more to explain to someone on IRC what the idea behind the current JIT generator work of PyPy is, I decided to just write a blog post to explain it. Here it is :-). The post turned out to be a bit long, so please bear with me.

The goal of the post is to give an understanding of how PyPy's JIT generator is going to work. To do this, I will look at what happens when you write an interpreter in Java and apply a completely normal tracing JIT to it (for this reason all the code examples will be in some sort of pseudo-Java). The resulting generated machine code is bad, so I will explain a way to fix the occurring problem.

The techniques I describe here are conceptually similar to what we are doing in PyPy. The details (as usual) are different. The reason why I am trying to explain things in this way is that I can start from tracing JITs, which are a known existing technique.

To understand the following, it is helpful to already know a bit how a normal tracing JIT works. I will give a reminder of how it is working, but there also exist a couple of more thorough introductions on the web already. I also will leave out a lot of details about the more detailed workings of tracing JITs and only explain the things that are relevant to what I am trying to get to here.

Tracing JITs

Tracing JITs are an idea explored by the Dynamo project in the context of dynamic optimization of machine code at runtime. The techniques were then successfully applied to Java VMs and are now being used by Mozilla's TraceMonkey JavaScript VM. They are built on some basic assumptions:

- programs spend most of their runtime in loops

- several iterations of the same loop are likely to take similar code paths

- the best way to gain information about the behaviour of a program is to observe it

The basic approach of a tracing JIT is to only generate machine code for commonly executed loops and to interpret the rest of the program. The code for those common loops however should be highly optimized, including aggressive inlining.

The generation of loops works as follows: At first, everything is interpreted. The interpreter does a bit of lightweight profiling to figure out which loops are run often. When a common loop is identified, the interpreter enters a special mode (called tracing mode). When in tracing mode, the interpreter records a history (the trace) of all the operations it executes, in addition to actually performing the operations. During tracing, the trace is repeatedly checked whether the interpreter is at a position in the program that it had seen earlier in the trace. If this happens, the trace recorded corresponds to a loop in the program that the tracing interpreter is running. At this point, this loop is turned into machine code by taking the trace and making machine code versions of all the operations in it.

This process assumes that the path through the loop that was traced is a "typical" example of possible paths (which is statistically likely). Of course it is possible that later another path through the loop is taken, therefore the machine code will contain guards, which check that the path is still the same. If during execution of the machine code a guard fails, the machine code is left and execution falls back to using interpretation (there are more complex mechanisms in place to still produce more code for the cases of guard failures, but they are of no importance for this post).

It is important to understand when the tracer considers a loop in the trace to be closed. This happens when the position key is the same as at an earlier point. The position key describes the position of the execution of the program, e.g. usually contains things like the function currently being executed and the program counter position of the tracing interpreter.
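The loop-closing check can be sketched in a few lines of Python. This is a simplification under stated assumptions: a position key is taken to be a plain tuple such as (function name, program counter), only one operation is recorded per position, and the class `Tracer` with its `record` method is a hypothetical name chosen for this example.

```python
class Tracer(object):
    """Toy tracer: records operations and detects when a position key repeats."""

    def __init__(self):
        self.trace = []  # recorded (position_key, operation) pairs
        self.seen = {}   # position key -> index in the trace where it first occurred

    def record(self, position_key, operation):
        """Record one operation.

        Returns the list of operations forming the loop if `position_key`
        was seen before (the loop is closed), otherwise None.
        """
        if position_key in self.seen:
            start = self.seen[position_key]
            return [op for _, op in self.trace[start:]]
        self.seen[position_key] = len(self.trace)
        self.trace.append((position_key, operation))
        return None


if __name__ == "__main__":
    tracer = Tracer()
    steps = [(('f', 0), 'setup'), (('f', 1), 'compare'),
             (('f', 2), 'add'), (('f', 1), 'compare')]
    for key, operation in steps:
        loop = tracer.record(key, operation)
        if loop is not None:
            print("loop closed:", loop)
```

Everything recorded before the first occurrence of the repeated key (here `setup`) is not part of the loop and would not end up in the machine code loop.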

Let's look at a small example. Take the following code:

    int sum_1_to_n(int n) {
        int result = 0;
        while (n >= 0) {
            result += n;
            n -= 1;
        }
        return result;
    }

The tracing JIT will at one point trace the execution of the while loop in sum_1_to_n. The trace might look as follows:

    guard_true(n >= 0);
    result += n;
    n -= 1;
    <loop_back>

This trace will then be turned into machine code. Note that the machine code loop is by itself infinite and can only be left via a guard failure.
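How an infinite loop with a guard behaves can be mimicked in Python. The sketch below is only an illustration: `GuardFailed`, `guard_true` and `run_trace` are invented names, and the exception plays the role of leaving the machine code and falling back to the interpreter, which in this tiny function has nothing left to do except return the result.

```python
class GuardFailed(Exception):
    """Raised when a guard does not hold; carries the state needed to resume."""

    def __init__(self, n, result):
        Exception.__init__(self)
        self.n = n
        self.result = result


def guard_true(condition, n, result):
    if not condition:
        raise GuardFailed(n, result)


def run_trace(n, result):
    """The trace as a loop: infinite by itself, left only via a guard failure."""
    while True:  # <loop_back>
        guard_true(n >= 0, n, result)
        result += n
        n -= 1


def sum_1_to_n(n):
    try:
        run_trace(n, 0)
    except GuardFailed as failure:
        # Fallback path: the interpreter would resume after the while loop,
        # where the only remaining work is returning the result.
        return failure.result


if __name__ == "__main__":
    print(sum_1_to_n(4))  # prints 10
```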

A slightly more complex example:

    int f(int a, int b) {
        if (b % 46 == 41)
            return a - b;
        else
            return a + b;
    }

    int strange_sum(int n) {
        int result = 0;
        while (n >= 0) {
            result = f(result, n);
            n -= 1;
        }
        return result;
    }

The trace of the loop in strange_sum would maybe look like this:

    guard_true(n >= 0);
    a = result;
    b = n;
    guard_false(b % 46 == 41);
    result = a + b;
    n -= 1;
    <loop_back>

This would then be turned into machine code. Note how f was inlined into the loop and how the common else case was turned into machine code, while the other one is implemented via a guard failure.
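The interplay between the inlined common path and the guard for the rare path can be sketched in Python. This is a hand-written model, not generated code: `strange_sum_traced` is an invented name, and calling `f` on the rare path stands in for falling back to interpretation for that one iteration.

```python
def f(a, b):
    if b % 46 == 41:
        return a - b
    else:
        return a + b


def strange_sum_interpreted(n):
    """Reference version, a direct transcription of strange_sum."""
    result = 0
    while n >= 0:
        result = f(result, n)
        n -= 1
    return result


def strange_sum_traced(n):
    """Model of the trace: f is inlined, the rare branch is a guard failure."""
    result = 0
    while True:
        if not (n >= 0):  # guard_true(n >= 0) fails: leave the loop
            return result
        a = result
        b = n
        if b % 46 == 41:  # guard_false(b % 46 == 41) fails
            result = f(result, n)  # fall back to the general code once
            n -= 1
            continue
        result = a + b  # the inlined common case of f
        n -= 1
        # <loop_back>


if __name__ == "__main__":
    print(strange_sum_traced(100) == strange_sum_interpreted(100))  # prints True
```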

Applying a Tracing JIT to an Interpreter

In the rest of the post we will explore what happens when the program that is being executed/compiled by the tracing JIT is itself a (bytecode) interpreter for another language.

A stylized bytecode interpreter for a simple programming language could look as follows:

    W_Object interpret(String bytecode, ...) {
        Stack<W_Object> stack = new Stack<W_Object>();
        int pc = 0;
        while (true) { // bytecode dispatch loop
            char instruction = bytecode.charAt(pc);
            pc += 1;
            switch (instruction) {
                case ADD:
                    W_Object arg2 = stack.pop();
                    W_Object arg1 = stack.pop();
                    stack.push(do_addition(arg1, arg2));
                    break;
                case SUB:
                    W_Object arg2 = stack.pop();
                    W_Object arg1 = stack.pop();
                    stack.push(do_subtraction(arg1, arg2));
                    break;
                case RETURN:
                    return stack.pop();
                case JUMP_BACKWARD:
                    pc -= (int)bytecode.charAt(pc);
                    break;
                case LOAD_INTEGER:
                    int value = (int)bytecode.charAt(pc);
                    pc += 1;
                    stack.push(new W_Integer(value));
                    break;
                case PRINT:
                    do_print(stack.pop());
                    break;
                case DUP:
                    stack.push(stack.peek());
                    break;
                case JUMP_IF_TRUE:
                    ...
                ...
            }
        }
    }

If we apply a tracing JIT to this function, it will trace and compile the execution of one bytecode, because after one bytecode the bytecode dispatch loop is closed. E.g. it might trace and produce machine code for the execution of a SUB. (Sidenote: this interpret function is an example where one of the assumptions of a tracing JIT breaks down: two iterations of the bytecode dispatch loop are rarely going to follow the same code path, because usually two consecutive bytecodes encode different instructions).

The important bit to remember here is that the tracing JIT will produce a machine code loop that corresponds to the bytecode dispatch loop in the interpret function. Let's see how we can change that.
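For readers more at home in Python than in pseudo-Java, the same dispatch loop can be sketched as below. Several things are assumptions of this sketch: the bytecode is a list of integers instead of a string, the opcode numbering is arbitrary, values are plain Python integers rather than W_Object instances, JUMP_IF_TRUE is left out, and `do_print` is passed in as a parameter so that output can be captured.

```python
# Arbitrary opcode numbering, assumed for this sketch.
ADD, SUB, RETURN, JUMP_BACKWARD, LOAD_INTEGER, PRINT, DUP = range(7)


def interpret(bytecode, do_print=print):
    stack = []
    pc = 0
    while True:  # bytecode dispatch loop
        instruction = bytecode[pc]
        pc += 1
        if instruction == ADD:
            arg2 = stack.pop()
            arg1 = stack.pop()
            stack.append(arg1 + arg2)
        elif instruction == SUB:
            arg2 = stack.pop()
            arg1 = stack.pop()
            stack.append(arg1 - arg2)
        elif instruction == RETURN:
            return stack.pop()
        elif instruction == JUMP_BACKWARD:
            pc -= bytecode[pc]  # the operand is the distance to jump back
        elif instruction == LOAD_INTEGER:
            value = bytecode[pc]
            pc += 1
            stack.append(value)
        elif instruction == PRINT:
            do_print(stack.pop())
        elif instruction == DUP:
            stack.append(stack[-1])
        else:
            raise ValueError("unknown instruction %r" % (instruction,))


if __name__ == "__main__":
    print(interpret([LOAD_INTEGER, 2, LOAD_INTEGER, 3, ADD, RETURN]))  # prints 5
```

Each trip around the `while True` loop handles exactly one bytecode, so a tracer that only watches this loop sees a different path on almost every iteration.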

Improving the Generated Code

If we want to make use of the fact that the program that is being jitted is itself an interpreter, we need to change the tracing JIT a bit. To be more precise we add a way for the user of the tracing JIT to add information to the position key that the tracing JIT uses to decide when a loop is closed. This is done by a call to a magic function add_to_position_key. This allows the program writer to influence the tracing JIT's behaviour.

The semantics of add_to_position_key is as follows: The method itself does not do anything. It has an effect only when it is seen during tracing. If it is seen during tracing, the tracer adds the argument of the call to the position key that the tracer is using to find out whether a loop was closed or not.

In the example of the interpret function above, we would add a call to this function into the while loop as follows:

    W_Object interpret(String bytecode, ...) {
        Stack stack = new Stack();
        int pc = 0;
        while (true) { // bytecode dispatch loop
            add_to_position_key(pc);
            add_to_position_key(bytecode);
            char instruction = bytecode.charAt(pc);
            pc += 1;
            switch (instruction) {
                case ADD:
                    ...

When the modified tracing JIT now traces the interpret function executing a SUB, something interesting happens. When the bytecode loop is closed, the modified tracing JIT does not consider the trace to be a loop, because the value of pc has been increased by one, so the position key differs. Instead it continues to trace, effectively unrolling the bytecode dispatch loop of interpret.

The only way for a loop to be considered closed is if the pc variable has the same value a second time. This can only happen after a JUMP_BACKWARD instruction has been executed. A JUMP_BACKWARD instruction will only be in the bytecode when the bytecode represents a loop. This means that the modified tracing JIT will trace the interpret function and will only consider that the trace represents a loop when the bytecode itself represents a loop! Thus, a machine code loop will eventually be created that corresponds to the loop in the bytecode.
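The effect of putting `pc` into the position key can be sketched in Python. This is a toy model under assumptions: the trace holds one entry per executed bytecode (a real tracer records the individual low-level operations), the key is just `pc` because only a single bytecode list is involved, the opcode numbering and the function name `trace_until_loop_closed` are invented, and the bytecode is assumed to contain a loop.

```python
# Arbitrary opcode numbering, assumed for this sketch.
LOAD_INTEGER, ADD, JUMP_BACKWARD = range(3)
NAMES = ['LOAD_INTEGER', 'ADD', 'JUMP_BACKWARD']


def trace_until_loop_closed(bytecode):
    """Interpret `bytecode`, recording instructions until a pc value repeats.

    Returns the part of the trace that forms the loop, as (pc, name) pairs.
    """
    stack = []
    pc = 0
    trace = []  # (pc, instruction name) in execution order
    seen = {}   # pc -> index in the trace where that pc was first executed
    while pc not in seen:
        seen[pc] = len(trace)  # plays the role of add_to_position_key(pc)
        instruction = bytecode[pc]
        trace.append((pc, NAMES[instruction]))
        pc += 1
        if instruction == LOAD_INTEGER:
            stack.append(bytecode[pc])
            pc += 1
        elif instruction == ADD:
            arg2 = stack.pop()
            arg1 = stack.pop()
            stack.append(arg1 + arg2)
        elif instruction == JUMP_BACKWARD:
            pc -= bytecode[pc]
    return trace[seen[pc]:]


if __name__ == "__main__":
    # pc 0: LOAD_INTEGER 5, pc 2: LOAD_INTEGER 1, pc 4: ADD, pc 5: JUMP_BACKWARD 4
    program = [LOAD_INTEGER, 5, LOAD_INTEGER, 1, ADD, JUMP_BACKWARD, 4]
    for entry in trace_until_loop_closed(program):
        print(entry)
```

The first LOAD_INTEGER is executed only once, before the loop, so it is not part of the returned loop body; the body starts at the pc value that the JUMP_BACKWARD returns to.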

Let's look at an example. If we have a bytecode that corresponds to the following instructions:

    pc |   instruction
    ---+---------------------
    0  |  LOAD_INTEGER 0
    2  |  DUP
    3  |  PRINT
    4  |  LOAD_INTEGER 1
    6  |  ADD
    7  |  JUMP_BACKWARD 6

This loop will print integers starting from 0 and going on from there. The modified tracing JIT will unroll the bytecode dispatch until it sees the JUMP_BACKWARD bytecode. After that bytecode the pc will be 2 again. Thus the earlier position key is repeated, which means that the loop will be closed. The produced machine code will do the equivalent of the following Java code:

    ...
    guard_true(pc == 2)
    guard_true(bytecode == "... correct bytecode string ...")
    while (true) {
        instruction = bytecode.charAt(pc);
        pc += 1;
        guard_true(instruction == DUP);
        stack.push(stack.peek());

        instruction = bytecode.charAt(pc);
        pc += 1;
        guard_true(instruction == PRINT);
        do_print(stack.pop());

        instruction = bytecode.charAt(pc);
        pc += 1;
        guard_true(instruction == LOAD_INTEGER)
        value = (int)bytecode.charAt(pc);
        pc += 1
        stack.push(W_Integer(value))

        instruction = bytecode.charAt(pc);
        pc += 1;
        guard_true(instruction == ADD)
        arg2 = stack.pop()
        arg1 = stack.pop()
        stack.push(do_addition(arg1, arg2))

        instruction = bytecode.charAt(pc);
        pc += 1;
        guard_true(instruction == JUMP_BACKWARD)
        pc -= (int)bytecode.charAt(pc);
    }

This is machine code that essentially does what the bytecode above did. Of course the code still contains some remnants of the interpreter (like the program counter manipulations, the stack handling, etc), which would have to be removed by some clever enough optimization step. If this were done, the result would look a lot more natural.
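To give an impression of what "more natural" could mean, here is what the loop boils down to once the program counter and the stack are gone. This is written by hand, not produced by any optimizer, and rests on assumptions: `counting_loop` is an invented name, and a generator is used instead of printing only so that the infinite loop can be sampled safely.

```python
import itertools


def counting_loop(start=0):
    """Hand-simplified equivalent of the bytecode loop: emit a value, add one, repeat."""
    value = start
    while True:
        yield value        # corresponds to DUP followed by PRINT
        value = value + 1  # corresponds to LOAD_INTEGER 1 followed by ADD


if __name__ == "__main__":
    # Take only the first few values of the otherwise endless loop.
    print(list(itertools.islice(counting_loop(), 4)))  # prints [0, 1, 2, 3]
```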

Summary

If a tracing JIT is enhanced by a way to influence its loop-closing behaviour we can significantly improve its performance when the jitted program is itself an interpreter. The result is that in such a case the produced machine code will correspond to the functions that are being interpreted, not to the code of the interpreter itself.

Now, what does all this have to do with PyPy? What we have been working on for a while is a sort of tracing JIT for RPython which can be customized with a function very similar to the add_to_position_key described above. This will make it possible to make the tracing JIT generate code that corresponds to the code that the interpreter interprets. For example, we would add a call to add_to_position_key to SPy, PyPy's Smalltalk VM. Then the tracing JIT will produce machine code for Smalltalk-level loops, with all the usual benefits of a tracing JIT (like inlining of intermediate methods, constant-folding, ...). This JIT differs from normal tracing JITs in that it also supports very powerful constant-folding and allocation-removal optimizations. Those optimizations will (hopefully) be the content of a later blog post.

The basics of this process have been working fine for quite a while. What the work currently focuses on is improving the optimizers to remove not only the bytecode manipulation code, but also the stack handling, and a large number of other inefficiencies.

Wow, that's very cool! PyPy is an amazing novel project, but how did you guys ever think of this?

Very nice. You might want to have a look at Sullivan, et al (2003) Dynamic Native Optimization of Interpreters. They also identify the need to record not only the native PC but also the interpreter's virtual PC to identify useful trace heads. They provide three intrinsic functions (compared to your single add_to_position_key) to achieve this. Further, they provide three more intrinsics to support constant propagation.

Wow, that's extremely interesting! It's indeed very similar to what I describe in the blog post (apparently Armin knew of the paper, but I didn't). Of course they are severely hampered by the fact that the system is working on assembler level, so they don't really have enough information available to do really interesting optimizations.

The next Leysin Winter Sprint

PyPy Leysin Winter Sprint (14th-21st April 2009)

The next PyPy sprint will be in Leysin, Switzerland, for the sixth time. This sprint will take place immediately after Easter. This is a fully public sprint: newcomers and topics other than those proposed below are welcome.

For more information see the announcement.

Wroclaw 2009 sprint progress report

Hello.

We have just finished probably the smallest sprint ever in PyPy history. For most of the time it was just me and Armin pairing together.

We also had a chance to work a bit with people from the University, but there were definitely not enough core developers to organize the work in a reasonable manner. At some point we ended up having two pairs containing four people each.

Jakub and Bartosz (who were our gentle hosts) worked on getting PyPy's sandbox integrated with django. It's still just an example of what you can do (ie you can do much more), but it's already interesting to look at. The code can be found in user dir. This server (not yet online anywhere, sorry) is able to run untrusted python code provided by the user inside a fully configurable sandbox.

We also implemented missing peepholer optimizations from CPython, finding out that some peepholer tests were failing, just because PyPy is optimizing better :-)

The main part of the sprint was work on the JIT (most notably the fifth generation of the JIT), which was moved from the obscure directory in Carl's user in svn (which contains branches these days!) into a PyPy branch. It's still very much work in progress and a lot of pen and paper or handwaving was involved, but we were able to implement a lot of basics in record time.

Right now we need a lot of rest after the exhausting sprint, but after that, stay tuned for more information about the progressing JIT!

Cheers,

fijal

Great to see both concrete work on the JIT and some practical applications for PyPy making progress. Keep up the good work.

See you at PyCon.

Wroclaw 2009 PyPy sprint and talk

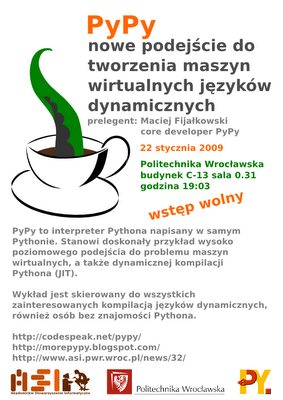

The next PyPy sprint will be held in Wrocław, Poland, 7-14th February 2009. This is a fully public sprint and all newcomers are welcome. Preceding the sprint there will be a talk at the University of Technology in Wrocław on the 22nd of January.

For detailed info about the sprint, look here.

The talk will be a general, high-level overview of the PyPy project. There is a very nice poster, made by Jakub Gustak and Bartosz Skowron (in Polish):

Talk details:

- Location: Politechnika Wrocławska, budynek C-13, sala 0.31

- Date: 22nd January 2009, 19:00

- Language: very likely Polish, although the talk can just as well be in English if some non-Polish speakers show up.

fijal

Pycon 2009

Hello.

Both of our PyPy talks have been accepted for Pycon US 2009. Although both are related to PyPy, they're vastly different in topic, attitude and target audience.

The first one is a classic PyPy status talk - we'll mostly talk about our achievements from the last year (readers of this blog are aware of most, but not all :) as well as some general introduction and plans for the future.

The second one is about PyPy's sandboxing features. This is, in my opinion, a very underestimated feature, also by us, because it's not really well advertised or documented. The main purpose of the talk is to present to the general public how this works and how to use it. Hopefully we will get to work on this and publish a bit more about it ahead of Pycon. Unlike Zope's Restricted Python, it provides you with the full Python language, inside a fully virtualized sandbox, controlled from an external process by a custom security policy. Stay tuned for more :-)
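To give a rough feel for the "controlled by a custom security policy" idea, here is a toy sketch in plain Python. It is not PyPy's sandbox API and provides no real isolation: the names `ReadOnlyPolicy`, `untrusted_program` and `run_under_policy` are made up for illustration, and a generator stands in for the separate sandboxed process. The only point is that every external operation goes through a controller that answers according to its policy.

```python
class ReadOnlyPolicy:
    """Hypothetical policy: serve reads from a virtual file table, deny the rest."""

    def __init__(self, virtual_files):
        self.virtual_files = dict(virtual_files)

    def handle(self, operation, *args):
        # Only one operation is allowed; everything else is refused.
        if operation == "read_file":
            (path,) = args
            if path in self.virtual_files:
                return ("ok", self.virtual_files[path])
            return ("error", "no such file: " + path)
        return ("error", "operation not permitted: " + operation)


def untrusted_program():
    """Stand-in for sandboxed code: each external operation is a yielded request."""
    content = yield ("read_file", "/etc/motd")
    result = yield ("delete_file", "/etc/motd")
    return (content, result)


def run_under_policy(program, policy):
    """Controller loop: answer every request of the program via the policy."""
    gen = program()
    log = []
    try:
        request = next(gen)
        while True:
            operation, *args = request
            reply = policy.handle(operation, *args)
            log.append((operation, reply[0]))
            request = gen.send(reply)
    except StopIteration as stop:
        # The program finished; its return value travels in the exception.
        return stop.value, log


policy = ReadOnlyPolicy({"/etc/motd": "hello"})
value, log = run_under_policy(untrusted_program, policy)
print(value)
print(log)
```

In this sketch the program never touches a real file: the read is answered from the virtual table and the delete is refused, which is the kind of decision a security policy in the controlling process would make.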

See you at Pycon 2009!

Cheers,

fijal and holger

Can't wait to hear it, Fijal gave a fantastic talk last year and I'm excited for this year's as well. Really hoping it doesn't conflict with my panel :)

hi,

would you have something to say about this?

https://www.python-forum.org/pythonforum/viewtopic.php?f=1&t=10744&sid=304ad6507b0db8420ae1df9f6c1522cd

thx

I'm not sure this is a Google project. It's hosted on Google code for sure, but anyone can do that.

All three of the primary developers are Google employees.

The LLVM supports JIT, so compiling Python into LLVM bytecode will give JIT for free.

Anonymous#1, this is an extremely valuable note to take in an open source world, you know ;-)

PyPy folks, keep up the good work!

But... Sometimes I miss updates on this blog. Not in the sense that you slack on it, but in the sense that I miss some "technically intermediate" updates when there is no news on breakthroughs.

One thing I miss most is the retro style articles on how some things that are "established" now got to be this way. The design by evolution things. Stuff that both educates and helps to get acquainted with the code one might like to hack one day.

I don't understand: These days, Google's V8 claims to be 56x faster than common JavaScript, TraceMonkey is in the same league, as well as Nitro, etc. Way before, Psyco sped up Python (theoretically) up to C's speed for algorithmic code, and up to 4x for common code.

Now Unladen Swallow aims for "only" a 5x speedup. Isn't that too little, seeing what the above projects are getting nowadays?

Or am I getting confused by their terminology? (What exactly is the meaning of 5x here?)

The exact meaning of 5x is, I *think*, "5x on tests derived from Google internal apps". It's a bit low, but note that the great speedups of JS engines are for simple algorithmic code (the kind that Psyco speeds up greatly).

It would be a good speedup for a lot of people though (of course we aim to speed up stuff according to JS engines ;)

Cheers,

fijal

Maciej, this is a reply posted on the project's FAQ page:

Comment by collinw, Today (8 hours ago)

luismgz: translating Python to JavaScript would be easy to implement for about 80% of the language, but you'd hit a wall in implementing that last 20%. Just ask the Jython, PyPy and IronPython teams how hard 100% compatibility is. They've done some really heroic work to implement every dark and musty corner of the language, and I think they'd be the first to tell you that it's easy to get something like the Fibonacci function working, but things like metaclasses are a different story. We hope to side-step that by reusing as much of CPython as possible.

Psyco's claimed benefits of 100x speed-up on algorithmic code is rarely seen in real applications. It can certainly be used to optimize hotspots that fit Psyco's profile, but in examining the performance of some Google applications that use Psyco, we found that they see only a ~10% improvement in overall CPU usage. While that might be a valuable savings for some applications, it's not 100x, nor even the 2-4x low-end estimate that I've seen in the Psyco docs.

Are our performance goals too modest? We don't think so. Our team is small -- only two full-time engineers -- and we want to allow for unexpected surprises along the way. We feel that 5x is a good goal for the time being, especially given that we may need to make changes to LLVM along the way. If things go astoundingly well, we may raise those numbers, but for now, we're comfortable with our stated goals.

Maciej said: "It would be a good speedup for a lot of people though (of course we aim to speed up stuff according to JS engines ;)"

Whatever happened to the "secret goal" of being faster than C...?