Using Tkinter and IDLE with PyPy

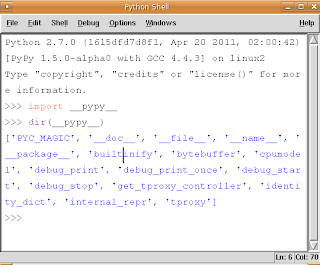

We are pleased to announce that Tkinter, the GUI library based on TCL/TK, now

works with PyPy.

Tkinter is composed of two parts:

- _tkinter, a module written in C which interfaces with the TCL world

- Tkinter, a pure Python package which wraps _tkinter to expose the pythonic API we are used to

At the moment, _tkinter is not distributed with PyPy because our build system does not support automatic compilation of C extension. Instead, it is necessary to install it manually, either directly from source or by easy_installing/pip installing tkinter-pypy from PyPI.

For everything to work correctly, you need a recent build of PyPy: the following is a step-by-step guide to install _tkinter in a PyPy nightly build for Linux 64 bit; for other architectures, look at the nightly build page:

$ wget https://buildbot.pypy.org/nightly/trunk/pypy-c-jit-43485-1615dfd7d8f1-linux64.tar.bz2 $ tar xfv pypy-c-jit-43485-1615dfd7d8f1-linux64.tar.bz2 $ cd pypy-c-jit-43485-1615dfd7d8f1-linux64/ $ wget https://peak.telecommunity.com/dist/ez_setup.py $ ./bin/pypy ez_setup.py # install setuptools $ ./bin/easy_install tkinter-pypyOnce you complete the steps above, you can start using Tkinter from your python programs. In particular, you can use IDLE, the IDE which is part of the Python standard library. To start IDLE, type:

$ ./bin/pypy -m idlelib.idleHave fun :-)

Tutorial Part 2: Adding a JIT

This is the second part of a tutorial written by Andrew Brown. The first part described how to write an interpreter with PyPy.

Adding JIT

Translating RPython to C is pretty cool, but one of the best features of PyPy is its ability to generate just-in-time compilers for your interpreter. That's right, from just a couple hints on how your interpreter is structured, PyPy will generate and include a JIT compiler that will, at runtime, translate the interpreted code of our BF language to machine code!

So what do we need to tell PyPy to make this happen? First it needs to know where the start of your bytecode evaluation loop is. This lets it keep track of instructions being executed in the target language (BF).

We also need to let it know what defines a particular execution frame. Since our language doesn't really have stack frames, this boils down to what's constant for the execution of a particular instruction, and what's not. These are called "green" and "red" variables, respectively.

Refer back to example2.py for the following.

In our main loop, there are four variables used: pc, program, bracket_map, and tape. Of those, pc, program, and bracket_map are all green variables. They define the execution of a particular instruction. If the JIT routines see the same combination of green variables as before, it knows it's skipped back and must be executing a loop. The variable "tape" is our red variable, it's what's being manipulated by the execution.

So let's tell PyPy this info. Start by importing the JitDriver class and making an instance:

from pypy.rlib.jit import JitDriver

jitdriver = JitDriver(greens=['pc', 'program', 'bracket_map'],

reds=['tape'])

And we add this line to the very top of the while loop in the mainloop function:

jitdriver.jit_merge_point(pc=pc, tape=tape, program=program,

bracket_map=bracket_map)

We also need to define a JitPolicy. We're not doing anything fancy, so this is all we need somewhere in the file:

def jitpolicy(driver):

from pypy.jit.codewriter.policy import JitPolicy

return JitPolicy()

See this example at example3.py

Now try translating again, but with the flag --opt=jit:

$ python ./pypy/pypy/translator/goal/translate.py --opt=jit example3.py

It will take significantly longer to translate with JIT enabled, almost 8 minutes on my machine, and the resulting binary will be much larger. When it's done, try having it run the mandelbrot program again. A world of difference, from 12 seconds compared to 45 seconds before!

Interestingly enough, you can see when the JIT compiler switches from interpreted to machine code with the mandelbrot example. The first few lines of output come out pretty fast, and then the program gets a boost of speed and gets even faster.

A bit about Tracing JIT Compilers

It's worth it at this point to read up on how tracing JIT compilers work. Here's a brief explanation: The interpreter is usually running your interpreter code as written. When it detects a loop of code in the target language (BF) is executed often, that loop is considered "hot" and marked to be traced. The next time that loop is entered, the interpreter gets put in tracing mode where every executed instruction is logged.

When the loop is finished, tracing stops. The trace of the loop is sent to an optimizer, and then to an assembler which outputs machine code. That machine code is then used for subsequent loop iterations.

This machine code is often optimized for the most common case, and depends on several assumptions about the code. Therefore, the machine code will contain guards, to validate those assumptions. If a guard check fails, the runtime falls back to regular interpreted mode.

A good place to start for more information is https://en.wikipedia.org/wiki/Just-in-time_compilation

Debugging and Trace Logs

Can we do any better? How can we see what the JIT is doing? Let's do two things.

First, let's add a get_printable_location function, which is used during debug trace logging:

def get_location(pc, program, bracket_map):

return "%s_%s_%s" % (

program[:pc], program[pc], program[pc+1:]

)

jitdriver = JitDriver(greens=['pc', 'program', 'bracket_map'], reds=['tape'],

get_printable_location=get_location)

This function is passed in the green variables, and should return a string. Here, we're printing out the BF code, surrounding the currently executing instruction with underscores so we can see where it is.

Download this as example4.py and translate it the same as example3.py.

Now let's run a test program (test.b, which just prints the letter "A" 15 or so times in a loop) with trace logging:

$ PYPYLOG=jit-log-opt:logfile ./example4-c test.b

Now take a look at the file "logfile". This file is quite hard to read, so here's my best shot at explaining it.

The file contains a log of every trace that was performed, and is essentially a glimpse at what instructions it's compiling to machine code for you. It's useful to see if there are unnecessary instructions or room for optimization.

Each trace starts with a line that looks like this:

[3c091099e7a4a7] {jit-log-opt-loop

and ends with a line like this:

[3c091099eae17d jit-log-opt-loop}

The next line tells you which loop number it is, and how many ops are in it. In my case, the first trace looks like this:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 |

[3c167c92b9118f] {jit-log-opt-loop

# Loop 0 : loop with 26 ops

[p0, p1, i2, i3]

debug_merge_point('+<[>[_>_+<-]>.[<+>-]<<-]++++++++++.', 0)

debug_merge_point('+<[>[>_+_<-]>.[<+>-]<<-]++++++++++.', 0)

i4 = getarrayitem_gc(p1, i2, descr=<SignedArrayDescr>)

i6 = int_add(i4, 1)

setarrayitem_gc(p1, i2, i6, descr=<SignedArrayDescr>)

debug_merge_point('+<[>[>+_<_-]>.[<+>-]<<-]++++++++++.', 0)

debug_merge_point('+<[>[>+<_-_]>.[<+>-]<<-]++++++++++.', 0)

i7 = getarrayitem_gc(p1, i3, descr=<SignedArrayDescr>)

i9 = int_sub(i7, 1)

setarrayitem_gc(p1, i3, i9, descr=<SignedArrayDescr>)

debug_merge_point('+<[>[>+<-_]_>.[<+>-]<<-]++++++++++.', 0)

i10 = int_is_true(i9)

guard_true(i10, descr=<Guard2>) [p0]

i14 = call(ConstClass(ll_dict_lookup__dicttablePtr_Signed_Signed), ConstPtr(ptr12), 90, 90, descr=<SignedCallDescr>)

guard_no_exception(, descr=<Guard3>) [i14, p0]

i16 = int_and(i14, -9223372036854775808)

i17 = int_is_true(i16)

guard_false(i17, descr=<Guard4>) [i14, p0]

i19 = call(ConstClass(ll_get_value__dicttablePtr_Signed), ConstPtr(ptr12), i14, descr=<SignedCallDescr>)

guard_no_exception(, descr=<Guard5>) [i19, p0]

i21 = int_add(i19, 1)

i23 = int_lt(i21, 114)

guard_true(i23, descr=<Guard6>) [i21, p0]

guard_value(i21, 86, descr=<Guard7>) [i21, p0]

debug_merge_point('+<[>[_>_+<-]>.[<+>-]<<-]++++++++++.', 0)

jump(p0, p1, i2, i3, descr=<Loop0>)

[3c167c92bc6a15] jit-log-opt-loop}

|

I've trimmed the debug_merge_point lines a bit, they were really long.

So let's see what this does. This trace takes 4 parameters: 2 object pointers (p0 and p1) and 2 integers (i2 and i3). Looking at the debug lines, it seems to be tracing one iteration of this loop: "[>+<-]"

It starts executing the first operation on line 4, a ">", but immediately starts executing the next operation. The ">" had no instructions, and looks like it was optimized out completely. This loop must always act on the same part of the tape, the tape pointer is constant for this trace. An explicit advance operation is unnecessary.

Lines 5 to 8 are the instructions for the "+" operation. First it gets the array item from the array in pointer p1 at index i2 (line 6), adds 1 to it and stores it in i6 (line 7), and stores it back in the array (line 8).

Line 9 starts the "<" instruction, but it is another no-op. It seems that i2 and i3 passed into this routine are the two tape pointers used in this loop already calculated. Also deduced is that p1 is the tape array. It's not clear what p0 is.

Lines 10 through 13 perform the "-" operation: get the array value (line 11), subtract (line 12) and set the array value (line 13).

Next, on line 14, we come to the "]" operation. Lines 15 and 16 check whether i9 is true (non-zero). Looking up, i9 is the array value that we just decremented and stored, now being checked as the loop condition, as expected (remember the definition of "]"). Line 16 is a guard, if the condition is not met, execution jumps somewhere else, in this case to the routine called <Guard2> and is passed one parameter: p0.

Assuming we pass the guard, lines 17 through 23 are doing the dictionary lookup to bracket_map to find where the program counter should jump to. I'm not too familiar with what the instructions are actually doing, but it looks like there are two external calls and 3 guards. This seems quite expensive, especially since we know bracket_map will never change (PyPy doesn't know that). We'll see below how to optimize this.

Line 24 increments the newly acquired instruction pointer. Lines 25 and 26 make sure it's less than the program's length.

Additionally, line 27 guards that i21, the incremented instruction pointer, is exactly 86. This is because it's about to jump to the beginning (line 29) and the instruction pointer being 86 is a precondition to this block.

Finally, the loop closes up at line 28 so the JIT can jump to loop body <Loop0> to handle that case (line 29), which is the beginning of the loop again. It passes in parameters (p0, p1, i2, i3).

Optimizing

As mentioned, every loop iteration does a dictionary lookup to find the corresponding matching bracket for the final jump. This is terribly inefficient, the jump target is not going to change from one loop to the next. This information is constant and should be compiled in as such.

The problem is that the lookups are coming from a dictionary, and PyPy is treating it as opaque. It doesn't know the dictionary isn't being modified or isn't going to return something different on each query.

What we need to do is provide another hint to the translation to say that the dictionary query is a pure function, that is, its output depends only on its inputs and the same inputs should always return the same output.

To do this, we use a provided function decorator pypy.rlib.jit.purefunction, and wrap the dictionary call in a decorated function:

@purefunction

def get_matching_bracket(bracket_map, pc):

return bracket_map[pc]

This version can be found at example5.py

Translate again with the JIT option and observe the speedup. Mandelbrot now only takes 6 seconds! (from 12 seconds before this optimization)

Let's take a look at the trace from the same function:

[3c29fad7b792b0] {jit-log-opt-loop

# Loop 0 : loop with 15 ops

[p0, p1, i2, i3]

debug_merge_point('+<[>[_>_+<-]>.[<+>-]<<-]++++++++++.', 0)

debug_merge_point('+<[>[>_+_<-]>.[<+>-]<<-]++++++++++.', 0)

i4 = getarrayitem_gc(p1, i2, descr=<SignedArrayDescr>)

i6 = int_add(i4, 1)

setarrayitem_gc(p1, i2, i6, descr=<SignedArrayDescr>)

debug_merge_point('+<[>[>+_<_-]>.[<+>-]<<-]++++++++++.', 0)

debug_merge_point('+<[>[>+<_-_]>.[<+>-]<<-]++++++++++.', 0)

i7 = getarrayitem_gc(p1, i3, descr=<SignedArrayDescr>)

i9 = int_sub(i7, 1)

setarrayitem_gc(p1, i3, i9, descr=<SignedArrayDescr>)

debug_merge_point('+<[>[>+<-_]_>.[<+>-]<<-]++++++++++.', 0)

i10 = int_is_true(i9)

guard_true(i10, descr=<Guard2>) [p0]

debug_merge_point('+<[>[_>_+<-]>.[<+>-]<<-]++++++++++.', 0)

jump(p0, p1, i2, i3, descr=<Loop0>)

[3c29fad7ba32ec] jit-log-opt-loop}

Much better! Each loop iteration is an add, a subtract, two array loads, two array stores, and a guard on the exit condition. That's it! This code doesn't require any program counter manipulation.

I'm no expert on optimizations, this tip was suggested by Armin Rigo on the pypy-dev list. Carl Friedrich has a series of posts on how to optimize your interpreter that are also very useful: https://bit.ly/bundles/cfbolz/1

Final Words

I hope this has shown some of you what PyPy is all about other than a faster implementation of Python.

For those that would like to know more about how the process works, there are several academic papers explaining the process in detail that I recommend. In particular: Tracing the Meta-Level: PyPy's Tracing JIT Compiler.

See https://readthedocs.org/docs/pypy/en/latest/extradoc.html

Some interpreters are written to evaluate directly from the AST. i.e. they never generate bytecode, instead each node in the ast simply has the code to execute it as a "virtual" function. Could PyPy JIT such an interpreter? Or does it essentially assume a bytecode based interpreter?

In theory it should be able to, if it's written in RPython. Perhaps it would be harder to place the hints for the jit engine?

As far as I understand it, it still traces some kind of bytecode (generated from the RPython code), but uses the can_enter_jit hints to determine what to trace and the length of a trace.

If it'll be fast is another question though. Why not give it a try? (E.g. one could implement the LLVM kaleidoscope language in RPython.)

@Winston in theory nothing prevents JIT from working on AST-based interpreters. In practice however, it would require a bit of engineering to convince the JIT that the green (constant) argument is a complex object structure. That's however just engineering

It's actually not a problem at all to have an AST-based interpreter. In fact, the Prolog uses "ASTs" (Prolog is homoiconic, so the ASTs are just Prologs normal data structures).

Maciej: that's not a problem if your ASTs are actually immutable. If they aren't you have a problem which indeed requires some engineering.

The effect of the loop "[>+<-]" is

tape[position+1] += tape[position]

tape[position] = 0

We saw that PyPy can optimize the program counter away in this loop--but this loop could be executed in constant time. Will PyPy ever be able to optimize it to that degree?

Well, you finally motivated me to give it a try. I optimized the BF example and managed to get some pretty nice speed boosts all without dipping into the low level (aside from reading the log)

Great tutorial, but where can I find the 'test.b' file (mentioned for the tracing JIT) for a try?

hi guys. can jit merge points not be put inside methods? Going off example3.py, if I take the body of the while loop and move it into a method of the Tape class (along with the jitdriver), all the speed gains go away. can anyone explain why this happens? Thanks!

Tutorial: Writing an Interpreter with PyPy, Part 1

This is a guest blog post written by Andrew Brown, with help from the PyPy developers on the pypy-dev mailing list.

This tutorial's master copy and supporting files live at https://bitbucket.org/brownan/pypy-tutorial/

When I first learned about the PyPy project, it took me a while to figure out exactly what it was about. For those that don't already know, it's two things:

- A set of tools for implementing interpreters for interpreted languages

- An implementation of Python using this toolchain

The second part is probably what most people think PyPy is, but this tutorial is not about their Python interpreter. It is about writing your own interpreter for your own language.

This is the project I undertook to help myself better understand how PyPy works and what it's all about.

This tutorial assumes you know very little about PyPy, how it works, and even what it's all about. I'm starting from the very beginning here.

What PyPy Does

Here's a brief overview of what PyPy can do. Let's say you want to write an interpreted language. This involves writing some kind of source code parser, a bytecode interpretation loop, and lots of standard library code.

That's quite a bit of work for moderately complicated languages, and there's a lot of low level work involved. Writing the parser and compiler code usually isn't fun, that's why there are tools out there to generate parsers and compilers for you.

Even then, you still must worry about memory management in your interpreter, and you're going to be re-implementing a lot if you want data types like arbitrary precision integers, nice general hash tables, and such. It's enough to put someone off from implementing their idea for a language.

Wouldn't it be nice if you could write your language in an existing high level language like, for example, Python? That sure would be ideal, you'd get all the advantages of a high level language like automatic memory management and rich data types at your disposal. Oh, but an interpreted language interpreting another language would be slow, right? That's twice as much interpreting going on.

As you may have guessed, PyPy solves this problem. PyPy is a sophisticated toolchain for analyzing and translating your interpreter code to C code (or JVM or CLI). This process is called "translation", and it knows how to translate quite a lot of Python's syntax and standard libraries, but not everything. All you have to do is write your interpreter in RPython, a subset of the Python language carefully defined to allow this kind of analysis and translation, and PyPy will produce for you a very efficient interpreter.

Because efficient interpreters should not be hard to write.

The Language

The language I've chosen to implement is dead simple. The language runtime consists of a tape of integers, all initialized to zero, and a single pointer to one of the tape's cells. The language has 8 commands, described here:

- >

- Moves the tape pointer one cell to the right

- <

- Moves the tape pointer one cell to the left

- +

- Increments the value of the cell underneath the pointer

- -

- Decrements the value of the cell underneath the pointer

- [

- If the cell under the current pointer is 0, skip to the instruction after the matching ]

- ]

- Skip back to the matching [ (evaluating its condition)

- .

- Print out a single byte to stdout from the cell under the pointer

- ,

- Read in a single byte from stdin to the cell under the pointer

Any unrecognized bytes are ignored.

Some of you may recognize this language. I will be referring to it as BF.

One thing to notice is that the language is its own bytecode; there is no translation from source code to bytecode. This means that the language can be interpreted directly: the main eval loop of our interpreter will operate right on the source code. This simplifies the implementation quite a bit.

First Steps

Let's start out by writing a BF interpreter in plain old Python. The first step is sketching out an eval loop:

def mainloop(program):

tape = Tape()

pc = 0

while pc < len(program):

code = program[pc]

if code == ">":

tape.advance()

elif code == "<":

tape.devance()

elif code == "+":

tape.inc()

elif code == "-":

tape.dec()

elif code == ".":

sys.stdout.write(chr(tape.get()))

elif code == ",":

tape.set(ord(sys.stdin.read(1)))

elif code == "[" and value() == 0:

# Skip forward to the matching ]

elif code == "]" and value() != 0:

# Skip back to the matching [

pc += 1

As you can see, a program counter (pc) holds the current instruction index. The first statement in the loop gets the instruction to execute, and then a compound if statement decides how to execute that instruction.

The implementation of [ and ] are left out here, but they should change the program counter to the value of the matching bracket. (The pc then gets incremented, so the condition is evaluated once when entering a loop, and once at the end of each iteration)

Here's the implementation of the Tape class, which holds the tape's values as well as the tape pointer:

class Tape(object):

def __init__(self):

self.thetape = [0]

self.position = 0

def get(self):

return self.thetape[self.position]

def set(self, val):

self.thetape[self.position] = val

def inc(self):

self.thetape[self.position] += 1

def dec(self):

self.thetape[self.position] -= 1

def advance(self):

self.position += 1

if len(self.thetape) <= self.position:

self.thetape.append(0)

def devance(self):

self.position -= 1

As you can see, the tape expands as needed to the right, indefinitely. We should really add some error checking to make sure the pointer doesn't go negative, but I'm not worrying about that now.

Except for the omission of the "[" and "]" implementation, this code will work fine. However, if the program has a lot of comments, it will have to skip over them one byte at a time at runtime. So let's parse those out once and for all.

At the same time, we'll build a dictionary mapping between brackets, so that finding a matching bracket is just a single dictionary lookup. Here's how:

def parse(program):

parsed = []

bracket_map = {}

leftstack = []

pc = 0

for char in program:

if char in ('[', ']', '<', '>', '+', '-', ',', '.'):

parsed.append(char)

if char == '[':

leftstack.append(pc)

elif char == ']':

left = leftstack.pop()

right = pc

bracket_map[left] = right

bracket_map[right] = left

pc += 1

return "".join(parsed), bracket_map

This returns a string with all invalid instructions removed, and a dictionary mapping bracket indexes to their matching bracket index.

All we need is some glue code and we have a working BF interpreter:

def run(input):

program, map = parse(input.read())

mainloop(program, map)

if __name__ == "__main__":

import sys

run(open(sys.argv[1], 'r'))

If you're following along at home, you'll also need to change the signature of mainloop() and implement the bracket branches of the if statement. Here's the complete example: example1.py

At this point you can try it out to see that it works by running the interpreter under python, but be warned, it will be very slow on the more complex examples:

$ python example1.py 99bottles.b

You can find mandel.b and several other example programs (not written by me) in my repository.

PyPy Translation

But this is not about writing a BF interpreter, this is about PyPy. So what does it take to get PyPy to translate this into a super-fast executable?

As a side note, there are some simple examples in the pypy/translator/goal directory of the PyPy source tree that are helpful here. My starting point for learning this was the example "targetnopstandalone.py", a simple hello world for PyPy.

For our example, the module must define a name called "target" which returns the entry point. The translation process imports your module and looks for that name, calls it, and the function object returned is where it starts the translation.

def run(fp):

program_contents = ""

while True:

read = os.read(fp, 4096)

if len(read) == 0:

break

program_contents += read

os.close(fp)

program, bm = parse(program_contents)

mainloop(program, bm)

def entry_point(argv):

try:

filename = argv[1]

except IndexError:

print "You must supply a filename"

return 1

run(os.open(filename, os.O_RDONLY, 0777))

return 0

def target(*args):

return entry_point, None

if __name__ == "__main__":

entry_point(sys.argv)

The entry_point function is passed the command line arguments when you run the resulting executable.

A few other things have changed here too. See the next section...

About RPython

Let's talk a bit about RPython at this point. PyPy can't translate arbitrary Python code because Python is a bit too dynamic. There are restrictions on what standard library functions and what syntax constructs one can use. I won't be going over all the restrictions, but for more information see https://readthedocs.org/docs/pypy/en/latest/coding-guide.html#restricted-python

In the example above, you'll see a few things have changed. I'm now using low level file descriptors with os.open and os.read instead of file objects. The implementation of "." and "," are similarly tweaked (not shown above). Those are the only changes to make to this code, the rest is simple enough for PyPy to digest.

That wasn't so hard, was it? I still get to use dictionaries, expandable lists, and even classes and objects! And if low level file descriptors are too low for you, there are some helpful abstractions in the rlib.streamio module included with PyPy's "RPython standard library."

For the example thus far, see example2.py

Translating

If you haven't already, check yourself out the latest version of PyPy from their bitbucket.org repository:

$ hg clone https://bitbucket.org/pypy/pypy

(A recent revision is necessary because of a bugfix that makes my example possible)

The script to run is in "pypy/translator/goal/translate.py". Run this script, passing in our example module as an argument.

[A note added much later: this script has been moved to "rpython/bin/rpython".]

$ python ./pypy/pypy/translator/goal/translate.py example2.py

(You can use PyPy's python interpreter for extra speed, but it's not necessary)

PyPy will churn for a bit, drawing some nice looking fractals to your console while it works. It takes around 20 seconds on my machine.

The result from this is an executable binary that interprets BF programs. Included in my repository are some example BF programs, including a mandelbrot fractal generator, which takes about 45 seconds to run on my computer. Try it out:

$ ./example2-c mandel.b

Compare this to running the interpreter un-translated on top of python:

$ python example2.py mandel.b

Takes forever, doesn't it?

So there you have it. We've successfully written our own interpreter in RPython and translated it with the PyPy toolchain.

(more in the next blog post...)

Hmmmmmm, yum.

I'm going to translate this into Chinese, if you don't mind?

On my Ubuntu 10.10 laptop, the PyPy BF interpreter ran hanoi in ~20 sec and mandel in ~40 sec. By comparison, the beef BF interpreter (written in C) ran these in ~10 and ~20 sec., respectively. Not too shabby, PyPy.

Nice article though I'm really missing a simple benchmark between the python interpreter and the pypy interpreter. "Takes forever" vs "45 seconds" isn't as awesome of a conclusion as I'd hoped for.

@temptemptemp13: I think you are missing something much more substantial. This article is not about Python at all. It is about how to use the PyPy toolchain to implement a different language - in this case the brainfuck programming language.

While BF isn't a very useful language, it has the nice properties of being very small. Almost all of the language fits in a blog post.

I like how this article became family-friendly by actually avoiding calling BF by its name :-)

Amazing! Thanks for posting. I was wondering, what's about a pure C or C++ implementations, as close as reasonable to the python one? So I wrote them. You can read more details here, but the bottom line is that PyPy is (marginally) faster than C++, and (marginally) slower than C :-O

@Davide: you should compare your C version against the PyPy version WITH the JIT, as explained here:

https://morepypy.blogspot.com/2011/04/tutorial-part-2-adding-jit.html

I bet that PyPy will easily win :-)

Nice post. I just want to report that I tried running

/usr/share/pypy-1.6/pypy/translator/goal/translate.py example2.py

and got the following error.

This is with an Ubuntu 1.7 pypy package rebuilt on Debian squeeze (the 1.6 is a typo, it should be 1.7).

[translation:ERROR] Error:

[translation:ERROR] Traceback (most recent call last):

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/goal/translate.py", line 308, in main

[translation:ERROR] drv.proceed(goals)

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/driver.py", line 809, in proceed

[translation:ERROR] return self._execute(goals, task_skip = self._maybe_skip())

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/tool/taskengine.py", line 116, in _execute

[translation:ERROR] res = self._do(goal, taskcallable, *args, **kwds)

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/driver.py", line 286, in _do

[translation:ERROR] res = func()

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/driver.py", line 441, in task_backendopt_lltype

[translation:ERROR] from pypy.translator.backendopt.all import backend_optimizations

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/backendopt/all.py", line 2, in

[translation:ERROR] from pypy.translator.backendopt import removenoops

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/translator/backendopt/removenoops.py", line 5, in

[translation:ERROR] from pypy import conftest

[translation:ERROR] File "/usr/share/pypy-1.6/pypy/conftest.py", line 1, in

[translation:ERROR] import py, pytest, sys, os, textwrap, types

[translation:ERROR] ImportError: No module named pytest

[translation] start debugger...

> /usr/share/pypy-1.6/pypy/conftest.py(1)()

-> import py, pytest, sys, os, textwrap, types

(Pdb+)

So, it looks like pytest needs to be installed. This does not appear to be available as a Debian package.

Regards, Faheem Mitha

(faheem at faheem dot info)

This is a great post for anyone interested in programming languages :) Great post!

Now, with os.read() and os.write():

[translation:ERROR] Error:

[translation:ERROR] Traceback (most recent call last):

[translation:ERROR] File "/opt/local/lib/pypy/src/pypy-pypy-07e08e9c885c/pypy/translator/goal/translate.py", line 303, in main

[translation:ERROR] drv.proceed(goals)

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/translator/driver.py", line 771, in proceed

[translation:ERROR] return self._execute(goals, task_skip = self._maybe_skip())

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/translator/tool/taskengine.py", line 116, in _execute

[translation:ERROR] res = self._do(goal, taskcallable, *args, **kwds)

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/translator/driver.py", line 283, in _do

[translation:ERROR] res = func()

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/translator/driver.py", line 319, in task_annotate

[translation:ERROR] s = annotator.build_types(self.entry_point, self.inputtypes)

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/annotation/annrpython.py", line 89, in build_types

[translation:ERROR] return self.build_graph_types(flowgraph, inputcells, complete_now=complete_now)

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/annotation/annrpython.py", line 142, in build_graph_types

[translation:ERROR] self.complete()

[translation:ERROR] File "/opt/local/lib/pypy-2.0-b1/src/pypy-pypy-07e08e9c885c/pypy/annotation/annrpython.py", line 217, in complete

[translation:ERROR] raise AnnotatorError(text)

[translation:ERROR] AnnotatorError: -+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

[translation:ERROR] Blocked block -- operation cannot succeed

[translation:ERROR]

[translation:ERROR] v1 = ord(v0)

[translation:ERROR] In :

[translation:ERROR] Happened at file /Users/cacilhas/Workspace/Personal/brainfuck/src/brainfuck/parser.py line 29

[translation:ERROR]

[translation:ERROR] ==> tape.set(ord(os.read(0, 1)))

[translation:ERROR]

[translation:ERROR] Known variable annotations:

[translation:ERROR] v0 = SomeString(can_be_None=True)

I think that your "," implementation is incorrect, os.read returns an empty string on EOF, thus [0] triggers an exception.

According to Wikipedia, setting the cell to 0, -1 or leaving the cell unchanged each may be used to tell EOF apart from other characters.

I followed this tutorial again several years later :) (just for fun) using the newly published rpython toolchain now available up on PyPi. You can now just: pip install rpython -- I also wanted to point out that recent versions of the RPython toolchain have made advances in what it can translate it seems; specifically I did not need to change the open(...).read() parts to lower level os.read() calls.

PyPy Göteborg Post-Easter Sprint April 25 - May 1 2011

The next PyPy sprint will be in Gothenburg, Sweden. It is a public sprint, very suitable for newcomers. We'll focus on making the 1.5 release (if it hasn't already happened) and whatever interests the Sprint attendees.

Topics and goals

The main goal is to polish and release PyPy 1.5, supporting Python 2.7 as well as the last few months' improvements in the JIT (provided that it hasn't already happened). Other topics:

- Going over our documentation, and classifying our docs in terms of mouldiness. Deciding what needs writing, and maybe writing it.

- Helping people get their code running with PyPy

- maybe work on EuroPython Training, and talks

- Summer of Code preparation

- speed.pypy.org

- any other programming task is welcome too -- e.g. tweaking the Python or JavaScript interpreter, Stackless support, and so on.

Location

The sprint will be held in the apartment of Laura Creighton and Jacob Hallén which is at Götabergsgatan 22 in Gothenburg, Sweden. Here is a map. This is in central Gothenburg. It is between the tram stops of Vasaplatsen and Valand, (a distance of 4 blocks) where many lines call -- the 2, 3, 4, 5, 7, 10 and 13.

Probably cheapest and not too far away is to book accomodation at SGS Veckobostader. The Elite Park Avenyn Hotel is a luxury hotel just a few blocks away. There are scores of hotels a short walk away from the sprint location, suitable for every budget, desire for luxury, and desire for the unusual. You could, for instance, stay on a boat. Options are too numerous to go into here. Just ask in the mailing list or on the blog.

Hours will be from 10:00 until people have had enough. It's a good idea to arrive a day before the sprint starts and leave a day later. In the middle of the sprint there usually is a break day and it's usually ok to take half-days off if you feel like it.

Good to Know

Sweden is not part of the Euro zone. One SEK (krona in singular, kronor in plural) is roughly 1/10th of a Euro (9.36 SEK to 1 Euro).

The venue is central in Gothenburg. There is a large selection of places to get food nearby, from edible-and-cheap to outstanding. We often cook meals together, so let us know if you have any food allergies, dislikes, or special requirements.

Sweden uses the same kind of plugs as Germany. 230V AC.

The Sprint will be held the week following Easter. This means, as always, that Gothcon will be taking place the weekend before (Easter weekend). Gothcon, now in its 35 year, is the largest European game players conference. Some of you may be interested in arriving early for the board games. The conference site is only in Swedish, alas. You don't need to register in advance unless you are planning to host a tournament, (and it's too late for that anyway).

Getting Here

If are coming train, you will arrive at the Central Station. It is about 12 blocks to the site from there, or you can take a tram.

There are two airports which are local to Göteborg, Landvetter (the main one) and Gothenburg City Airport (where some budget airlines fly). If you arrive at Landvetter the airport bus stops right downtown at Elite Park Avenyn Hotel which is the second stop, 4 blocks from the Sprint site, as well as the end of the line, which is the Central Station. If you arrive at Gothenburg City Airport take the bus to the end of the line. You will be at the Central Station.

You can also arrive by ferry, from either Kiel in Germany or Frederikshavn in Denmark.

Who's Coming?

If you'd like to come, please let us know when you will be arriving and leaving, as well as letting us know your interests We'll keep a list of people which we'll update (which you can do so yourself if you have bitbucket pypy commit rights).

"e.g. tweaking the Python or JavaScript interpreter"

Are you implying that PyPy has a JavaScript interpreter now?

It had one since a few years. It's not complete though: https://bitbucket.org/pypy/lang-js/overview

Controlling the Tracing of an Interpreter With Hints, Part 4: Benchmarks

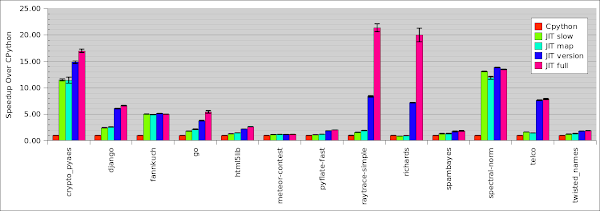

This is part 4 and the final part of the series on how to speed up an interpreter written with PyPy by adding JIT hints to the interpreter. Part 1 described how to control the extent of tracing. Part 2 described how to influence the optimizer with promotion and pure functions. Part 3 described a simple object model and how it can be optimized by doing small rewrites. In this (short) post I present some benchmarks.

Benchmarks

For the benchmarks I ran a subset of the benchmarks on https://speed.pypy.org with CPython and four different executables of PyPy's Python interpreter (all with a JIT). The executables contain all combinations of enabling maps (which make instance attributes fast) and type versions (which makes method lookup fast).

- pypy-slow: contains neither maps nor type versions.

- pypy-map: contains maps but not type versions.

- pypy-version: contains type versions but not maps.

- pypy-full: contains both maps and type versions

The results are as follows:

The graph shows the speedup over CPython's numbers. The results are quite interesting. Maps by themselves do not speed up much over the bare JIT, whereas typed versions alone improve on the JIT baseline in many cases. However, maps are not useless. In combination with type versions they add a nice improvement over just type versions in a number of benchmarks (most notably raytrace-simple and richards but also in crypto-pyaes, django and go).

It's clear that type versions can be arbitrarily effective. A method lookup on a class can be arbitrarily slow, if the inheritance hierarchy becomes deeper and deeper. The full lookup is replaced by one promotion if type versions are enabled.

Maps on the other hand always replace one dict lookup with one promotion. Since dict lookups are already very fast, this by itself does not lead to a gigantic improvement. Only in combination with type versions do they show their full potential.

It's not clear to me why version + maps combine so well. Maps should effectively eliminate lookups on the instance dict and versions eliminate lookups on the class dict. Both versions would seem to eliminate different classes of lookups, so I'm not seeing why we have dramatic improvement when using them together.

I'm not an expert at CPU architecture, but ISTM eliminating both can eliminate a large number of memory reads which would help with pipelining and other very low level optimizations.

@Winston: I actually have no clue :-). The numbers are hard to deny though. I plan to stare at the traces a bit next week, can comment here if I find something interesting.

@Winston: ok, I probably found out. Your reasoning is too simple because usually you do several lookups on the same object in a row. Every lookup looks first in the class, then in the instance. So it looks a bit like this:

lookup name1 in obj.__class__

lookup name1 in obj.__dict__

lookup name2 in obj.__class__

lookup name2 in obj.__dict__

lookup name2 in obj.__class__

lookup name2 in obj.__dict__

when using maps, every lookup in the dict is simply reading the map, promoting it and then a read. after the promotion of the map, the instance's layout is fully known. however, if type versions are disabled, the lookups in the class are complex operations that are opaque to the JIT. Therefore the JIT assumes they can change the layout and thus the map of the object.

If you also enable type versions, then the class lookups are understandable to the JIT. therefore the JIT can see that the class lookup didn't change the layout of the class. This means that after the first instance lookup, the following instance lookups cost nothing at all.

I think an important improvement brought about by maps is the memory footprint reduction.

It won't matter all the time, but it makes all classes as space-efficient as if they used __slots__, all automagically, which is no small thing.

For programs that handle lots of small objects, this can really make a difference, in memory consumption and speed (less memory to shuffle around will invariably be faster)

Perhaps the benchmark suite doesn't have enough of those cases.

@cfbolz I think one reason why maps+version tags are fast is because we lack jit.unroll_safe on several lookup functions when version tags are disabled. Marking them as unrollable would speed things up.

The reasoning behind this is that old style classes which have maps, but no version tags are much faster than new style classes with version tags disabled.

Thanks for taking the time to answer my query.

The use of class versions eliminates the opaque function being called because the JIT knows the return will be constant. This allows optimizations to work correctly. But this makes me wonder how much of the improvement is due to class versions and how much is due to lack of opaqueness.

At any rate, I always find the posts on this blog very interesting. It definitely some neat stuff you are doing here.

@fijal I thought old-style classes had celldicts? That's yet another thing, but your point is still correct.

I'd love to see a blog post about conventions to favor or avoid while writing python code to best take advantage of these excellent features. For example, your previous post implied something like this would be faster than changing the class directly:

class Counter(object):

....def __init__(self):

........self.count = 0

....def increment(self):

........self.count += 1

class Many(object):

....counter = Counter()

....def __init__(self):

........self.counter.increment()

Granted, it would be preferable, from a coding standpoint, to just use a simple class attribute, but the adaptations that would likely work best for the pypy JIT seem like far smaller divergences from the 'ideal' python than many other lengths people go to when coding for speed, particularly compared to something like cython.

A thank you to the PSF

This year's PyCon was an incredible time; several members of the PyPy team were there, and we'll be blogging more about our experiences in the coming days. However, we quickly wanted to extend a thank you to the Python Software Foundation (PSF).

As you may have heard, on Friday morning at PyCon Jesse Noller handed the PyPy team a check for $10,000, on behalf of the PSF. This was in recognition of our success over the past few years in bringing PyPy from a research project to a fast, compliant, production-ready Python implementation, and to allow us to continue our work on making it faster and more up-to-date with upstream version changes.

Beyond the large check, we're grateful for the endorsement this represents, not only of our work on PyPy, but also of all alternatve Python VMs. The PSF has shifted its focus from representing just CPython to representing the Python Language, reguardless of its implementation, something we are very appreciative of.

From left to right, PyPy people present at PyCon 2011: Maciej Fijałkowski, Armin Rigo, Alex Gaynor, Laura Creighton and Jacob Hallén

Thank you, PSF.

Congratulations! It's great to see the PSF embracing the broader Python ecosystem.

It's nice to be able to offer this support as an indication that we aren't just the CPython Software Foundation. It is a well-deserved award, and we know it will be put to good use.

Controlling the Tracing of an Interpreter With Hints, Part 3: Putting it All Together

This is part 3 of the series on how to speed up an interpreter written with PyPy by adding JIT hints to the interpreter. Part 1 described how to control the extent of tracing. Part 2 described how to influence the optimizer with promotion and pure functions. In this post I describe a worked-out example of a small object model for a dynamic language and how to make it efficient using the hints described in the previous posts.

A Simple Object Model

To implement a dynamic language efficiently, the operations on its objects need to be fast. Most dynamic languages have object models that are made by using dictionaries everywhere. Let's look at an example of how the JIT can be made to optimize such operations.

For the purpose of this blog post we will use a very simple and bare-bones object model that just supports very simple classes and instances, without any inheritance or any fancy features. The model has classes, which contain methods. Instances have a class. Instances have their own attributes. When looking up an attribute on an instance, the instances attributes are searched. If the attribute is not found there, the class' attributes are searched.

To implement this object model, we could use the following RPython code as part of the interpreter source code:

class Class(object):

def __init__(self, name):

self.name = name

self.methods = {}

def instantiate(self):

return Instance(self)

def find_method(self, name):

result = self.methods.get(name)

if result is not None:

return result

raise AttributeError(name)

def change_method(self, name, value):

self.methods[name] = value

class Instance(object):

def __init__(self, cls):

self.cls = cls

self.attributes = {}

def getfield(self, name):

result = self.attributes.get(name)

if result is not None:

return result

raise AttributeError(name)

def write_attribute(self, name, value):

self.attributes[name] = value

def getattr(self, name):

try:

return self.getfield(name)

except AttributeError:

return self.cls.find_method(name)

In this straightforward implementation the methods and attributes are just stored in dictionaries on the classes/instances. While this object model is very simple it already contains all the hard parts of Python's object model. Both instances and classes can have arbitrary fields, and they are changeable at any time. Moreover, instances can change their class after they have been created.

When using this object model in an interpreter, a huge amount of time will be spent doing lookups in these dictionaries. To make the language efficient using a tracing JIT, we need to find a way to get rid of these dictionary lookups somehow.

Let's assume we trace through code that sums three attributes, such as:

inst.getattr("a") + inst.getattr("b") + inst.getattr("c")

The trace could look like this:

# inst.getattr("a")

attributes1 = inst.attributes

result1 = dict.get(attributes1, "a")

guard(result1 is not None)

# inst.getattr("b")

attributes2 = inst.attributes

v1 = dict.get(attributes2, "b")

guard(v1 is None)

cls1 = inst.cls

methods1 = cls.methods

result2 = dict.get(methods1, "b")

guard(result2 is not None)

v2 = result1 + result2

# inst.getattr("c")

attributes3 = inst.attributes

v3 = dict.get(attributes3, "c")

guard(v3 is None)

cls1 = inst.cls

methods2 = cls.methods

result3 = dict.get(methods2, "c")

guard(result3 is not None)

v4 = v2 + result3

return(v4)

In this example, the attribute a is found on the instance, but the attributes b and c are found on the class. The trace indeed contains five calls to dict.get, which is slow.

Making Instance Attributes Faster Using Maps

The first step in making getattr faster in our object model is to optimize away the dictionary lookups on the instances. The hints we have looked at in the two earlier blog posts don't seem to help with the current object model. There is no pure function to be seen, and the instance is not a candidate for promotion, because there tend to be many instances.

This is a common problem when trying to apply hints. Often, the interpreter needs a small rewrite to expose the pure functions and nearly-constant objects that are implicitly there. In the case of instance fields this rewrite is not entirely obvious. The basic idea is as follows. In theory instances can have arbitrary fields. In practice however many instances share their layout (i.e. their set of keys) with many other instances.

Therefore it makes sense to factor the layout information out of the instance implementation into a shared object. This shared layout object is called a map. Maps are an old idea that comes originally from the SELF language. They are also used by many JavaScript implementations such as V8. I've written about maps before, so I won't explain them fully again.

The rewritten Instance class using maps looks like this:

class Map(object):

def __init__(self):

self.attribute_indexes = {}

self.other_maps = {}

@purefunction

def getindex(self, name):

return self.attribute_indexes.get(name, -1)

@purefunction

def new_map_with_additional_attribute(self, name):

if name not in self.other_maps:

newmap = Map()

newmap.attribute_indexes.update(self.attribute_indexes)

newmap.attribute_indexes[name] = len(self.attribute_indexes)

self.other_maps[name] = newmap

return self.other_maps[name]

EMPTY_MAP = Map()

class Instance(object):

def __init__(self, cls):

self.cls = cls

self.map = EMPTY_MAP

self.storage = []

def getfield(self, name):

map = hint(self.map, promote=True)

index = map.getindex(name)

if index != -1:

return self.storage[index]

raise AttributeError(name)

def write_attribute(self, name, value):

map = hint(self.map, promote=True)

index = map.getindex(name)

if index != -1:

self.storage[index] = value

return

self.map = map.new_map_with_additional_attribute(name)

self.storage.append(value)

def getattr(self, name):

try:

return self.getfield(name)

except AttributeError:

return self.cls.find_method(name)

Instances no longer use dictionaries to store their fields. Instead, they have a reference to a map, which maps field names to indexes into a storage list. The storage list contains the actual field values. The maps are shared between objects with the same layout. Therefore they have to be immutable, which means that their getindex method is a pure function. When a new attribute is added to an instance, a new map needs to be chosen, which is done with the new_map_with_additional_attribute method on the previous map. Now that we have introduced maps, it is safe to promote the map everywhere, because we assume that the number of different instance layouts is small.

With this changed instance implementation, the trace we had above changes to the following, where 0xb74af4a8 is the memory address of the Map instance that has been promoted:

# inst.getattr("a")

map1 = inst.map

guard(map1 == 0xb74af4a8)

index1 = Map.getindex(map1, "a")

guard(index1 != -1)

storage1 = inst.storage

result1 = storage1[index1]

# inst.getattr("b")

map2 = inst.map

guard(map2 == 0xb74af4a8)

index2 = Map.getindex(map2, "b")

guard(index2 == -1)

cls1 = inst.cls

methods1 = cls.methods

result2 = dict.get(methods1, "b")

guard(result2 is not None)

v2 = result1 + result2

# inst.getattr("c")

map3 = inst.map

guard(map3 == 0xb74af4a8)

index3 = Map.getindex(map3, "c")

guard(index3 == -1)

cls1 = inst.cls

methods2 = cls.methods

result3 = dict.get(methods2, "c")

guard(result3 is not None)

v4 = v2 + result3

return(v4)

The calls to Map.getindex can be optimized away, because they are calls to a pure function and they have constant arguments. That means that index1/2/3 are constant and the guards on them can be removed. All but the first guard on the map will be optimized away too, because the map cannot have changed in between. The optimized trace looks like this:

# inst.getattr("a")

map1 = inst.map

guard(map1 == 0xb74af4a8)

storage1 = inst.storage

result1 = storage1[0]

# inst.getattr("b")

cls1 = inst.cls

methods1 = cls1.methods

result2 = dict.get(methods1, "b")

guard(result2 is not None)

v2 = result1 + result2

# inst.getattr("c")

cls2 = inst.cls

methods2 = cls2.methods

result3 = dict.get(methods2, "c")

guard(result3 is not None)

v4 = v2 + result3

return(v4)

The index 0 that is used to read out of the storage array is the result of the constant-folded getindex call. This trace is already much better than the original one. Now we are down from five dictionary lookups to just two.

Versioning of Classes

Instances were optimized making the assumption that the total number of Instance layouts is small compared to the number of instances. For classes we will make an even stronger assumption. We simply assume that it is rare for classes to change at all. This is not totally reasonable (sometimes classes contain counters or similar things) but for this simple example it is good enough.

What we would really like is if the Class.find_method method were pure. But it cannot be, because it is always possible to change the class itself. Every time the class changes, find_method can potentially return a new value.

Therefore, we give every class a version number, which is increased every time a class gets changed (i.e., the content of the methods dictionary changes). This means that the result of methods.get() for a given (name, version) pair will always be the same, i.e. it is a pure operation. To help the JIT to detect this case, we factor it out in a helper method which is explicitly marked as @purefunction. The refactored Class looks like this:

class VersionTag(object):

pass

class Class(object):

def __init__(self, name):

self.name = name

self.methods = {}

self.version = VersionTag()

def find_method(self, name):

self = hint(self, promote=True)

version = hint(self.version, promote=True)

result = self._find_method(name, version)

if result is not None:

return result

raise AttributeError(name)

@purefunction

def _find_method(self, name, version):

return self.methods.get(name)

def change_method(self, name, value):

self.methods[name] = value

self.version = VersionTag()

What is interesting here is that _find_method takes the version argument but it does not use it at all. Its only purpose is to make the call pure (because when the version number changes, the result of the call might be different than the previous one).

The trace with this new class implementation looks like this:

# inst.getattr("a")

map1 = inst.map

guard(map1 == 0xb74af4a8)

index1 = Map.getindex(map1, "a")

guard(index1 != -1)

storage1 = inst.storage

result1 = storage1[index1]

# inst.getattr("b")

map2 = inst.map

guard(map2 == 0xb74af4a8)

index2 = Map.getindex(map2, "b")

guard(index2 == -1)

cls1 = inst.cls

guard(cls1 == 0xb7aaaaf8)

version1 = cls1.version

guard(version1 == 0xb7bbbb18)

result2 = Class._find_method(cls, "b", version1)

guard(result2 is not None)

v2 = result1 + result2

# inst.getattr("c")

map3 = inst.map

guard(map3 == 0xb74af4a8)

index3 = Map.getindex(map3, "c")

guard(index3 == -1)

cls2 = inst.cls

guard(cls2 == 0xb7aaaaf8)

version2 = cls2.version

guard(version2 == 0xb7bbbb18)

result3 = Class._find_method(cls, "c", version2)

guard(result3 is not None)

v4 = v2 + result3

return(v4)

The calls to Class._find_method can now be optimized away, also the promotion of the class and the version, except for the first one. The final optimized trace looks like this:

# inst.getattr("a")

map1 = inst.map

guard(map1 == 0xb74af4a8)

storage1 = inst.storage

result1 = storage1[0]

# inst.getattr("b")

cls1 = inst.cls

guard(cls1 == 0xb7aaaaf8)

version1 = cls1.version

guard(version1 == 0xb7bbbb18)

v2 = result1 + 41

# inst.getattr("c")

v4 = v2 + 17

return(v4)

The constants 41 and 17 are the results of the folding of the _find_method` calls. This final trace is now very good. It no longer performs any dictionary lookups. Instead it contains several guards. The first guard checks that the map is still the same. This guard will fail if the same code is executed with an instance that has another layout. The second guard checks that the class of inst is still the same. It will fail if trace is executed with an instance of another class. The third guard checks that the class did not change since the trace was produced. It will fail if somebody calls the change_method method on the class.

Real-World Considerations

The techniques used above for the simple object model are used for the object model of PyPy's Python interpreter too. Since Python's object model is considerably more complex, some additional work needs to be done.

The first problem that needs to be solved is that Python supports (multiple) inheritance. Therefore looking up a method in a class needs to consider the whole method resolution order. This makes the versioning of classes more complex. If a class is changed its version changes. At the same time, the versions of all the classes inheriting from it need to be changed as well, recursively. This makes class changes expensive, but they should be rare. On the other hand, a method lookup in a complex class hierarchy is as optimized in the trace as in our object model here.

A downside of the versioning of classes that we haven't yet fixed in PyPy, is that some classes do change a lot. An example would be a class that keeps a counter of how many instances have been created so far. This is very slow right now, but we have ideas about how to fix it in the future.

Another optimization is that in practice the shape of an instance is correlated with its class. In our code above, we allow both to vary independently. In PyPy's Python interpreter we act somewhat more cleverly. The class of an instance is not stored on the instance itself, but on the map. This means that we get one fewer promotion (and thus one fewer guard) in the trace, because the class doesn't need to be promoted after the map has been.

More General Patterns

The techniques we used above to make instance and class lookups faster are applicable in more general cases than the one we developed them for. A more abstract view of maps is that of splitting a data-structure into a part that changes slowly, and a part that changes quickly. In the concrete example of maps we split the original dictionary into the map (the slow-changing part) and the storage array (the quick-changing part). All the computation on the slow-changing part can be constant-folded during tracing so that only the manipulation of the quick-changing part remains.

Similarly, versions can be used to constant-fold arbitrary functions of large data structures. The version needs to be updated carefully every time the result of this function can change. Therefore this is useful only if the data structure is expected to change slowly.

Conclusion

In this post I showed how to use purefunction and promote to make a small but still relevant dynamic object model no longer use any dictionary lookups after tracing. Instead a number of guards are inserted into the trace to check whether the assumptions about the objects are still true. This makes operations on objects seriously faster. I plan to write another small post that shows the speed benefits for PyPy's Python interpreter for exactly these operations.

Very clever indeed.

I think and additional speedup can be achieved

by using a technique from smalltalk intrepters: Method lookup cache.

The cache is organized so that function

cache(class, method) returns a pointer to the method.

The early Smalltalk implementors reported pretty spectacular speedups when this cache was implemented.

@vadiml: the jit+version tags already acts as method lookup cache for jited code

it basically inlines lookup(class, method)

I'm wondering about VersionTag(). The guard you've shown looks at its memory address. Doesn't PyPy use compacting garbage collectors? I seem to recall that from earlier posts about the cost of id().

Hmm. And now I think I know why twisted isn't any faster in pypy. I remember looking at the source a few years ago and being horrified to see that they were changing class methods during runtime. I guessed to avoid one layer of dispatch in state machines. Anyway, it's an "optimisation" that will hurt pypy.

@Marius: You are right. The trace is a bit simplified, in practice there is an indirection so that if the GC moves the object, the trace still works.

@Anonymous: can you find that place in twisted? would be very interesting to see. Also it probably means we should implement these ideas about making changing classes not quite so inefficient.

Controlling the Tracing of an Interpreter With Hints, Part 2: Controlling Optimization

This is part 2 of a series on how to speed up an interpreter written with PyPy by adding JIT hints to the interpreter. Part 1 described how to control the extent of tracing. In this post I will describe how to add hints that influence the optimizer. If applied correctly these techniques can give really big speedups by pre-computing parts of what happens at runtime. On the other hand, if applied incorrectly they might lead to code bloat, thus making the resulting program actually slower.

Background

Before sending the trace to the backend to produce actual machine code, it is optimized. The optimizer applies a number of techniques to remove or reduce the number of operations: most of these are well known compiler optimization techniques, with the difference that it is easier to apply them in a tracing JIT because it only has to deal with linear traces. Among the techniques:

- constant folding

- common subexpression elimination

- allocation removal, as described in the paper that I recently presented at PEPM

- store/load propagation

- loop invariant code motion

In some places it turns out that if the interpreter author rewrites some parts of the interpreter with these optimizations in mind the traces that are produced by the optimizer can be vastly improved.

In this post I will describe two hints that allow the interpreter author to increase the optimization opportunities for constant folding. For constant folding to work, two conditions need to be met:

- the arguments of an operation actually need to all be constant, i.e. statically known by the optimizer

- the operation needs to be pure, i.e. always yield the same result given the same arguments.

The PyPy JIT generator automatically detects the majority of these conditions. However, for the cases in which the automatic detection does not work, the interpreter author can apply hints to improve the optimization opportunities. There is one kind of hint for both of the conditions above.

Note: These hints are written by an interpreter developer and applied to the RPython source of the interpreter. Normal Python users will never see them.

Where Do All the Constants Come From

It is worth clarifying what is a "constant" in this context. A variable of the trace is said to be constant if its value is statically known by the optimizer.

The simplest example of constants are literal values. For example, if in the RPython source code we have a line like y = x + 1, the second operand will be a constant in the trace.

However, the optimizer can statically know the value of a variable even if it is not a constant in the original source code. For example, consider the following fragment of RPython code:

if x == 4:

y = y + x

If the fragment is traced with x being 4, the following trace is produced:

guard(x == 4) y = y + x

In the trace above, the value of x is statically known thanks to the guard. Remember that a guard is a runtime check. The above trace will run to completion when x == 4. If the check fails, execution of the trace is stopped and the interpreter continues to run.

There are cases in which it is useful to turn an arbitrary variable into a constant value. This process is called promotion and it is an old idea in partial evaluation (it's called "the trick" there). Promotion is also heavily used by Psyco and by all older versions of PyPy's JIT. Promotion is a technique that only works well in JIT compilers, in static compilers it is significantly less applicable.

Promotion is essentially a tool for trace specialization. In some places in the interpreter it would be very useful if a variable were constant, even though it could have different values in practice. In such a place, promotion is used. The typical reason to do that is if there is a lot of computation depending on the value of that variable.

Let's make this more concrete. If we trace a call to the following function:

def f1(x, y):

z = x * 2 + 1

return z + y

We get a trace that looks like this:

v1 = x * 2 z = v1 + 1 v2 = z + y return(v2)

Observe how the first two operations could be constant-folded if the value of x were known. Let's assume that the value of x can vary, but does so rarely, i.e. only takes a few different values at runtime. If this is the case, we can add a hint to promote x, like this:

def f2(x, y):

x = hint(x, promote=True)

z = x * 2 + 1

return z + y

The meaning of this hint is that the tracer should pretend that x is a constant in the code that follows. When just running the code, the function has no effect, as it simply returns its first argument. When tracing, some extra work is done. Let's assume that this changed function is traced with the arguments 4 and 8. The trace will be the same, except for one operation at the beginning:

guard(x == 4) v1 = x * 2 z = v1 + 1 v2 = z + y return(v2)

The promotion is turned into a guard operation in the trace. The guard captures the value of x as it was at runtime. From the point of view of the optimizer, this guard is not any different than the one produced by the if statement in the example above. After the guard, the rest of the trace can assume that x is equal to 4, meaning that the optimizer will turn this trace into:

guard(x == 4) v2 = 9 + y return(v2)

Notice how the first two arithmetic operations were constant folded. The hope is that the guard is executed quicker than the multiplication and the addition that was now optimized away.

If this trace is executed with values of x other than 4, the guard will fail, and execution will continue in the interpreter. If the guard fails often enough, a new trace will be started from the guard. This other trace will capture a different value of x. If it is e.g. 2, then the optimized trace looks like this:

guard(x == 2) v2 = 5 + y return(v2)

This new trace will be attached to the guard instruction of the first trace. If x takes on even more values, a new trace will eventually be made for all of them, linking them into a chain. This is clearly not desirable, so we should promote only variables that don't vary much. However, adding a promotion hint will never produce wrong results. It might just lead to too much assembler code.

Promoting integers, as in the examples above, is not used that often. However, the internals of dynamic language interpreters often have values that are variable but vary little in the context of parts of a user program. An example would be the types of variables in a user function. Even though in principle the argument to a Python function could be any Python type, in practise the argument types tend to not vary much. Therefore it is possible to promote the types. In the next blog post I will give a complete example for how this works.

Declaring New Pure Operations

In the last section we saw a way to turn arbitrary variables into constants. All pure operations on these constants can be constant-folded. This works great for constant folding of simple types, e.g. integers. Unfortunately, in the context of an interpreter for a dynamic language, most operations actually manipulate objects, not simple types. The operations on objects are often not pure and might even have side-effects. If one reads a field out of a constant reference to an object this cannot necessarily be folded away because the object can be mutated. Therefore, another hint is needed.

As an example, take the following class:

class A(object):

def __init__(self, x, y):

self.x = x

self.y = y

def f(self, val):

self.y = self.compute() + val

def compute(self):

return self.x * 2 + 1

Tracing the call a.f(10) of some instance of A yields the following trace (note how the call to compute is inlined):

x = a.x v1 = x * 2 v2 = v1 + 1 v3 = v2 + val a.y = v3

In this case, adding a promote of self in the f method to get rid of the computation of the first few operations does not help. Even if a is a constant reference to an object, reading the x field does not necessarily always yield the same value. To solve this problem, there is another annotation, which lets the interpreter author communicate invariants to the optimizer. In this case, she could decide that the x field of instances of A is immutable, and therefore compute is a pure function. To communicate this, there is a purefunction decorator. If the code in compute should be constant-folded away, we would change the class as follows:

class A(object):

def __init__(self, x, y):

self.x = x

self.y = y

def f(self, val):

self = hint(self, promote=True)

self.y = self.compute() + val

@purefunction

def compute(self):

return self.x * 2 + 1

Now the trace will look like this:

guard(a == 0xb73984a8) v1 = compute(a) v2 = v1 + val a.y = v2

Here, 0xb73984a8 is the address of the instance of A that was used during tracing. The call to compute is not inlined, so that the optimizer has a chance to see it. Since compute function is marked as pure, and its argument is a constant reference, the call will be removed by the optimizer. The final trace looks like this:

guard(a == 0xb73984a8) v2 = 9 + val a.y = v2

(assuming that the x field's value is 4).

On the one hand, the purefunction annotation is very powerful. It can be used to constant-fold arbitrary parts of the computation in the interpreter. However, the annotation also gives you ample opportunity to mess things up. If a function is annotated to be pure, but is not really, the optimizer can produce subtly wrong code. Therefore, a lot of care has to be taken when using this annotation.

Observably Pure Functions

Why can't we simply write an analysis to find out that the x fields of the A instances is immutable and deduce that compute is a pure function, since it only reads the x field and does not have side effects? This might be possible in this particular case, but in practice the functions that are annotate with the purefunction decorator are usually more complex. The easiest example for this is that of a function that uses memoization to cache its results. If you analyze this function, it looks like the function has side effects, because it changes the memoizing dictionary. However, because this side effect is not externally visible, the function from the outside is pure. This is a property that is not easily detectable by analysis. Therefore, the purity of this function needs to be annotated.

Immutable Fields

One of the most common cases of pure functions is reading immutable values out of objects. Since this is so common, we have special syntactic sugar for it. A RPython class can have a class attribute _immutable_fields_ set to a list of strings, listing the fields that cannot be changed. This is equivalent to using getters and annotating them with purefunction.

Conclusion

In this blog post I explained two more hints that can be used in the source code of the interpreter. They are used to influence what the optimizer does with the trace. I realize the examples given here are a bit too small, in the next installment I will give a worked-out example that puts all the pieces together.

Again a very interesting post. I would like some precisions for one sentence:

"If x takes on even more values, a new trace will eventually be made for all of them, linking them into a chain."

Does it mean they are all tried in sequence, or is there some dispatch mechanism? If there isn't, wouldn't it be beneficial to have one in place (probably using a hash table of some sort) when there is more than a few values? Or is the number of "generated branches" never supposed to be large enough to make such an approach worthwile?

Controlling the Tracing of an Interpreter With Hints, Part 1: Controlling the Extent of Tracing

The question I was asked most often during my recent US trip was how exactly the hints work that interpreter authors can use to improve the execution speed of the programs running on their interpreters. Since those hints are not really documented all that well, I decided to write blog posts about them. This is the first one.

Background

First, let's recap some basics: PyPy's approach to implementing dynamic languages is to write an interpreter for the language in RPython. This interpreter can be translated to C and then further to machine code. The interpreter consists of code in the form of a large number of generated C functions and some data. Similarly, the user program consists of functions in the language the interpreter executes.

As was explained in a blog post and a paper two years ago, PyPy's JIT is a meta-tracer. Since we want to re-use our tracer for a variety of languages, we don't trace the execution of the user program, but instead trace the execution of the interpreter that is running the program. This means that the traces don't contain the bytecodes of the language in question, but RPython-level operations that the interpreter did to execute the program.

On the other hand, the loops that are traced by the tracer are the loops in the user program. This means that the tracer stops tracing after one iteration of the loop in the user function that is being considered. At this point, it can have traced many iterations of the interpreter main loop.

Here's a diagram of this process:

On the left you see the levels of execution. The CPU executes the binary of PyPy's Python interpreter, which consists of RPython functions that have been compiled first to C, then to machine code. Some of these functions contain loops, others don't. The interpreter runs a Python program written by a programmer (the user). If the tracer is used, it traces operations on the level of the interpreter. However, the extent of the trace is determined by the loops in the user program.

How Far Should Tracing Go

When the tracer encounters a function call at the interpreter level, e.g. the interpreter main loop calling a helper function, it can do one of two things:

- it can trace into the helper function, effectively inlining it into the trace.

- it can not trace into the function and instead record a call to that function as an operation in the trace. Such a call operation in the trace is sometimes called residual call.

As a default, the tracer will try to trace into the helper because that will give more information to the optimizer, allowing it to do a better job. This is particularly important for the allocation removal optimization, because if a freshly allocated object is passed as an argument to a residual call, its allocation cannot be optimized away.