Improving Memory Behaviour to Make Self-Hosted PyPy Translations Practical

In our previous blog post, we talked about how fast PyPy can translate itself compared to CPython. However, the price to pay for the 2x speedup was a huge amount of memory: so much that a standard -Ojit compilation could not be completed on 32-bit, because it required more than the 4 GB of RAM that are addressable on that platform. On 64-bit, it consumed 8.3 GB of RAM instead of the 2.3 GB needed by CPython.

This behavior was mainly caused by the JIT, because at the time we wrote the blog post the generated assembler was kept alive forever, together with some big data structures needed to execute it.

In the past two weeks Anto and Armin attacked the issue in the jit-free branch, which has been recently merged to trunk. The branch solves several issues. The main idea of the branch is that if a loop has not been executed for a certain amount of time (controlled by the new loop_longevity JIT parameter) we consider it "old" and no longer needed, thus we deallocate it.
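To make the idea concrete, here is a toy model of loop aging in plain Python. It is only a sketch: the `LoopRegistry` class, its method names and the use of a simple generation counter as the notion of "time" are assumptions made for illustration, not the actual JIT code. Only the `loop_longevity` parameter name comes from the branch.

```python
class LoopRegistry:
    """Toy model (hypothetical): each loop remembers when it last ran."""

    def __init__(self, loop_longevity):
        # Number of generations a loop may stay idle before it is freed.
        self.loop_longevity = loop_longevity
        self.current_generation = 0
        self.last_used = {}  # loop name -> generation of its last execution

    def run_loop(self, name):
        # Executing (or first compiling) a loop marks it as fresh.
        self.last_used[name] = self.current_generation

    def next_generation(self):
        # Advance the clock, then drop every loop that has been idle too long.
        self.current_generation += 1
        limit = self.current_generation - self.loop_longevity
        freed = [name for name, gen in self.last_used.items() if gen < limit]
        for name in freed:
            del self.last_used[name]
        return sorted(freed)


registry = LoopRegistry(loop_longevity=2)
registry.run_loop("hot")
registry.run_loop("cold")

freed_per_step = []
for _ in range(4):
    registry.run_loop("hot")  # "hot" keeps being executed, "cold" never again
    freed_per_step.append(registry.next_generation())
```

In this model the "cold" loop is dropped once it has been idle for longer than `loop_longevity` generations, while "hot" survives because every execution refreshes it. An infinite longevity would correspond to never freeing anything.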

(In the process of doing this, we also discovered and fixed an oversight in the implementation of generators, which led to generators being freed only very slowly.)

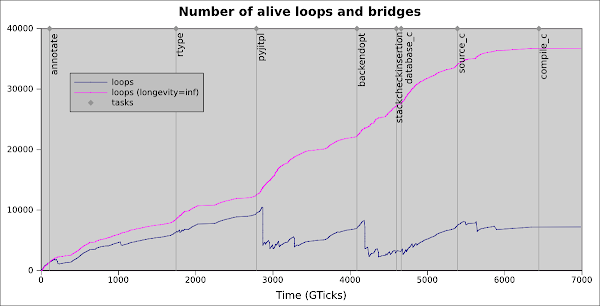

To understand the freeing of loops some more, let's look at how many loops are actually created during a translation. The purple line in the following graph shows how many loops (and bridges) are alive at any point in time with an infinite longevity, which is equivalent to the situation we had before the jit-free branch. By contrast, the blue line shows the number of loops that you get in the current trunk: the difference is evident, as now we never have more than 10000 loops alive, while previously we got up to about 37000. The time on the X axis is expressed in "Giga Ticks", where a tick is the value read out of the Time Stamp Counter of the CPU.

The grey vertical bars represent the beginning of each phase of the translation:

- annotate performs control flow graph construction and type inference.

- rtype lowers the abstraction level of the control flow graphs with types to that of C.

- pyjitpl constructs the JIT.

- backendopt optimizes the control flow graphs.

- stackcheckinsertion finds the places in the call graph that can overflow the C stack and inserts checks that raise an exception instead.

- database_c produces a database of all the objects the C code will have to know about.

- source_c produces the C source code.

- compile_c calls the compiler to produce the executable.

You can see nicely how the number of alive graphs drops shortly after the beginning of a new phase.

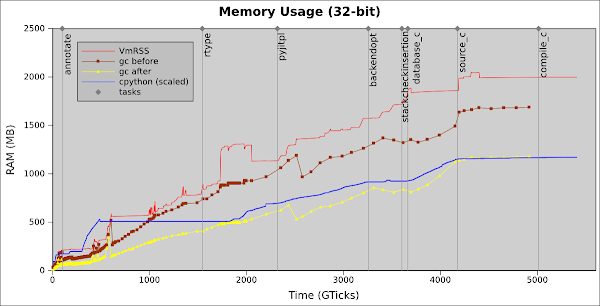

Those two fixes, freeing loops and generators, improve the memory usage greatly: now, translating PyPy on PyPy on 32-bit consumes 2 GB of RAM, while on CPython it consumes 1.1 GB. This result can even be improved somewhat, because we are not actually freeing the assembler code itself, but only the large data structures around it; we can consider it as a residual memory leak of around 150 MB in this case. This will be fixed in the jit-free-asm branch.

The following graph shows the memory usage in more detail:

- the blue line (cpython-scaled) shows the total amount of RAM that the OS allocates for CPython. Note that the X axis (the time) has been scaled down so that it spans as much as the PyPy one, to ease the comparison. Actually, CPython took more than twice as much time as PyPy to complete the translation

- the red line (VmRss) shows the total amount of RAM that the OS allocates for PyPy: it includes both the memory directly handled by our GC and the "raw memory" that we need to allocate for other tasks, such as the assembly code generated by the JIT

- the brown line (gc-before) shows how much memory is used by the GC before each major collection

- the yellow line (gc-after) shows how much memory is used by the GC after each major collection: this represents the amount of memory which is actually needed to hold our Python objects. The difference between gc-before and gc-after (the GC delta) is the amount of memory that the GC uses before triggering a new major collection

By comparing gc-after and cpython-scaled, we can see that PyPy uses roughly the same amount of memory as CPython for storing the application objects (due to reference counting, the memory usage in CPython is always very close to the memory that is actually necessary). The extra memory used by PyPy is due to the GC delta, to the machine code generated by the JIT, and probably to some other external effects (such as memory fragmentation).

Note that the GC delta can be set arbitrarily low, but the cost is to have more frequent major collections and thus a higher run-time overhead. (This setting is another recent addition: the default value depends on the actual RAM on your computer. It probably works to translate if your computer has precisely 2 GB, because in this case the GC delta, and thus the total memory usage, will be somewhat lower than reported here.) The same is true for the memory needed by the JIT, which can be reduced by telling the JIT to compile less often or to discard old loops more frequently. As often happens in computer science, there is a trade-off between space and time, and currently for this particular example PyPy runs twice as fast as CPython by doubling the memory usage. We hope to improve even more on this trade-off.
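The space/time trade-off behind the GC delta can be illustrated with a deliberately simplified simulation. This is a sketch under strong assumptions, not a model of the real GC: the live data is held constant, memory is allocated 1 MB at a time, and a major collection is triggered as soon as the garbage reaches the delta. The function name and all numbers are hypothetical.

```python
def simulate_gc(total_allocated_mb, live_mb, gc_delta_mb):
    """Return (number of major collections, peak heap size in MB).

    Hypothetical model: 'live_mb' of data always survives, everything
    else allocated since the last major collection is garbage.
    """
    heap = live_mb
    peak = heap
    collections = 0
    for _ in range(total_allocated_mb):
        heap += 1  # allocate 1 MB of short-lived data
        peak = max(peak, heap)
        if heap - live_mb >= gc_delta_mb:
            heap = live_mb  # a major collection frees all the garbage
            collections += 1
    return collections, peak


large_delta = simulate_gc(10000, live_mb=500, gc_delta_mb=500)
small_delta = simulate_gc(10000, live_mb=500, gc_delta_mb=100)
```

With these made-up inputs, the large delta needs 20 major collections and peaks at 1000 MB, while the small delta peaks at only 600 MB but needs 100 major collections: less memory, more time spent collecting.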

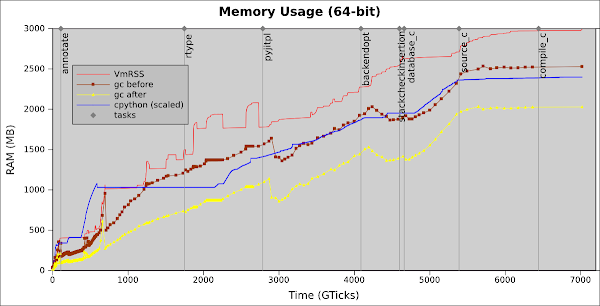

On 64-bit, things are even better, as shown by the following graph:

The general shape of the lines is similar to the 32-bit graph. However, the relative difference to CPython is much better: we need about 3 GB of RAM, just 24% more than the 2.4 GB needed by CPython. And we are still more than 2x faster!
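As a quick check of the relative overhead, the following snippet recomputes it from the rounded figures quoted in this post. The helper name is made up for illustration. Because the inputs are rounded ("about 3 GB"), the 64-bit result comes out at roughly 25% rather than exactly the 24% quoted above, which presumably was computed from the unrounded measurements.

```python
def extra_memory_percent(pypy_gb, cpython_gb):
    # How much more memory PyPy needs than CPython, in percent.
    return (pypy_gb / cpython_gb - 1) * 100


overhead_32 = extra_memory_percent(2.0, 1.1)  # 32-bit: 2 GB vs 1.1 GB
overhead_64 = extra_memory_percent(3.0, 2.4)  # 64-bit: about 3 GB vs 2.4 GB

print("32-bit: %.0f%% more memory than CPython" % overhead_32)
print("64-bit: %.0f%% more memory than CPython" % overhead_64)
```

On 32-bit the overhead is around 82%, which is what "doubling the memory usage" refers to; on 64-bit it shrinks to about a quarter.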

The memory saving is due (partly?) to the vtable ptr optimization, which is enabled by default on 64-bit because it has no speed penalty (see Unifying the vtable ptr with the GC header).

The net result of our work is that now translating PyPy on PyPy is practical and takes less than 30 minutes. It's impressive how quickly you get used to translation taking half the time -- now we cannot use CPython any more for that because it feels too slow :-).

Comments

Big huge improvement since last post. Kudos!! :-)

Please don't get me wrong, but I need to ask: is there any plan to merge pypy into cPython (or even replace it)?

BTW, I'm following the blog (please, keep these regular posts) and planning to make a donation to support your next sprint due to the regular and very well done work.

congratulations again.

This is incredibly cool! Congrats!

This is amazing. It was kind of a let down when you reported it used too much memory. But now I can on my laptop translate pypy in 32 and 64 bits using pypy itself :)

The world is good again :)

@crncosta

There are no plans for merging PyPy to CPython. I don't think "replacing" is a good word, but you can use PyPy for a lot of things already, so it is a viable Python implementation together with CPython.

I am curious... Has there ever been interest from Google to sponsor this project?

I know about unladen swallow, but has anyone there expressed interest in using pypy somewhere in their organization?

Sorry for the off topic question...

Always fascinating to read about the work you're doing. Please keep posting, and keep up the good work. You really are heroes.

Wow! this is great news.. keep us posted on what other developments you have.

like luis i am also curious about why google doesn't show a lot more interest in pypy. unladen swallow didn't really work out or did it?

Kudos!

KUTGW!!

Congrats!